October 16, 2008

School drill during a simulated alert in Texas


Readers of Inside GNSS may remember these words, uttered solemnly over their TV or radio in the 1950s and ’60s: “This is a test of the Emergency Broadcast System. This is only a test. . . .” This was followed by a piercing pilot tone, which, for me, is firmly etched in childhood memory along with tornado warnings (Midwestern upbringing) and school evacuation drills.


What was known then as the Emergency Broadcast System lives on today as the Emergency Alert System (EAS), and is designed to act as a national warning system in case of major public emergencies.

Today, as media consumption patterns change, as connected mobile devices such as cell phones and PDAs become nearly ubiquitous, and as the lessons of recent disasters, both man-made and natural, take root, the future shape of the EAS is being defined. To wit: on June 26, 2006, President Bush signed an executive order directing the Department of Homeland Security (DHS) to create a comprehensive Public Alert and Warning System for the United States. DHS is to make its recommendations to the White House within 90 days.

This article briefly summarizes the history of the EAS and the context in which the President’s Executive Order was issued, including other regulatory and agency efforts. It then examines potential features of an enhanced Emergency Alert System and suggests how location technologies can provide a rich technological complement that makes the EAS even more robust. (Basic information on the EAS can be found at <http://www.fcc.gov/cgb/consumerfacts/eas.html>.)

“Had There Been an Actual Emergency . . .”

The Emergency Alert System has its beginnings in the Cold War, when President Truman created CONELRAD (Control of Electromagnetic Radiation) in 1951 as a means to rapidly communicate with the general public during times of emergency.

In 1963, President Kennedy replaced CONELRAD with the Emergency Broadcast System and added state and local broadcast capability. In 1994, the Federal Communications Commission expanded the Emergency Broadcast System to include analog cable systems and, in doing so, renamed the system the Emergency Alert System.

The EAS is designed so that the President can issue a message from any location within 10 minutes. Participating systems must interrupt programming in progress to transmit this presidential message. Messages are disseminated hierarchically: first to “Primary Entry Point” stations, and then down to participating EAS stations.

As one might guess from the Cold War context in which the EAS was conceived, it was initially designed to respond to national threats such as nuclear attack. In practice, however, the EAS has also served as a state and local alert mechanism, for example to disseminate information about severe weather.

In light of the immediate physical impact of era-defining disasters such as 9/11 or Hurricane Katrina, or annual cyclical events such as tornadoes or hurricanes, we can safely say that most emergency events are local or regional in footprint. (Certainly Hurricane Katrina and 9/11 were national events in terms of long-term impact, both financial and emotional. Further, Katrina led to a diaspora that was also national in impact.)

At the time of this writing, the EAS comprises analog AM and FM radio stations, analog broadcast television stations, and analog cable systems. Digital television, digital audio broadcasting (DAB), digital cable, and satellite radio systems are scheduled to begin EAS participation on December 31, 2006, and direct broadcast satellite (DBS) services on May 31, 2007.

But That’s Not All

The Executive Order comes on the heels of multiple parallel efforts. In August 2004, the Federal Communications Commission (FCC) issued a notice of proposed rule-making (NPRM) that began a review of the EAS <http://www.fcc.gov/eb/Orders/2004/FCC-04-189A1.html>.

At the time, the review was largely motivated by changes in media consumption patterns. The Commission noted growing use of new media, such as satellite radio and DBS services. For example, the FCC noted that as of June 2005, DBS services reached an estimated 25 percent of TV households, or roughly 28 million households, but the DBS broadcasters did not have any EAS obligations. The FCC moved to rectify this and will broaden the EAS as described above.

In November 2005, in the wake of the 2005 Gulf Coast hurricanes, the FCC issued an order and follow-on NPRM that, in addition to incorporating the findings of the first NPRM, acknowledged shortcomings in how the EAS was used during Hurricane Katrina. With the NPRM, the FCC also solicited comment on how the EAS could be improved.

As of this writing, much of the discussion revolves around whether the EAS should be expanded to include cell phones and, if so, how to extend EAS coverage while preserving the robustness of TV- and radio-based alerts. SMS (Short Message Service), the popular cellular text-messaging technology, has its constraints.

For instance, a single SMS message can carry only 160 characters of text. Moreover, SMS is inherently a lossy medium in which messages, quite simply, are sometimes lost. Additionally, carrier networks may be bogged down if mass alerts are sent out, and cell sites are susceptible to power outages, as shown after Katrina and during the Northeast blackout of 2003.
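To make the 160-character constraint concrete, here is a minimal Python sketch that counts how many SMS segments an alert would occupy. It assumes the text uses only the GSM 7-bit default alphabet, where a stand-alone message holds 160 characters and each part of a concatenated message holds 153 because the concatenation header takes up room; other character sets lower these limits further. The function name `sms_segments` is illustrative only.

```python
import math

# Assumption: the alert uses only the GSM 7-bit default alphabet.
SINGLE_SEGMENT_CHARS = 160  # capacity of one stand-alone SMS
CONCAT_SEGMENT_CHARS = 153  # capacity of each part of a concatenated SMS


def sms_segments(text: str) -> int:
    """Return how many SMS segments are needed to carry `text`.

    A message that fits in 160 characters goes out as a single SMS;
    anything longer is split into parts, each of which gives up
    7 characters of space to the concatenation header.
    """
    if len(text) <= SINGLE_SEGMENT_CHARS:
        return 1
    return math.ceil(len(text) / CONCAT_SEGMENT_CHARS)


if __name__ == "__main__":
    alert = "EVACUATE " * 20  # 180 characters
    print(sms_segments(alert))  # 2
```

Because each segment is delivered separately, a longer alert split into several parts offers more opportunities for a piece of it to be lost, which compounds the reliability concern noted above.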

The FCC shares purview over the EAS with the Federal Emergency Management Agency (FEMA) and the National Weather Service (NWS). In October 2004, FEMA announced its Digital Emergency Alert System (DEAS) pilot, conducted in collaboration with the Association of Public Television Stations (APTS).

Phase I of the pilot demonstrated, in the National Capital Region, how datacasting over digital television broadcasts could deliver enhanced emergency alerts; for example, text crawls could be replaced with full audio and video alerts. A demonstration system was shown at the National Association of Broadcasters convention in April 2005.

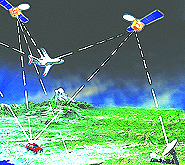

Phase II expanded the pilot to stations outside the National Capital Region and began integrating DTV-based capabilities with other warning and transport systems, such as satellite. For example, emergency alerts could first be uplinked to satellite, enabling broad national dissemination even if terrestrial infrastructure is impaired, and then received at digital TV stations for retransmission over their spectrum to local TV sets.

In Congress, in September 2005, Senator Jim DeMint (R-SC) introduced in the Senate the Warning, Alert, and Response Network (WARN) Act (S.1753), also known as the National Alert System and Tsunami Preparedness Act, to establish a national hazard alert system.

In June 2006, Representative John Shimkus (R-IL) introduced a comparable bill in the House of Representatives. S.1753 notes that a multitude of media should be used to maximize dissemination and minimize the risk of a single point of failure. This has been a goal of the FCC’s review as well.

Suffice it to say, the Emergency Alert System, and the overarching issue of comprehensive, integrated public warning, are hot topics these days.

Time for More

The move to broaden the EAS to new media distribution platforms seems timely and appropriate given the change in media consumption patterns. Households may get their information the old-fashioned way — through over-the-air TV to a fixed TV set — or through some combination of media, such as cable, satellite radio, and the Internet.

In addition to “how” people consume information, another important attribute is “where” they consume it. Today, media viewing is not necessarily a static experience. Device users may be mobile, on foot or in a car, or at least “nomadic”, that is, at a fixed location that is neither home nor office. The laptop-toting businesswoman next to you at the airport may view the news in a hotspot café, at a hotel, or on a cellphone.

Even so-called “legacy” media such as television are adapting to a more mobile use scenario. The Advanced Television Systems Committee (ATSC), the industry body responsible for the digital TV standard used in the United States, is testing a format adapted for reception by mobile TV-capable devices. Tests have shown that reception is possible at train-level speeds.

New mobile TV services such as MobiTV (available on Sprint and Cingular), Qualcomm’s MediaFLO (to be launched on Verizon in 2006), and Modeo (DVB-H broadcasts to be launched in 2007) are targeting mobile handhelds. Informa estimates that some 120 million TV-capable devices will be in service by 2010.

Further, devices equipped with TV tuners are being embedded in automobiles. A car on an evacuation route could receive EAS information while (quite logically) heading away from a home in a storm’s path.

In sum, television itself is becoming a mobile experience and, perhaps as importantly, a battery-powered experience. The ability to receive TV-like service without a wall plug is a clear benefit, given that electricity may go out during disasters.

During Katrina, for example, not only did power go out, but cellular networks and the public switched telephone network were also knocked out. Portions of the TV and FM infrastructure stayed on the air, leading to a commendation to the National Association of Broadcasters by President Bush. In the end, the broadcast infrastructure was shown to be robust.

Katrina’s Not Alone

In the immediate aftermath of Katrina, FCC Chairman Kevin Martin established an independent panel to review the impact of Hurricane Katrina on communications networks. The FCC has issued an NPRM to review the panel’s recommendations.

Although how emergency alerts are received is important, a second issue is how public agencies use the EAS. While the NWS did send severe weather warnings over the EAS, state and local officials did not activate the Emergency Alert System to send evacuation information before Katrina’s landfall. Going forward, however, these officials will probably be more aggressive in using the EAS.

A fundamental technical issue is power. TV and FM broadcasters, for example, typically have backup power available for business reasons: loss of service means loss of revenue.

In contrast, cell sites, which are usually in a much denser network than TV stations and repeater sites, often do not have backup power. This points to the quality of service that can be expected from each medium. However, even broadcasters faced power constraints in the wake of Katrina. Moreover, bringing fuel for the backup generators at TV stations proved difficult due to the prolonged flooding.

One recommendation, then, is to set standards for how long backup power must be sustained and to establish fuel supply routes in advance of disaster. Although the independent FCC panel analyzing Katrina’s impact noted that fuel supplies did run out in some cases, it recommended only “checklists” for emergency preparedness and did not go so far as to recommend standards for backup fuel. In the wake of 9/11, industry formed the Media Security and Reliability Council, which made similar recommendations. At this point, checklists would not seem to be enough.

Going forward, what disasters might be faced? This article was written in part in San Francisco, home to multiple potential terrorist targets and adjacent to Silicon Valley, one of America’s most influential clusters of innovation. (Parts of it were also written on an airplane in the immediate wake of the averted London terrorist plot.) The San Francisco Bay Area is also adjacent to two major tectonic faults.

As the Northridge and Loma Prieta earthquakes showed, hurricanes are not the only natural disaster America faces. Further, while the impact of SARS was ultimately minimal, it gave ample evidence of how a “Patient Zero” could easily spread infectious disease across borders: SARS emanated from southeastern China, yet it made its way to Taiwan, Hong Kong, and even Toronto.

A Complementary Solution

What other problems could the EAS solve? The EAS is currently a platform for one-way dissemination of information, with no confirmation of receipt and no feedback loop. Could connected devices acknowledge receipt back to a centralized database? Could devices pre-registered as “high priority”, such as those belonging to law enforcement, receive higher tiers of alerts?

From the perspective of position location and geographic information systems, the value of location-aware EAS receivers is apparent. Cellular devices can be coarsely located through cell-site-based location technologies and could thus receive “Reverse 911” alerts. Devices located through GPS or other means could be polled and sent specific, geo-tagged instructions. Location-aware handsets could then acknowledge or ignore alerts as appropriate.
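As an illustration of how a location-aware receiver might decide whether a geo-tagged alert applies to it, here is a minimal Python sketch. It assumes a hypothetical alert format that targets a circle (center latitude/longitude and a radius in kilometers), computes the haversine great-circle distance on a spherical Earth, and treats the device’s position uncertainty (large for a coarse cell-site fix, small for GPS) as a margin, so that a device that might be inside the area shows the alert rather than ignoring it. `GeoAlert` and `should_display` are illustrative names, not part of any EAS specification, and the sample coordinates are approximate.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical-Earth approximation


@dataclass
class GeoAlert:
    """Hypothetical geo-tagged alert: a circular target area plus message text."""
    center_lat: float  # degrees
    center_lon: float  # degrees
    radius_km: float
    text: str


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometers between two lat/lon points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    # Clamp to guard against tiny floating-point overshoot above 1.0.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def should_display(alert: GeoAlert, lat: float, lon: float,
                   uncertainty_km: float = 0.0) -> bool:
    """Return True if the device could be inside the alert area.

    The position uncertainty is subtracted from the distance, so a coarse
    fix near the boundary errs toward showing the alert.
    """
    distance = haversine_km(alert.center_lat, alert.center_lon, lat, lon)
    return distance - uncertainty_km <= alert.radius_km


if __name__ == "__main__":
    # 10 km alert circle centered approximately on San Francisco.
    alert = GeoAlert(37.7749, -122.4194, 10.0, "Shelter in place")
    print(should_display(alert, 37.7749, -122.4194))  # device at the center: True
    print(should_display(alert, 38.5816, -121.4944))  # roughly 120 km away: False
```

Erring toward display when the fix is coarse trades some over-alerting for a lower chance that a device inside the danger zone stays silent. A fielded system would more likely describe target areas as polygons than as circles.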

TV itself can be used for position location (as the author’s company does), including in areas where conventional satellite-based positioning solutions are not effective. This would address the Presidential Decision Directive of December 2004 on Positioning, Navigation, and Timing, which noted the need for augmentations to GPS to improve system integrity, availability, and accuracy. GPS’s shortcomings indoors and in urban settings, the most likely targets of terrorist attack, are well known.

Conversely, the broadcast infrastructure is well correlated with urban centers, making it an ideal complement to GNSS coverage in open and rural areas. Further, the low frequency and high power of TV signals make them effective indoors, which benefits both position location and communication.
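The frequency half of that claim can be illustrated with the free-space path-loss formula for isotropic antennas, where $d$ is distance, $f$ is carrier frequency, and $c$ is the speed of light. Taking 600 MHz as a representative UHF TV channel (an assumption; U.S. UHF TV channels span roughly 470 to 806 MHz) and the GPS L1 frequency of 1575.42 MHz, the difference in loss at the same distance is:

$$
L_{\mathrm{FS}}(d, f) = \left(\frac{4\pi d f}{c}\right)^{2}
\quad\Longrightarrow\quad
\Delta L = 20\log_{10}\!\left(\frac{f_{\mathrm{L1}}}{f_{\mathrm{TV}}}\right)
= 20\log_{10}\!\left(\frac{1575.42\ \mathrm{MHz}}{600\ \mathrm{MHz}}\right) \approx 8.4\ \mathrm{dB}
$$

This rough figure covers only free-space spreading. It leaves out building penetration, multipath, and the large differences in transmit power and range between a terrestrial tower and a satellite, so it should be read as an illustration of the trend rather than as a link budget.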

Potential applications include positioning of and communications to first responders; geo-targeted alerts, such as weather warnings or Amber Alerts; tracking of hazardous material; tracking of vehicles or cargo; even patient triage. Rescue agencies have noted that one major hurdle they face in disaster settings is tracking their own assets, such as rental cars.

In addition, as the APTS-FEMA trial demonstrated, TV has the bandwidth to disseminate rich audio and video alerts.

In sum, TV provides a pre-built, robust infrastructure capable of supporting location-rich Emergency Alerts, and complements the three major functions of GPS – positioning, navigation, and timing. Wedding the EAS with PNT seems an intuitive way to kill two birds with one stone.

By

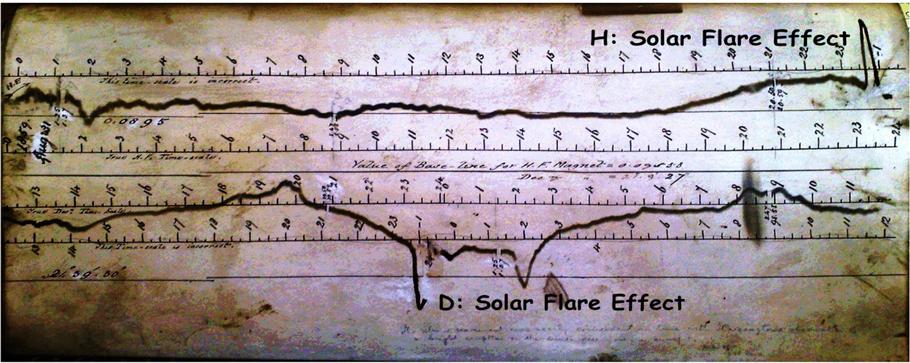

One of 12 magnetograms recorded at Greenwich Observatory during the Great Geomagnetic Storm of 1859

One of 12 magnetograms recorded at Greenwich Observatory during the Great Geomagnetic Storm of 1859 1996 soccer game in the Midwest, (Rick Dikeman image)

1996 soccer game in the Midwest, (Rick Dikeman image)

Nouméa ground station after the flood

Nouméa ground station after the flood A pencil and a coffee cup show the size of NASA’s teeny tiny PhoneSat

A pencil and a coffee cup show the size of NASA’s teeny tiny PhoneSat Bonus Hotspot: Naro Tartaruga AUV

Bonus Hotspot: Naro Tartaruga AUV

Pacific lamprey spawning (photo by Jeremy Monroe, Fresh Waters Illustrated)

Pacific lamprey spawning (photo by Jeremy Monroe, Fresh Waters Illustrated) “Return of the Bucentaurn to the Molo on Ascension Day”, by (Giovanni Antonio Canal) Canaletto

“Return of the Bucentaurn to the Molo on Ascension Day”, by (Giovanni Antonio Canal) Canaletto The U.S. Naval Observatory Alternate Master Clock at 2nd Space Operations Squadron, Schriever AFB in Colorado. This photo was taken in January, 2006 during the addition of a leap second. The USNO master clocks control GPS timing. They are accurate to within one second every 20 million years (Satellites are so picky! Humans, on the other hand, just want to know if we’re too late for lunch) USAF photo by A1C Jason Ridder.

The U.S. Naval Observatory Alternate Master Clock at 2nd Space Operations Squadron, Schriever AFB in Colorado. This photo was taken in January, 2006 during the addition of a leap second. The USNO master clocks control GPS timing. They are accurate to within one second every 20 million years (Satellites are so picky! Humans, on the other hand, just want to know if we’re too late for lunch) USAF photo by A1C Jason Ridder.  Detail of Compass/ BeiDou2 system diagram

Detail of Compass/ BeiDou2 system diagram Hotspot 6: Beluga A300 600ST

Hotspot 6: Beluga A300 600ST