Critical infrastructure has a compelling need to infer the assurance of position, velocity, and time (PVT) estimates, as do users in general. However, traditional positioning, navigation, and timing (PNT) platforms do not offer a principled way to infer assurance from multiple anti-spoofing (A-S) techniques, situational awareness (SA) information, and other auxiliary sources such as network data. Here we introduce a PNT Trust Inference Engine (PNTTING) that can assess PNT trust according to probabilistic models with rigorous semantics.

PNTTING draws from techniques developed in the statistical relational learning and probabilistic programming research communities. For background on these techniques, and for details on the probabilistic bases, definitions, and parameters involved in setting up PNTTING, readers are referred to the full technical paper referenced at the end of this article. Here we only briefly mention the main concepts that are of interest to those two communities and pass quickly to the applications and examples that will be of most interest to our PNT audience.

PNTTING operates in conjunction with the PVT computational engine in a PNT platform. A PNTTING-capable platform is a system that facilitates mechanisms for probabilistic inference and computes trust assessments for PVT inputs and outputs using trust assumptions in those inputs.

This trust inference engine can be used in two fundamental ways:

• PNTTING can be used to evaluate the extent to which a PNT platform provides PVT assurance. As such, PNTTING gives a concrete system instantiation that can serve as a reference for implementing several possible configurations of an assured PNT platform.

• PNTTING provides an innovative and principled way to estimate assurance metrics from multiple sources with various degrees of trust during run-time. It allows decisions about how to use sources, and it allows reasoning about how estimates are computed and why.

The engine comprises inputs, processing, and outputs. Inputs include data (e.g., measurements collected while the platform is being used in the field) and probabilistic models and inference tasks written by assurance model designers. Inference tasks describe conditional probability queries and maximum a posteriori (MAP) queries related to assessing assurance, subject to a set of probabilistic models.
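To make the two kinds of inference task concrete, the following minimal sketch runs a conditional probability query and a MAP query against a toy two-state model. The states, the single alarm observation, and all probabilities are hypothetical values chosen for illustration; they are not taken from PNTTING.

```python
# Hypothetical two-state model: is the PVT input "valid" or "spoofed"?
# All numbers below are illustrative assumptions, not PNTTING parameters.
PRIOR = {"valid": 0.95, "spoofed": 0.05}
# P(monitor raises an alarm | state)
P_ALARM = {"valid": 0.02, "spoofed": 0.90}


def posterior(alarm: bool) -> dict:
    """Conditional probability query: P(state | alarm observation)."""
    joint = {
        state: PRIOR[state] * (P_ALARM[state] if alarm else 1.0 - P_ALARM[state])
        for state in PRIOR
    }
    total = sum(joint.values())
    return {state: p / total for state, p in joint.items()}


def map_query(alarm: bool) -> str:
    """MAP query: the single most probable state given the observation."""
    post = posterior(alarm)
    return max(post, key=post.get)
```

The conditional query returns a full distribution over states, whereas the MAP query collapses that distribution to its most probable value; an assurance metric designer would pick whichever form the metric needs.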

Another type of input is data transformation programs, which encode descriptions of how to interpret raw measurements, including units and other relevant information, as well as how to encode outputs. PNTTING processing requires a probabilistic computing engine that in practice can be instantiated in a variety of implementations depending on the mission of the PNT platform and the access to the various inputs. Finally, outputs include posterior trust assessments as well as the assurance metrics and PVT estimates to report to the user.

For a fuller discussion of PNTTING inputs, outputs, computation, terminology such as PNT assurance and related concepts, what we mean by trust, and the basics of probabilistic inference, refer to the full conference paper mentioned at the conclusion.

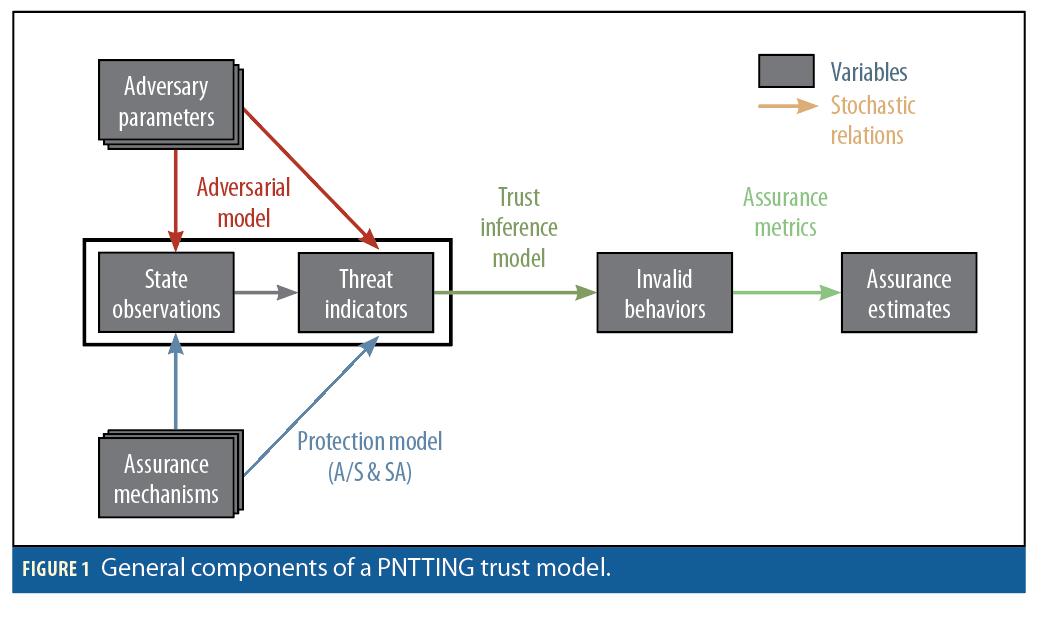

As Figure 1 illustrates, PNTTING trust models describe stochastic relationships between observations and other variables that may be indicators of an attack. Furthermore, these models automate the process of estimating posterior distributions that capture the probability that a PNT platform exhibits (or will exhibit) valid behavior.

Adversary parameters may correspond to one or several families of adversaries that may (or may not) attempt to influence a PVT platform (e.g., an adversary that may spoof GPS signals to pull the PVT solution with one of several profiles). A-S and SA techniques estimate, based on state observations (i.e., pseudoranges, RF signals, or mapping information), continuous or discrete variables that may be indicative of adversarial manipulation. These threat indicators inform our beliefs that valid solutions (with accuracy, integrity, availability, and continuity) are (or will be) produced by the PNT platform. Because beliefs are quantified via probability distributions, these need to be transformed into actual continuous or discrete assurance estimates (numbers or labels) that are meaningful to the end platform user.

Broadly speaking, PNT assurance is defined as the protections needed to secure required PNT capabilities for the end user; these protections could be provided by the whole PNT enterprise. PVT assurance comprises those protections associated with the PVT computation. These concepts parallel the definitions of cybersecurity. Being “assured” is a measure of how well these protections are achieved.

The associated security goals for PVT assurance are accuracy, integrity, availability, and continuity. These goals vary according to the user and their mission. We list accuracy as a security goal, since accuracy of some methods will manifest in how assured we think those methods are when fused with other information. That said, accuracy of the PVT solution is, of course, the primary validity parameter. Integrity is meant in the security sense that the relevant information has not been altered (and is authentic). Availability and continuity apply to both inputs and outputs.

PNTTING Applications

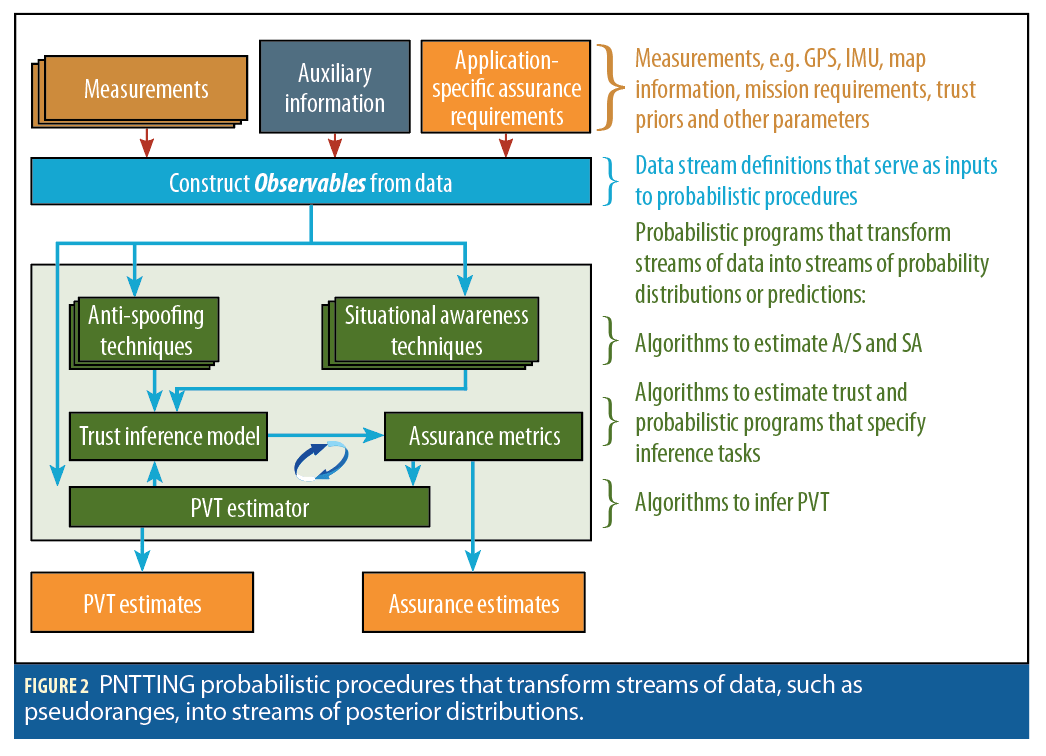

In a programming pattern similar to reactive programming, developers define how to combine data streams using composing functions. These determine what ultimately gets passed to listeners. Thus, in simple terms, developing a PNTTING user application requires developers to define how to process observations; which probabilistic models to use for PVT trust assessments and/or PVT estimates; and how to convert such measurements into assurance estimates via assurance metrics. Instead of providing traditional functions, PNTTING developers describe how to combine observations via probabilistic procedures. Correspondingly, assurance metric designers specify inference tasks to estimate posterior distributions. We illustrate this approach in Figure 2.

From a programming pattern perspective, reactive programming can be thought of as defining functions whose inputs are data streams and whose outputs are data streams. This analogy can be helpful because the inputs and outputs of PNT platforms can often be thought of as data streams. For example, pseudoranges or IMU (Inertial Measurement Unit) measurements can naturally be described as data streams. Also, periodic position, velocity and time estimates can be described as data streams. However, there are multiple subtle points to keep in mind, as we describe next.

Probabilistic programs describe probability distributions conditioned on observations. These generally transform data streams of probability distributions into data streams of probability distributions. In some cases, data streams will simply contain pseudoranges, IMU measurements, etc. However, in other cases, data streams will consist of sequences of probability distribution objects, which can be sampled.

For example, PNT platforms, such as software-defined receivers, produce streams of events, each of which can be represented by a vector that includes pseudoranges and other information. Such streams can be transformed, e.g., by computing a PVT estimate using a probabilistic version of the well-known weighted least squares method. The output of such an operator is then a stream of PVT posterior distributions. This new stream can be transformed by yet another operator, turning the stream of PVT posteriors into a stream of trust posterior distributions or predictions.
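The chaining of stream operators described above can be sketched with Python generators. This is a deliberately simplified illustration, not the PNTTING implementation: the estimator is a scalar weighted least squares (one unknown rather than a full PVT state), the trust operator compares the jump between consecutive estimates under two hypothetical hypotheses, and every name and parameter below is an assumption.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple


@dataclass
class Gaussian:
    """A posterior summarized by its mean and variance."""
    mean: float
    var: float


def gaussian_pdf(x: float, mean: float, var: float) -> float:
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)


def wls_operator(events: Iterable[List[Tuple[float, float]]]) -> Iterator[Gaussian]:
    """Stream of events -> stream of posteriors.

    Each event is a list of (measurement, variance) pairs for one scalar
    unknown; weights are inverse variances (scalar weighted least squares).
    """
    for event in events:
        weights = [1.0 / var for _, var in event]
        total = sum(weights)
        mean = sum(w * y for w, (y, _) in zip(weights, event)) / total
        yield Gaussian(mean, 1.0 / total)


def trust_operator(
    posteriors: Iterable[Gaussian],
    prior_valid: float = 0.9,     # hypothetical prior belief in validity
    sigma_valid: float = 1.0,     # hypothetical jump spread when valid
    sigma_attack: float = 10.0,   # hypothetical jump spread when manipulated
) -> Iterator[float]:
    """Stream of posteriors -> stream of P(valid | jump between estimates)."""
    previous = None
    for post in posteriors:
        if previous is None:
            yield prior_valid  # no jump observed yet, so report the prior
        else:
            jump = post.mean - previous.mean
            noise = post.var + previous.var
            like_valid = gaussian_pdf(jump, 0.0, sigma_valid ** 2 + noise)
            like_attack = gaussian_pdf(jump, 0.0, sigma_attack ** 2 + noise)
            num = prior_valid * like_valid
            yield num / (num + (1.0 - prior_valid) * like_attack)
        previous = post
```

Composing the two operators, as in `trust_operator(wls_operator(events))`, yields one trust value per incoming event, which mirrors the data-stream-in, data-stream-out pattern described in the text.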

PNTTING Application Examples

Here we describe PNTTING applications to illustrate the concepts introduced in this article. In particular, we explain how to develop assurance metrics for PVT solutions computed from Multi-GNSS. In contrast to Figure 2, the PVT estimators in these examples are not implemented within the PNTTING Applications. Rather, PVT solutions are computed by an external PVT platform. Furthermore, these examples do not feed trust assessments into the calculation of subsequent PVT estimates (recursively). Our rationale for this simplification is to illustrate how to use PNTTING in combination with an existing PVT estimator.

Assured PVT using Auxiliary Information

While A-S and SA techniques usually refer to techniques that are applied to the input data or signals of a PVT algorithm, this example illustrates a broader view of SA. Namely, it shows how one could use mapping information and multiple PVTs to infer integrity and accuracy assurance.

Consider a scenario where two PVTs are computed from a single Multi-GNSS platform. One PVT solution uses GPS signals and the other uses Galileo and GLONASS signals. Furthermore, the PNT platform in this scenario is in a vehicle moving on a road within a region for which mapping information is available.

PNTTING would allow us to encode the assumption that the elevation of a PVT solution is consistent with the elevation of the point on the map with the corresponding latitude and longitude. Also, when information about roads (i.e., a set of points close to a given latitude-longitude pair) is available, we can encode the assumption that when the PNT platform is on the road, the distance to the nearest road point on the map is probably small. Evidence of inconsistencies with elevation and road information can be used to inform our belief about the integrity of the PVT solution.

Multiple PVTs would allow us to reason about loss in accuracy. Concretely, if we assume that (in a given region) the two PVT solutions in this example diverge according to a known distribution, then evidence of an inconsistent difference can inform our belief that our PVT solution is accurate.
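A minimal sketch of how the map-consistency and PVT-divergence assumptions could be turned into posterior beliefs is shown below. It is not the model used in this article: it assumes independent zero-mean Gaussian residuals, a single "ok" versus "not ok" hypothesis pair for each metric, and hypothetical spreads in meters.

```python
import math


def normal_pdf(x: float, sigma: float) -> float:
    """Zero-mean Gaussian density with standard deviation sigma."""
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def posterior_ok(residuals, sigmas_ok, sigmas_bad, prior_ok=0.95):
    """P('ok' | residuals), treating the residuals as independent.

    sigmas_ok / sigmas_bad are the assumed spreads of each residual when
    the solution is trustworthy / not trustworthy (hypothetical values).
    """
    like_ok = like_bad = 1.0
    for r, s_ok, s_bad in zip(residuals, sigmas_ok, sigmas_bad):
        like_ok *= normal_pdf(r, s_ok)
        like_bad *= normal_pdf(r, s_bad)
    num = prior_ok * like_ok
    return num / (num + (1.0 - prior_ok) * like_bad)


def integrity_belief(elev_residual_m: float, road_distance_m: float) -> float:
    """Belief in integrity from elevation and road consistency.

    The road distance is non-negative; using a zero-mean Gaussian for it
    differs from a half-normal only by a factor that cancels in the ratio.
    """
    return posterior_ok(
        [elev_residual_m, road_distance_m],
        sigmas_ok=[5.0, 8.0],     # hypothetical: map and PVT agree closely
        sigmas_bad=[60.0, 80.0],  # hypothetical: large disagreement possible
    )


def accuracy_belief(pvt_divergence_m: float) -> float:
    """Belief in accuracy from the divergence between the two PVT solutions."""
    return posterior_ok([pvt_divergence_m], sigmas_ok=[4.0], sigmas_bad=[40.0])
```

With these assumed spreads, small residuals leave both beliefs near one, while a 50 m elevation mismatch or a 30 m divergence between the GPS solution and the Galileo/GLONASS solution drives the corresponding belief toward zero.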

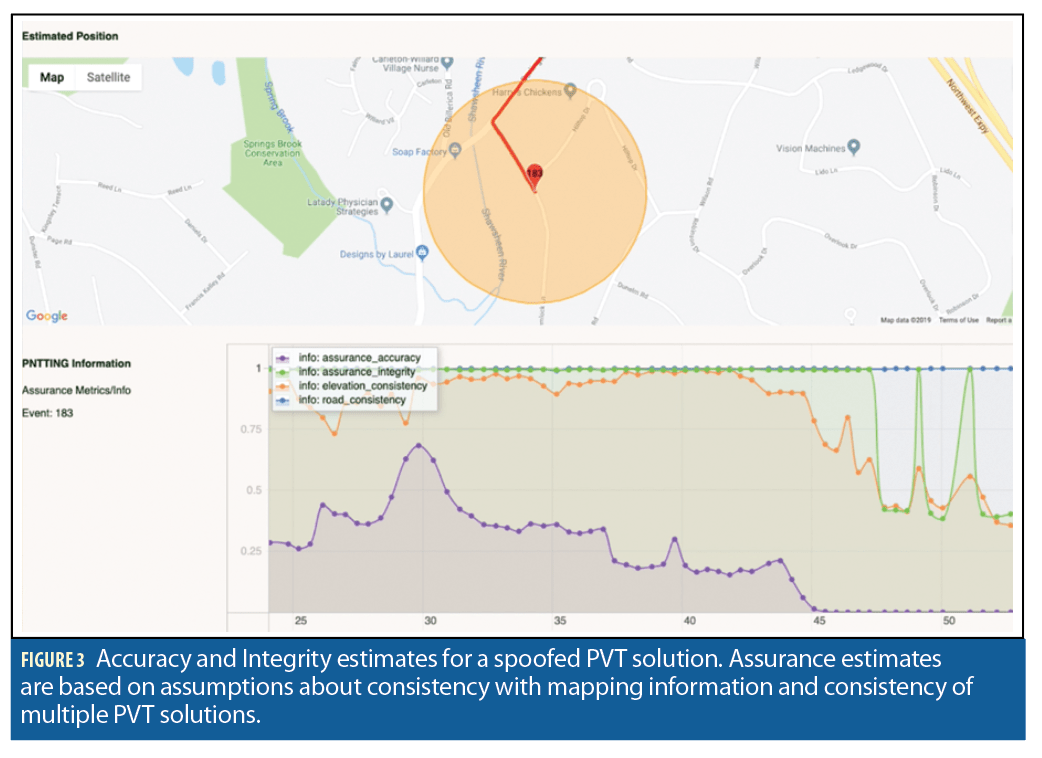

As we illustrate in Figure 3, these assumptions yield the specification of two assurance metrics. Concretely, the posterior trust assessments can be transformed via assurance metrics into numbers in the [0,1] interval, as well as into a color (on a red-to-green scale) that reflects integrity and the radius of a circle around the PVT estimate that reflects accuracy.
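As a sketch of the final mapping step, the functions below convert a belief in [0,1] into a display color and a circle radius. The linear mappings, the radius bounds, and the reading of the circle radius as an accuracy indicator are assumptions made for illustration; the article does not specify the mapping used to produce its figures.

```python
def _clamp01(p: float) -> float:
    return min(1.0, max(0.0, p))


def integrity_color(p_integrity: float) -> str:
    """Map an integrity belief to a hex color on a red-to-green scale."""
    p = _clamp01(p_integrity)
    red = round(255 * (1.0 - p))
    green = round(255 * p)
    return f"#{red:02x}{green:02x}00"


def accuracy_radius_m(p_accuracy: float,
                      min_radius_m: float = 5.0,     # hypothetical bound
                      max_radius_m: float = 100.0):  # hypothetical bound
    """Map an accuracy belief to a circle radius: lower belief, larger circle."""
    p = _clamp01(p_accuracy)
    return max_radius_m - p * (max_radius_m - min_radius_m)
```

A designer could equally choose a nonlinear or thresholded mapping; the point is only that the metric is a deterministic function applied to the posterior.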

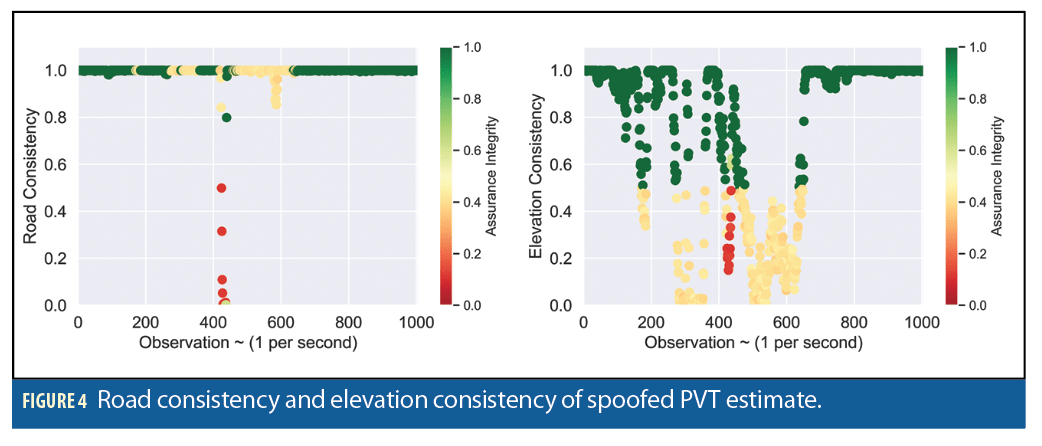

Note that this particular model reflects the belief that both elevation and road information must be inconsistent to infer integrity assurance below 0.2. However, a discrepancy in elevation alone can reduce integrity assurance to around 0.5. The spoofed solutions in Figure 4 remained on the road; therefore, the road consistency remained high.

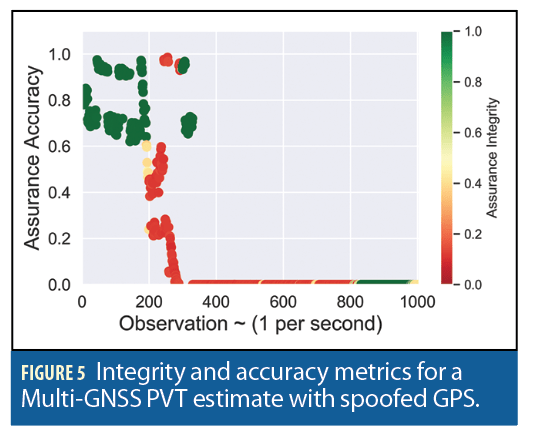

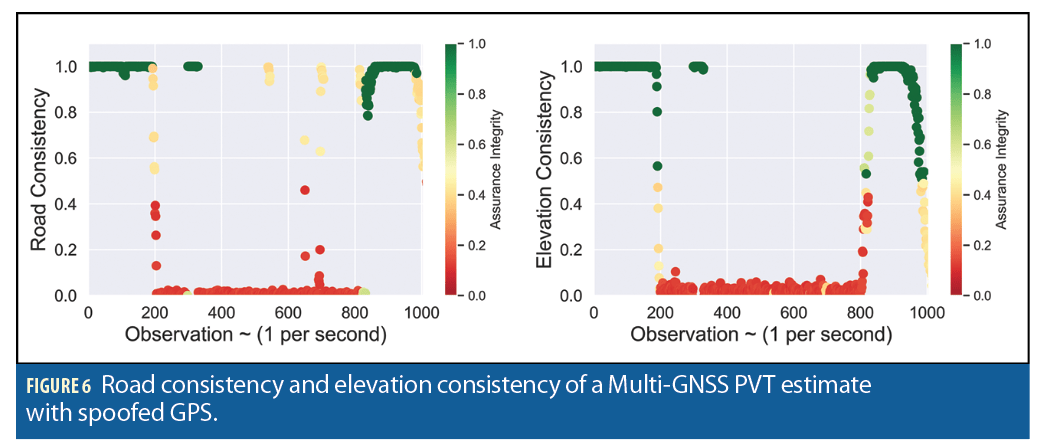

If we apply the same trust model to a PVT solution that is computed with all GPS, Galileo, and GLONASS, then the assurance metrics change, as we illustrate in Figure 5 and Figure 6.

Assured PVT via Anti-Spoofing Information

PNTTING can be used to implement a user application that produces PVT assurance estimates for PVT estimates computed traditionally from GPS messages using a weighted least squares (WLS) estimator. Trust assessments can be informed by multiple A-S and SA techniques. For example, consider the following A-S and SA information. A-S: A position monitor, which estimates the posterior probability that a set of observations matches an assumed prior distribution of position deltas; a velocity monitor, which estimates the posterior probability that a set of observations matches an assumed prior distribution of velocity deltas; and a clock monitor, which estimates the posterior probability that the code-carrier divergence (CCD) and the clock rates of observations match assumed prior distributions for these two features. SA: A power monitor, which estimates the posterior probability that the power measurements of all of the satellite signals in an observation are consistent with a prior distribution.

A-S and SA information can be fed to a probabilistic trust inference algorithm as inputs (e.g., information precomputed by a PNT platform) or can be estimated as part of a PNTTING model. The former is more appropriate when A-S or SA information is precomputed outside the PNTTING application and is passed as traditional input. However, in some cases it is more appropriate to compute A-S or SA estimates within the PNTTING Application. PNTTING probabilistic procedures are more powerful because these estimate probability distributions, which can be combined with other probability distributions using the calculus of probabilities.

Trust inference algorithms combine multiple A-S and SA assessments according to trust assumptions and application-specific assurance requirements. To illustrate how to specify PNTTING trust inference models, we wish to compute PVT assurance metrics that reflect our confidence in our belief that a PVT solution was:

• computed in the presence of no adversary (scenario_normal);

• manipulated to alter the position and velocity estimate (scenario_pv); or,

• manipulated to alter the clock bias and clock rate (scenario_clock).

In this case, our trust inference model can be described using an influence diagram.

Knowing the prior distributions of the five random variables power, position, speed, clock rate, and CCD (corresponding to the monitors we described above) given an attack scenario, together with prior assumptions about the probability of each scenario, we can estimate a posterior distribution of the scenario given A-S and SA information (the decision node in the influence diagram). Then we can map these decisions (predictions) to concrete assurance metrics (the value node in the influence diagram) that can also take into account assurance requirements.
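The scenario inference described above can be sketched as a small Bayesian classifier over the three scenarios. The sketch assumes the five features are conditionally independent given the scenario and Gaussian after normalization; the prior, the per-scenario means and spreads, and the feature names are hypothetical, and the influence diagram used by the authors may encode different dependencies.

```python
import math

# Hypothetical prior over scenarios (illustrative values only).
SCENARIO_PRIOR = {"scenario_normal": 0.90, "scenario_pv": 0.05, "scenario_clock": 0.05}

# Hypothetical (mean, std) of each normalized feature under each scenario.
NOMINAL = (0.0, 1.0)
FEATURE_MODEL = {
    "scenario_normal": {"power": NOMINAL, "position": NOMINAL, "speed": NOMINAL,
                        "clock_rate": NOMINAL, "ccd": NOMINAL},
    "scenario_pv": {"power": (1.0, 1.5), "position": (4.0, 2.0), "speed": (4.0, 2.0),
                    "clock_rate": NOMINAL, "ccd": NOMINAL},
    "scenario_clock": {"power": (1.0, 1.5), "position": NOMINAL, "speed": NOMINAL,
                       "clock_rate": (4.0, 2.0), "ccd": (4.0, 2.0)},
}


def log_normal_pdf(x: float, mean: float, std: float) -> float:
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2 * math.pi))


def scenario_posterior(observation: dict) -> dict:
    """P(scenario | monitor features), assuming conditional independence."""
    log_joint = {}
    for scenario, prior in SCENARIO_PRIOR.items():
        total = math.log(prior)
        for feature, value in observation.items():
            mean, std = FEATURE_MODEL[scenario][feature]
            total += log_normal_pdf(value, mean, std)
        log_joint[scenario] = total
    peak = max(log_joint.values())  # subtract the peak for numerical stability
    unnorm = {s: math.exp(v - peak) for s, v in log_joint.items()}
    norm = sum(unnorm.values())
    return {s: u / norm for s, u in unnorm.items()}


def assurance(observation: dict) -> float:
    """One possible assurance metric: the posterior of the no-adversary scenario."""
    return scenario_posterior(observation)["scenario_normal"]
```

Other metrics could weight the scenarios by mission-specific cost, which is the role the value node plays in the influence diagram.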

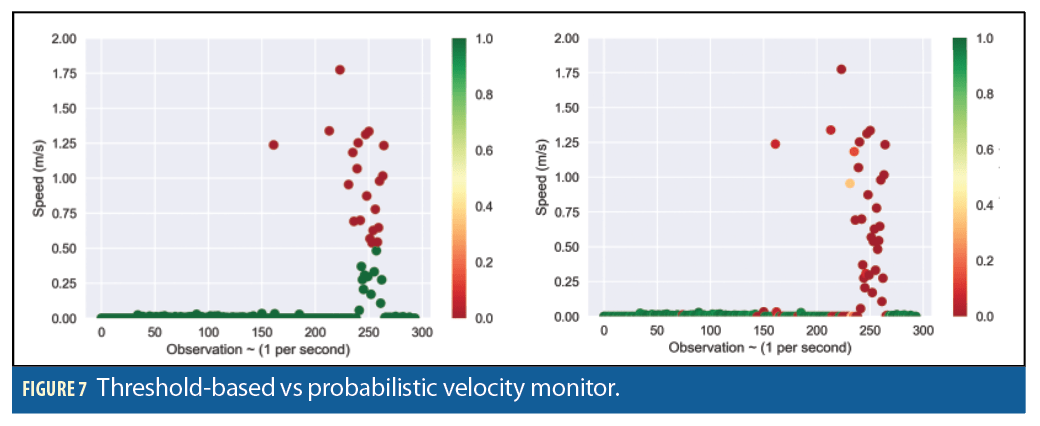

PNTTING allows us to describe, in simple terms, A-S and SA techniques that are more robust than those based only on thresholds. Consider one of the scenarios designed to manipulate the position and velocity of the solution. Figure 7 shows that we can model a velocity monitor by reasoning from observed A-S information (left) or by estimating such information (right). That is, we can use a Boolean fault, or we can estimate the probability that the observations in a window match the assumed prior for speed, in this case.

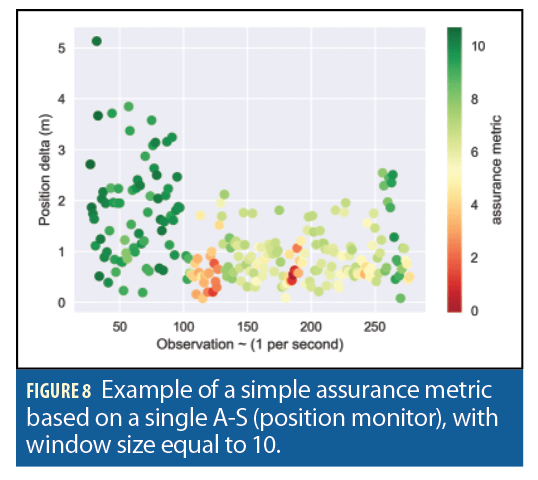

Note that the monitor as a PNTTING probabilistic procedure is more robust. The probabilistic velocity monitor detects the attack in a timely manner, and, because the assessments are probability distributions, we can use them to estimate our confidence in such assessments. For example, Figure 8 illustrates one way to return a numeric value between 0 and 10 that corresponds to our confidence in the truthfulness of the solution based on the corresponding assurance mechanism.

Threshold monitors fail to capture the nature of how observations differ from our assumed priors. In fact, Figure 8 shows the performance of a probabilistic position monitor for a static scenario. In this scenario a threshold-based monitor would fail to detect the attack. This is because, during the attack, the position delta was actually abnormally low, rather than abnormally high. Therefore, the threshold-based faults would not be raised. Thus, instead of using thresholds, our approach consists of asking the question: What is the probability that a set of N observed position deltas (resp. velocity deltas) are drawn from the assumed prior distribution for that statistic?
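The question posed above can be sketched as a comparison between two hypotheses for a window of N deltas: the deltas follow the assumed prior, or they follow a much broader alternative. This is one simple way to pose the query, not necessarily the one used to produce the figures; the Gaussian forms, the prior mean and spreads, and the threshold value are hypothetical.

```python
import math


def log_normal_pdf(x: float, mean: float, std: float) -> float:
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2 * math.pi))


def prob_matches_prior(deltas,
                       prior_mean=1.0,    # hypothetical typical delta (m)
                       prior_std=0.3,     # hypothetical spread of the prior
                       broad_std=5.0,     # hypothetical spread of the alternative
                       prior_match=0.95):
    """P(window of deltas is drawn from the assumed prior | deltas).

    Works for any window length N; the alternative hypothesis is a broad
    Gaussian centered on the same mean.
    """
    log_match = math.log(prior_match) + sum(
        log_normal_pdf(d, prior_mean, prior_std) for d in deltas)
    log_alt = math.log(1.0 - prior_match) + sum(
        log_normal_pdf(d, prior_mean, broad_std) for d in deltas)
    peak = max(log_match, log_alt)  # subtract the peak for numerical stability
    match = math.exp(log_match - peak)
    alt = math.exp(log_alt - peak)
    return match / (match + alt)


def threshold_fault(deltas, threshold=2.0):
    """Classic monitor: raise a fault only if some delta exceeds the threshold."""
    return any(d > threshold for d in deltas)
```

For a window of abnormally low deltas such as `[0.05, 0.02, 0.04, 0.03]`, `threshold_fault` stays silent because nothing exceeds the threshold, while `prob_matches_prior` drops close to zero because values that far below the assumed mean are unlikely under the prior.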

This approach is very powerful. First, we just illustrated that threshold-based monitors allow too much flexibility to an attacker. Observations far from regular trigger similar faults to those slightly over the threshold. Second, our approach can be easily extended to query for this posterior probability with 10, 50, or any number of observations instead of the last 4. This is particularly powerful when dealing with adversaries that will attempt to manipulate a solution in stealthy mode over a longer period of time. Finally, outputs of queries are approximate probability distributions that can yield multiple assurance metrics.

Related Work

PNTTING categorically differs from specific sensor fusion approaches because PNTTING is an architecture designed to allow model updates to accommodate changes in the environment and application. This is particularly important because sources of information are not always available, and threats change depending on the context and mission.

Conclusion and Future Work

Our description of the PNTTING architecture is just the first step in realizing the potential of PNTTING to serve both for system assessment and operational assurance evaluation. To go forward, several directions need to be explored:

Leveraging and/or creation of realistic test vectors. There is a paucity of test vectors for PNT assurance, and examples are needed that exercise all layers of the PNT platform. For example, test vectors could be at the raw sensor measurement level, at the feature extraction level, at the higher PVT event level, etc. PNTTING would need to adjust based on the nature of the inputs, but to demonstrate such adjustments, input data (and a wide choice of such data) is needed.

Modeling of trust assumptions, including interdependencies. PNTTING requires trust assumption models, which include prior information about sensor monitors and so forth (as the examples above illustrated). The interdependencies between these monitors also need to be modeled, which requires a computation (done using its own test data, etc.) separate from PNTTING.

Exploration of computational implementations. Our examples used Anglican, and we discussed the possibilities of other computational frameworks. The research in such frameworks is ongoing, and PNTTING will need to continually re-evaluate the best way, probably PNT platform dependent, to perform its calculations.

Integration with existing PNT platforms. PNTTING is being developed using pre-made test scenarios, which are adequate for development, and even for some types of system assessments. But using PNTTING for run-time assurance metric generation will require integration with a PNT platform, preferably one with an open architecture such as a software-defined radio.

Acknowledgments

The authors acknowledge the many fruitful discussions with a wide range of MITRE colleagues, and especially the IPA (Inferring PNT Assurance) team, Dr. David Stein and Dr. Warner Warren. We also thank Joshua Guttman, Perry Loveridge, Erik Lundberg, and Nolan Poulin for reviewing this manuscript.

This magazine article presents only a portion of the full technical paper, which contains discussion of many probabilistic concepts and terms underpinning PNTTING. For that full discussion, see “Positioning, Navigation, and Timing Trust Inference Engine,” ION/ITM 2020. Approved for Public Release; Distribution Unlimited. Public Release Case Number 19-3629, The MITRE Corporation.

Authors

Dr. Andres Molina-Markham is a Lead Cyber Security Researcher in the Trust and Assurance Cyber Technologies Department. His research focuses on developing novel attacks and defenses for AI-enabled systems, as well as metrics that evaluate how well a set of defense mechanisms protect specific aspects of an AI-enabled system.

Dr. Joseph J. Rushanan is a Principal Mathematician in the Communications, SIGINT, & PNT Department. He has worked on GPS for over 20 years, including as part of the M-code Signal Design Team and the L1C Signal Design Team, and was the 2019 recipient of ION’s Captain P.V.H. Weems Award. He also teaches cryptography for the Cybersecurity program at Northeastern University’s Khoury College of Computer Sciences.