Autonomous driving has attracted considerable interest over the past decades because it is expected to benefit both individuals and society: safer roads, less traffic congestion, fewer accidents and deaths, shorter commutes and lower commuting-related pollution. Work on the topic is ongoing, and much research and experimentation has gone into making cars perceive their environment, make human-like decisions and drive on their own.

DI QUI, Polaris Wireless

A: Today, many self-driving vehicles are being tested on the road in controlled environments, under the supervision of a human driver and in good road and environmental conditions. Researchers forecast that by 2025 [1], there will be approximately 8 million autonomous or automated vehicles on the road. Before joining everyday traffic, self-driving cars will first have to progress through six levels of driver assistance technology.

The Society of Automotive Engineers (SAE) defines six levels of driving automation ranging from 0 to 5 [2]. These levels have been adopted by the U.S. Department of Transportation (DoT).

Level 0—No Driving Automation

Today, most vehicles on the road are at this level and manually controlled. The human performs the entire dynamic driving task, although systems may be in place to help the driver. Information sources that include GNSS, vehicle motion sensors and road maps are integrated using an information fusion algorithm. The resulting map-matched information, together with traffic situation information, is provided to the human driver for assistance and guidance. An example of Level 0 automation is the emergency braking system.

Level 1—Driver Assistance

At this lowest level of automation, the vehicle features a single automated system for driver assistance, such as steering, acceleration or cruise control. Adaptive cruise control, which keeps the vehicle at a safe distance from the surrounding cars, qualifies as Level 1 automation. The human driver monitors and controls the other aspects of driving.

Level 1 automation and beyond use a multisensor platform that includes cameras, radar, LiDAR, GNSS, inertial measurement units (IMUs), inertial navigation systems (INS) and ultrasonic sensors. Ultrasonic sensors are widely available for parking, but they are of minor importance for autonomous driving. LiDAR systems are rarely used in series production today because of cost and availability. Camera and radar are prerequisites for all further levels of automation.

Level 2—Partial Driving Automation

Vehicles with Advanced Driver Assistance Systems (ADAS) can control both steering and accelerating/decelerating. At this level, a human driver sits in the driver’s seat and can take control of the car at any time. Examples of this level of automation include Tesla Autopilot and Cadillac (General Motors) Super Cruise systems.

Absolute localization is computed from GNSS, PPP or RTK, IMU and odometry. Perception sensors like cameras, radar, and/or LiDAR are used to obtain relative localization. Both absolute and relative localization outputs together with path planning are integrated for steering control, acceleration and brake control.

Level 3—Conditional Driving Automation

Level 3 vehicles have environmental detection capabilities and can make informed decisions, such as assisting with an emergency stop by decelerating and stopping the vehicle while alerting surrounding cars. Human override is still required at this level. The driver must remain alert and ready to take control if the system is unable to execute the task.

A feature such as traffic jam pilot is a good example of Level 3 automation. The system handles all acceleration, steering and braking while the human driver can sit back and relax. From Level 2 onward, real-time high-precision GNSS absolute positioning solutions are required to achieve lane determination with or without a local base station network. Sub-lane-level accuracy unlocks many autonomous driving features and enables planning beyond perception limits, thus improving system integrity and safety assurance [3].

In 2017, Audi announced the world’s first production Level 3 vehicle, the Audi A8, which features Traffic Jam Pilot [4]. However, the A8 is still classified as a Level 2 vehicle in the United States because the U.S. regulatory process shifted from federal guidance to state-dictated mandates.

The vehicle retains the same architecture that was compatible with Level 3 autonomy but lacks the redundant systems needed to completely take over driving duties. Mercedes-Benz has become the first automotive company in the world to meet the necessary requirements (set by UN-R157) for international approval of Level 3 automation [5]. The Mercedes-Benz Drive Pilot Level 3 autonomous driving feature will become available in Europe early this year. The road-legal certification in the U.S. has not been finalized yet.

Level 4—High Driving Automation

The key difference between Level 3 and Level 4 automation is that Level 4 vehicles can intervene themselves if there is a system failure. The vehicles do not require human interaction in most circumstances, although a human driver still has the option to manually override. Until legislation and infrastructure evolve, self-driving mode can only be used in limited areas, such as geofence-defined areas.
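A geofence check can be illustrated with a standard point-in-polygon test. The sketch below is only one possible approach, assuming the geofence is a simple polygon in a local planar coordinate frame (for example, meters east and north of a reference point); the function name `inside_geofence` and the sample coordinates are hypothetical.

```python
def inside_geofence(point, polygon):
    """Return True if `point` lies inside `polygon` (ray-casting test).

    `point` is an (x, y) tuple and `polygon` a list of (x, y) vertices,
    all in the same local planar frame (an assumption of this sketch).
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point
        # can be crossed by a ray cast toward +x.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical 4 km x 4 km service area, coordinates in kilometers.
service_area = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
self_driving_allowed = inside_geofence((2.0, 2.0), service_area)
```

A production system would work with geodetic coordinates, positioning uncertainty and a safety margin around the boundary, none of which are modeled here.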

High-accuracy maps and highly reliable localization are critical to achieving a Level 4 system. Localization failures usually trigger an emergency stop. Current Level 4 systems use LiDAR, cameras and radar for both perception and localization, primarily relying on LiDAR for localization. GNSS is not the primary location sensor because of availability and integrity challenges.

According to the German Transportation Ministry [6], autonomous vehicles and driverless buses are set to make their debut on German public roads after lawmakers approved a new law on autonomous driving in June 2021.

The law is intended to bring autonomous vehicles at SAE Level 4 into regular operation in early 2022. More and more companies are showing promise with Level 4 automation. Waymo has partnered with truck builder Daimler to develop a scalable autonomous truck platform that is intended to support SAE Level 4 [7].

Level 5–Full Driving Automation

Level 5 vehicles do not require human attention; the dynamic driving task is eliminated for the human. Level 5 cars will not have steering wheels or acceleration/braking pedals. They will be free from geofencing and will be able to go anywhere an experienced human driver can. Fully autonomous vehicles are still undergoing testing, with none available to the general public yet.

For a long time, the SAE standard of 5 levels of autonomous driving has been the norm. However, Germany’s Federal Highway Research Institute presented an alternative and suggested switching from 5 levels to 3 modes to simplify the discussion on autonomous vehicles [8]. They are:

• Assisted mode—The first mode combines SAE Level 1 and 2, where the human driver is supported by the vehicle but must remain alert and ready to intervene at all times.

• Automated mode—The second mode incorporates SAE Level 3. The human driver temporarily hands over the steering wheel to the software/computer and can perform other activities for a more extended period.

• Autonomous mode—The third mode combines the SAE Level 4 and 5. The vehicle has the complete driving authority.
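The relationship between the SAE levels and the three proposed modes can be written down as a small lookup. This is a minimal sketch based only on the grouping described above; the names `Mode` and `mode_for_sae_level` are hypothetical, and returning `None` for Level 0 is an assumption, since the three modes as listed here start at Level 1.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    ASSISTED = "assisted"      # SAE Levels 1 and 2
    AUTOMATED = "automated"    # SAE Level 3
    AUTONOMOUS = "autonomous"  # SAE Levels 4 and 5


def mode_for_sae_level(level: int) -> Optional[Mode]:
    """Map an SAE level (0 to 5) to one of the three proposed modes."""
    if level not in range(6):
        raise ValueError(f"SAE levels range from 0 to 5, got {level}")
    if level == 0:
        return None  # assumption: no driving automation, so no mode applies
    if level <= 2:
        return Mode.ASSISTED
    if level == 3:
        return Mode.AUTOMATED
    return Mode.AUTONOMOUS
```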

What are the challenges of autonomous vehicles?

Many people are still curious about how to design an autonomous or driverless vehicle system that can handle a vehicle as a human would in all possible conditions. An autonomous vehicle is a combination of sensors and actuators, sophisticated algorithms, and powerful processors to execute software. Hundreds of such sensors and actuators are situated in various parts of the vehicle, driven by a highly sophisticated system.

There are different categories of sensory systems in autonomous vehicles: 1) navigation and guidance sensors to determine where the vehicle is and how to get to a destination; 2) driving and safety sensors, such as cameras, to make sure the vehicle acts properly under all circumstances and follows the rules of the road; and 3) performance sensors to manage the vehicle’s internal systems, such as power control, overall consumption and thermal dissipation.

While autonomous vehicle systems may vary slightly from one to another, the core software generally includes localization, perception, planning and control. The perception system senses, understands and builds a full awareness of the environment and the objects around the vehicle using cameras, LiDAR and radar sensors. The planning software is responsible for path planning, risk evaluation, task management and path generation. Machine learning (ML) and deep learning (DL) techniques are widely used for localization and mapping, sensor fusion and scene comprehension, navigation and movement planning, evaluation of a driver’s state and recognition of a driver’s behavior patterns, and intelligence learning for perception and planning. Mapping software can be used to generate and update high-definition lane-level map data through collected sensor data and post-processing.

Sensor calibration serves as a foundation for an autonomous system and its sensors. It is a requisite step before implementation of sensor fusion, localization and mapping, and control. Sensor calibration determines each sensor’s position and orientation in real-world coordinates by comparing the relative positions of known features the sensors detect.
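One common way to recover a sensor's position and orientation from matched features is a least-squares rigid alignment (the Kabsch algorithm). The sketch below is illustrative only and is not taken from the article: it assumes at least three non-collinear features whose 3D coordinates are known both in the sensor frame and in the world frame, and the function name `estimate_extrinsics` is hypothetical.

```python
import numpy as np


def estimate_extrinsics(points_sensor, points_world):
    """Estimate rotation R and translation t with p_world ~= R @ p_sensor + t.

    Both inputs are (N, 3) arrays of the same features, row-matched.
    """
    P = np.asarray(points_sensor, dtype=float)
    Q = np.asarray(points_world, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)

    # 3x3 cross-covariance of the centered point sets.
    H = (P - p_mean).T @ (Q - q_mean)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection so that R is a proper rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t
```

In practice the matched features are noisy and may contain mismatches, so calibration pipelines typically wrap an estimator like this in outlier rejection and refine the result over many observations.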

Sensor fusion is an essential task that integrates information obtained from multiple sensors to detect outliers and reduce the uncertainty in each individual sensor’s data, thus enhancing accuracy, reliability and robustness. There are three levels of fusion approaches for both perception and localization systems: high-level/decision level, mid-level/feature level, and low-level/raw data level [9].
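The two goals named here, rejecting outliers and reducing uncertainty, can be shown with a very small example. The sketch below fuses several scalar measurements of the same quantity by inverse-variance weighting after a simple median-based gate. It is an illustration under stated assumptions (independent sensors, known variances, a 3-sigma gate), and the function name `fuse_measurements` is hypothetical.

```python
import statistics


def fuse_measurements(values, variances, gate=3.0):
    """Fuse scalar measurements of one quantity.

    A measurement is dropped as an outlier when it lies more than `gate`
    of its own standard deviations from the median of all measurements.
    The rest are combined by inverse-variance weighting.
    Returns (fused_value, fused_variance).
    """
    median = statistics.median(values)
    kept = [(v, var) for v, var in zip(values, variances)
            if abs(v - median) <= gate * var ** 0.5]
    if not kept:
        raise ValueError("all measurements were rejected as outliers")

    weights = [1.0 / var for _, var in kept]
    fused = sum(w * v for w, (v, _) in zip(weights, kept)) / sum(weights)
    fused_variance = 1.0 / sum(weights)
    return fused, fused_variance


# Three hypothetical range readings (meters); the third is a gross outlier.
distance, variance = fuse_measurements([10.0, 10.2, 25.0], [0.04, 0.04, 0.04])
```

The fused variance is smaller than that of any single retained sensor, which is the sense in which fusion reduces uncertainty.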

In decision-level fusion, each sensor carries out detection or tracking algorithms separately, and the results are subsequently combined into one global decision. Feature-level fusion extracts contextual descriptions or features from each sensor’s data and fuses them to produce a fused signal for further processing. In raw data-level fusion, sensor data are integrated at the abstraction layer for better quality and low latency.

Each fusion level has its strengths and weaknesses in terms of accuracy, complexity, computational load, communication bandwidth and fusion efficiency. Commonly used fusion algorithms are statistical methods, probabilistic methods such as the Kalman filter and particle filter, knowledge-based theory methods, and evidence reasoning methods. An environmental perception map is built from information about obstacles, the road, the vehicle, the environment and the driver. Localization is commonly performed using GNSS, IMU, cameras and LiDAR.

Emerging studies propose different approaches that avoid the need for localization and mapping stages and instead sense the environment to produce end-to-end driving decisions [10]. The three localization techniques [9] used in autonomous driving are:
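As an example of the probabilistic methods mentioned above, the following is a minimal sketch of one Kalman filter cycle that fuses an IMU acceleration reading with a GNSS position fix. It assumes one-dimensional motion along the road, a state of position and velocity, and known noise variances; the function name `kalman_step` and all parameter names are hypothetical.

```python
import numpy as np


def kalman_step(x, P, accel, z_gnss, dt, accel_var, gnss_var):
    """One predict/update cycle for the state x = [position, velocity].

    accel   : IMU acceleration over the interval (m/s^2)
    z_gnss  : GNSS position measurement (m)
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])   # constant-velocity transition
    B = np.array([0.5 * dt ** 2, dt])       # effect of acceleration
    Q = accel_var * np.outer(B, B)          # process noise from IMU noise
    H = np.array([[1.0, 0.0]])              # GNSS observes position only

    # Predict with the IMU input.
    x = F @ x + B * accel
    P = F @ P @ F.T + Q

    # Update with the GNSS position.
    innovation = z_gnss - H @ x
    S = H @ P @ H.T + gnss_var
    K = P @ H.T / S
    x = x + K @ innovation
    P = (np.eye(2) - K @ H) @ P
    return x, P


# Hypothetical run: a stationary vehicle at 5 m, uncertain initial state.
x, P = np.zeros(2), np.diag([100.0, 100.0])
for _ in range(20):
    x, P = kalman_step(x, P, accel=0.0, z_gnss=5.0, dt=0.1,
                       accel_var=0.1, gnss_var=1.0)
```

Real GNSS/IMU integration estimates three-dimensional position, velocity, attitude and sensor biases, but the predict/update structure is the same.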

• GNSS/IMU-based localization together with DGPS and RTK to ensure the continuity of GNSS signals,

• visual-based localization that includes simultaneous localization and mapping (SLAM) and visual odometry, and

• map-matching-based localization that uses “a priori maps.”

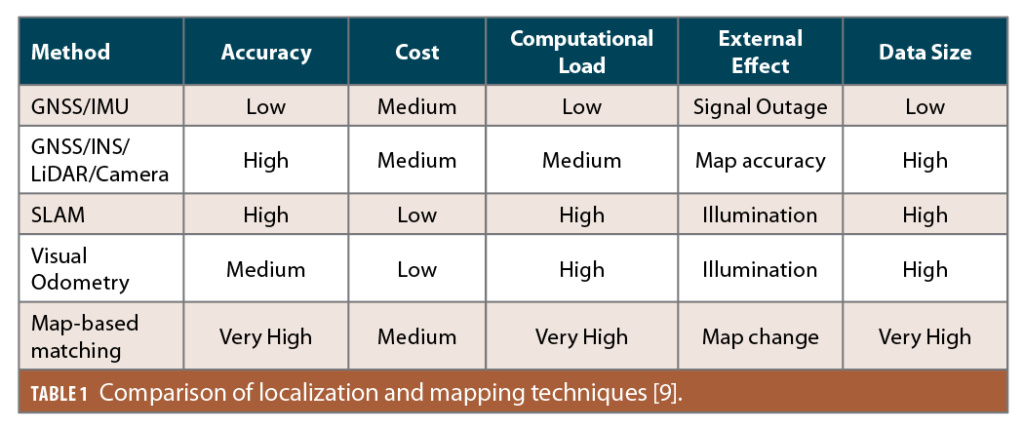

A comparison of the different localization techniques in terms of accuracy, cost, computational load, source of external effects and data storage size is shown in Table 1. Studies that include GNSS/IMU as part of the localization system in autonomous driving mostly use probabilistic methods, which represent decision-level to feature-level sensor fusion.
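In its simplest geometric form, map-matching-based localization snaps a position estimate onto the nearest road segment of an a priori map. The sketch below shows only that geometric step, assuming a planar local frame and a map given as named straight segments; the names `project_onto_segment` and `map_match` and the sample map are hypothetical.

```python
import math


def project_onto_segment(p, a, b):
    """Return the point on segment a-b that is closest to p."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return (ax, ay)  # degenerate segment
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))  # clamp to the segment ends
    return (ax + t * dx, ay + t * dy)


def map_match(p, segments):
    """Return (segment_id, matched_point, distance) for the nearest segment."""
    best = None
    for segment_id, (a, b) in segments.items():
        q = project_onto_segment(p, a, b)
        d = math.dist(p, q)
        if best is None or d < best[2]:
            best = (segment_id, q, d)
    return best


# Hypothetical map with two parallel roads, coordinates in meters.
road_map = {"main": ((0.0, 0.0), (10.0, 0.0)), "side": ((0.0, 5.0), (10.0, 5.0))}
segment_id, matched, offset = map_match((3.0, 1.0), road_map)
```

Practical map matching also uses heading, speed and the history of previous matches, so a nearest-segment rule alone should be read as a starting point rather than a complete method.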

The following five major challenges of self-driving cars require continuing research and development effort.

• Sensors. Sensors in autonomous vehicles map the environment and feed data back to the car’s control system to help make decisions about where to steer or when to brake. A fully autonomous vehicle needs accurate sensors to detect objects, distance, speed and so on under all conditions and environments. Bad weather, heavy traffic and unclear road signs can negatively impact the sensing accuracy of the LiDAR and the camera.

Another potential threat is radar interference. On the road, a car’s radar continuously emits radio-frequency waves, which are reflected by surrounding cars and other objects near the road. When hundreds of vehicles on the road use this technology, it is challenging for a car to distinguish between its own reflected signal and a signal reflected or transmitted from another vehicle. Given the limited radio frequencies available for radar, the spectrum is unlikely to be sufficient for all the autonomous vehicles manufactured. Although GNSS offers worldwide coverage, all-weather operation and absolute positions without map or road-marking information, its overall accuracy and availability have been a concern for fully autonomous systems.

• Machine learning. Most autonomous vehicles use AI or ML to process the data from their sensors to better classify objects, detect distance and movement, and help decide on the next actions. ML optimizes and integrates the different sensor outputs into a more complete picture. Machines are expected to perform detection and classification more efficiently than a human driver can. As of now, however, it is not widely accepted that machine learning algorithms are reliable under all conditions [11]. The industry also lacks agreement on how machine learning should be trained, tested or validated.

• The open road. An autonomous vehicle continues to learn once it is on the road. It detects objects that it has not come across in its training and updates its software. The industry needs a mechanism or an agreement to ensure that any new learning is safe.

• Regulation. Sufficient standards and regulations for a fully autonomous system do not exist. Current standards for the safety of existing vehicles assume the presence of a human driver to take over in an emergency. For autonomous vehicles, there are emerging regulations for particular functions, such as automated lane keeping systems. Without recognized regulations and standards, it’s risky to allow autonomous cars to drive on the open road.

• Social acceptability. Social acceptance is not just an issue for those willing to buy an autonomous car, but also for others sharing the road with them. The public is an important stakeholder and should be involved in decisions about the introduction and adoption of autonomous vehicles.

What are the emerging regulations for autonomous vehicles?

In the U.S., federal car-safety regulation is based on Federal Motor Vehicle Safety Standards (FMVSS) [12]. These regulations establish detailed performance requirements for every safety-related part of a car. Before a car can be introduced into the market, the manufacturer must certify that the vehicle is as safe as cars already on the road. Federal regulations do not say much about how companies develop and test cars before bringing them to market. The federal government is providing nonbinding guidance, an appropriate approach in this environment of uncertainty.

State regulations and legislation concerning autonomous vehicle testing on public roads vary widely. States such as California and New York are strict, requiring companies to apply for a license to test vehicles on the road. At the other extreme, no company needs a license to operate autonomous vehicles in Florida. As of 2021, 29 states plus D.C. have passed legislation, 10 states have executive orders issued by their governors, and nine states have laws pending or that failed during the voting process, with the remaining states taking no action at all [13].

The current regulatory landscape for autonomous driving is reasonably friendly to the development of the technology. States are experimenting with different levels of regulation. Competition among the states will allow auto manufacturers to locate to the state that best suits their experimental program. This competition and experimentation across states should help the best approach to regulation emerge over time.

Internationally, 60 countries have reached a milestone in mobility with the adoption of a United Nations regulation that will allow for the safe introduction of automated vehicles in certain traffic environments [14]. The UN regulation, which covers Level 3 automation, establishes strict requirements for Automated Lane Keeping Systems (ALKS). ALKS can be activated under certain conditions on roads where pedestrians and cyclists are prohibited and that are equipped with a physical separation dividing the traffic moving in opposite directions. The regulation limits the operating speed of ALKS to 60 km/h. It also obliges car manufacturers to introduce Driver Availability Recognition Systems to detect and check the driver’s presence, and to equip the vehicle with a Data Storage System for Automated Driving (DSSAD) to record when ALKS is activated.
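The activation conditions summarized in this paragraph can be expressed as a simple predicate. The sketch below encodes only the conditions as described here and is not a rendering of the regulation text; the `RoadContext` fields, the constant and the function name are hypothetical.

```python
from dataclasses import dataclass

ALKS_MAX_SPEED_KMH = 60.0  # speed limit stated in the paragraph above


@dataclass
class RoadContext:
    pedestrians_and_cyclists_prohibited: bool
    opposing_traffic_physically_separated: bool
    speed_kmh: float
    driver_available: bool  # as reported by a driver availability system


def alks_may_activate(ctx: RoadContext) -> bool:
    """Return True only if every condition listed above is satisfied."""
    return (
        ctx.pedestrians_and_cyclists_prohibited
        and ctx.opposing_traffic_physically_separated
        and ctx.speed_kmh <= ALKS_MAX_SPEED_KMH
        and ctx.driver_available
    )


# Hypothetical case: slow traffic on a divided motorway, driver present.
traffic_jam = RoadContext(True, True, 35.0, True)
allowed = alks_may_activate(traffic_jam)
```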

How are automated and autonomous vehicles being accepted by the public?

Fully autonomous vehicles have the potential to enhance safety by nearly eliminating the human-related factors and errors that affect driver performance, such as aging, disease, stress, fatigue, inexperience or drug abuse. However, there are several individual and social concerns with regard to autonomous vehicle deployment: high maintenance costs, a possible rise in fuel consumption and carbon dioxide emissions from increased travel demand, legal and ethical issues relating to the protection of users and pedestrians, privacy concerns and the potential for hacking, and loss of jobs for alternative transportation providers.

It is argued that the biggest barrier to widespread adoption of autonomous driving is psychological, not technical [11]. User acceptance of autonomous driving is essential for autonomous driving to become a realistic part of future transportation. The definition of user acceptance is not standardized as there are many different approaches to determine and model user willingness to accept autonomous vehicles.

Knowledge of public acceptance of autonomous driving is limited; more research is required to understand the psychological determinants of user acceptance. Influencing factors may include trust in the accuracy of autonomous technology, personal innovativeness, anxiety caused by relinquishing control of driving, privacy concerns related to individual location data, and the high cost of precise sensor systems for wireless networks, navigation systems, automated controls and system integration [12, 15, 16].

References

(1) ABI Research. “ABI Research forecasts 8 million vehicles to ship with SAE Level 3, 4 & 5 autonomous technology in 2025.” www.abiresearch.com.

(2) U.S. Department of Transportation. “Federal automated vehicle policy—Accelerating the next revolution in roadway safety.” www.transportation.gov.

(3) N. Joubert, T. Reid, and F. Noble, “A survey of developments in modern GNSS and its role in autonomous vehicles.” www.swiftnav.com. 2020.

(4) P. Ross, “The Audi A8: the world’s first production car to achieve Level 3 autonomy.” https://spectrum.ieee.org/. Jul. 2017.

(5) “Mercedes-Benz becomes world’s first to get Level 3 autonomous driving approval.” https://auto.hindustantimes.com/. Dec. 2021.

(6) J. Gesley, “Germany: Road traffic act amendment allows driverless vehicles on public roads.” www.loc.gov. Jul. 2021.

(7) “Daimler truck to build scalable truck platform for autonomous driving.” www.fleetowner.com. Dec. 2021.

(8) Ashurst. “How Germany is leading the way in preparing for driverless cars.” Aug. 2021.

(9) J. Fayyad et al., “Deep learning sensor fusion for autonomous vehicle perception and localization: A review.” Sensors. Jul. 2020.

(10) C. Chen et al., “DeepDriving: Learning affordance for direct perception in autonomous driving.” Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile. 7–13 December 2015.

(11) L. Hsu, “What are the roles of artificial intelligence and machine learning in GNSS positioning.” Inside GNSS/GNSS Solutions. Nov./Dec. 2020.

(12) National Highway Traffic Safety Administration. “Preliminary statement of policy concerning automated vehicles.” Feb. 2022.

(13) National Conference of State Legislatures. “Autonomous vehicles | self-driving vehicles enacted legislation.” www.ncsl.org. Feb. 2020.

(14) The United Nations Economic Commission for Europe. “Uniform provisions concerning the approval of vehicles with regard to automated lane keeping systems.” https://unece.org/. Mar. 2020.

(15) A. Shariff et al., “Psychological roadblocks to the adoption of self-driving vehicles.” Nature Human Behaviour. 2017.

(16) S. Nordhoff et al., “Acceptance of driverless vehicles: Results from a large cross-national questionnaire study.” Journal of Advanced Transportation. 2018.