Beyond the mapless versus HD maps debate.

In the automotive industry, the conversation around mapping often swings between two extremes. On one side is the vision of “mapless” ADAS and autonomy, where vehicles perceive the road like humans do—relying on cameras and onboard intelligence, without depending on pre-built maps. On the other is the legacy of HD maps: LiDAR-heavy, survey-fleet-built, and GPU-processed datasets that promise lane-level precision but come with massive cost, complexity and scalability challenges.

The reality is more nuanced. No vehicle can safely operate without contextual knowledge of both its location and its environment. A truly “mapless” modern vehicle is a paradox: Perception-only systems struggle in poor visibility, featureless environments, complex intersections, or when anticipating what lies beyond the sensor horizon. And in practice, map data is always present, at minimum, for routing and navigation. At the other extreme, HD maps are too heavy and expensive to build and maintain at global scale.

The future lies in between. Vehicles need augmented SD maps: lightweight, continuously refreshed, and anchored to a global reference frame. By combining perception sensors with precise GNSS, automakers, fleets and dashcam companies can build maps that are streamlined, scalable and cost-effective without the burden of HD pipelines or the blind spots of perception-only approaches. And for fleets, where the American Transportation Research Institute (ATRI) reports non-fuel costs reaching a record $1.779 per mile in 2024, efficiency and scalability are no longer optional: They are existential.

Ground Truth at Global Scale

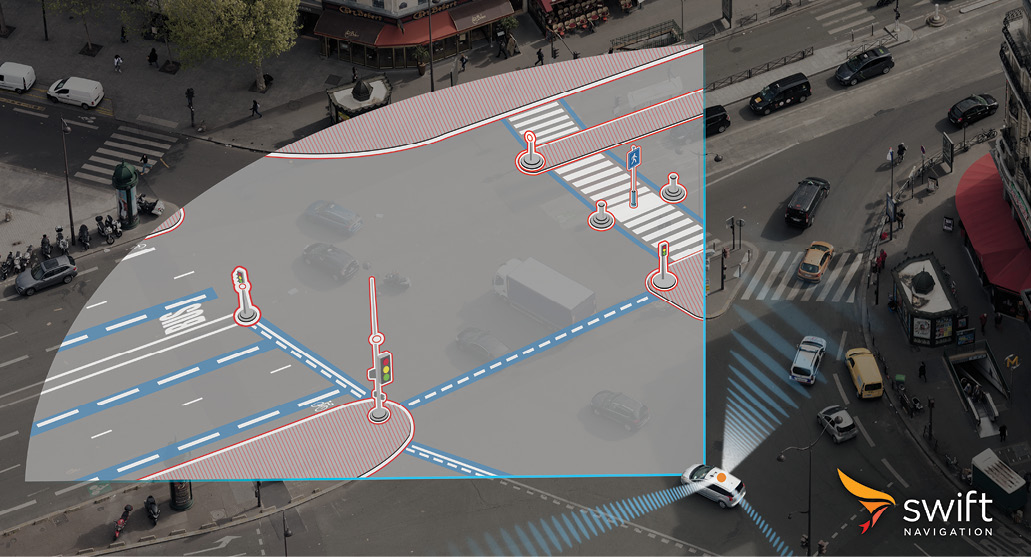

A forward-facing camera can capture features like lane markings, poles, traffic lights and barriers. But without absolute positioning, those detections exist only in a local frame—useful to the vehicle that saw them, but difficult to merge across vehicles or over time.

Precise GNSS provides the missing global anchor.

• Centimeter-level accuracy ensures each observed feature is placed in a consistent global reference frame.

• Drift-free positioning enables features to line up cleanly across days, vehicles and geographies.

• Collaborative mapping becomes straightforward, because all participants share the same reference frame.
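
As a rough illustration of what "placing a feature in a global reference frame" involves, the sketch below takes a detection expressed as offsets from the vehicle and converts it to latitude and longitude using the vehicle's GNSS position and heading. The function name, the argument layout, and the spherical small-offset approximation are assumptions made for this example; a production system would typically use a full geodetic library and account for camera calibration and antenna lever arms.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation (assumption)


def georeference(lat_deg, lon_deg, heading_deg, forward_m, right_m):
    """Convert a vehicle-frame detection into latitude/longitude.

    heading_deg is measured clockwise from true north. forward_m and right_m
    are the detection's offsets from the GNSS antenna (hypothetical inputs
    that would come from the camera pipeline).
    """
    h = math.radians(heading_deg)
    # Rotate vehicle-frame offsets into local north/east offsets.
    north_m = forward_m * math.cos(h) - right_m * math.sin(h)
    east_m = forward_m * math.sin(h) + right_m * math.cos(h)
    # Small-offset conversion from metres to degrees.
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat_deg + dlat, lon_deg + dlon


# A sign seen 12 m ahead and 3 m to the right of a vehicle heading due east.
sign_lat, sign_lon = georeference(37.7749, -122.4194, 90.0, 12.0, 3.0)
```

Because the output is expressed in global coordinates, two vehicles that see the same sign on different days produce positions that can be compared directly, with no per-vehicle alignment step.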

Unlike purely perception-based SLAM approaches, which drift over time and require complex offline stitching, precise GNSS allows every vehicle to contribute globally consistent map updates in real time. For OEMs and Tier 1 suppliers, this creates a foundation for safer, lane-level ADAS and autonomy. For fleets and dashcam networks, it provides the spatial intelligence needed to optimize operations, cut costs, and scale mapping without specialized vehicles.

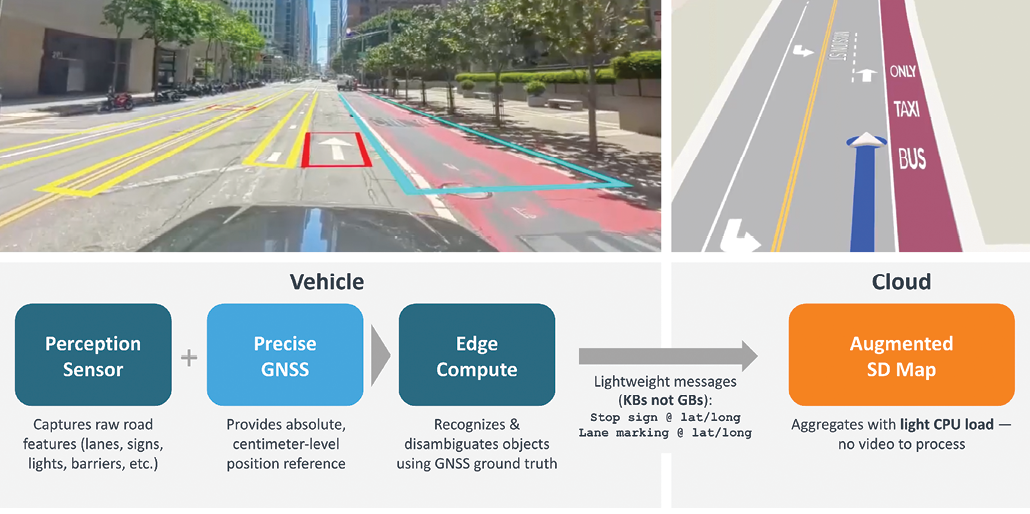

From Raw Video to Lightweight Objects at the Edge

Traditional mapping pipelines depend on massive sensor data. LiDAR scans or raw camera footage are streamed to the cloud, where feature extraction and map stitching occur. This creates two bottlenecks: gigabytes of upload bandwidth per vehicle per hour, and GPU-heavy central compute clusters.

With precise GNSS, the workflow shifts. Vehicles no longer need to send raw video. Instead, they can detect and disambiguate objects locally at the edge, then transmit compact geo-referenced messages upstream.

This change is transformative:

• Bandwidth savings: kilobytes instead of gigabytes.

• Cloud efficiency: lightweight aggregation instead of heavy GPU vision pipelines.

• Near real-time updates: changes flow quickly into the map with only a few passes required and without weeks of offline processing.
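
To make the size difference concrete, here is a minimal sketch of what a compact geo-referenced message could look like. The wire layout, field names and scaling are hypothetical and chosen only for illustration; they do not describe any particular product's format. Coordinates are stored as integers in units of 1e-7 degrees, which corresponds to roughly a centimeter of latitude.

```python
import struct

# Hypothetical layout (assumption): class id (uint8), latitude and longitude
# (int32, units of 1e-7 degrees), Unix time in seconds (uint32),
# detection confidence (uint8, 0-255). "<" means little-endian, no padding.
MESSAGE_FORMAT = "<BiiIB"
DEGREE_SCALE = 10_000_000


def encode_detection(class_id, lat_deg, lon_deg, unix_s, confidence):
    """Pack one detection into a fixed-size binary message."""
    return struct.pack(
        MESSAGE_FORMAT,
        class_id,
        round(lat_deg * DEGREE_SCALE),
        round(lon_deg * DEGREE_SCALE),
        unix_s,
        round(confidence * 255),
    )


def decode_detection(payload):
    """Unpack a message produced by encode_detection."""
    class_id, lat_i, lon_i, unix_s, conf = struct.unpack(MESSAGE_FORMAT, payload)
    return class_id, lat_i / DEGREE_SCALE, lon_i / DEGREE_SCALE, unix_s, conf / 255


message = encode_detection(3, 37.7749295, -122.4194155, 1_700_000_000, 0.9)
message_size = len(message)  # 14 bytes per detection in this layout
```

In this layout, a thousand detections occupy 14,000 bytes before any transport overhead, which is the scale of difference the bullets above describe when compared with streaming raw video.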

For fleets, this efficiency translates directly into operational savings. Compact map outputs integrate seamlessly with existing telematics workflows, eliminate the need for specialized mapping hardware, and allow operators to own more of the stack rather than paying escalating licensing fees.

Disambiguation Made Possible

One of the hardest challenges in map maintenance is deciding whether a detected object is new, removed or just jittered. With meter-level positioning, a sign might appear meters apart between passes, making reliable disambiguation impossible.

Precise GNSS removes the ambiguity. With centimeter-level stability:

• A missing feature at known coordinates indicates removal.

• A new feature at an unoccupied location indicates addition.

• Repeated observations within centimeters indicate persistence of the same object.
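
The three rules above can be sketched as a simple matching step. This is an illustrative simplification, not a description of any specific implementation: the function name, the 0.3 m match radius, and the use of a local metric grid are assumptions, and it presumes every known feature passed in was actually within the camera's view during the pass.

```python
import math


def disambiguate(known, observed, match_radius_m=0.3):
    """Classify one pass of observations against known map features.

    known: dict mapping feature id -> (x, y) in metres on a local grid.
    observed: list of (x, y) detections from the current pass.
    Returns (persisted ids, added positions, removed ids).
    """
    persisted, added = [], []
    unmatched = dict(known)  # known features not yet re-observed this pass
    for obs in observed:
        best_id, best_dist = None, match_radius_m
        for feature_id, position in unmatched.items():
            dist = math.dist(obs, position)
            if dist <= best_dist:
                best_id, best_dist = feature_id, dist
        if best_id is None:
            added.append(obs)  # nothing known nearby: candidate new feature
        else:
            persisted.append(best_id)  # same object seen again
            del unmatched[best_id]
    removed = sorted(unmatched)  # known features that were not seen
    return persisted, added, removed


known_features = {"sign_a": (0.0, 0.0), "sign_b": (10.0, 0.0)}
detections = [(0.05, -0.02), (25.0, 3.0)]
persisted, added, removed = disambiguate(known_features, detections)
```

The match radius is what positioning accuracy buys. With meter-level error the radius would have to grow to several meters, at which point neighboring signs start to match each other and the three categories blur together.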

This accuracy reduces false positives and redundant annotations. More importantly, it allows real-time edge disambiguation, shrinking the role of the backend to simple aggregation. For fleets, that means fewer wasted updates, fewer mapping errors, and more actionable intelligence, like knowing when a new construction zone or a missing stop sign actually changes the road environment.

Resilient Across Environments

Centimeter-level positioning is only useful for mapping if it holds up outside open-sky conditions. A precise GNSS solution is built for this:

• Corrections mitigate multipath in urban canyons and foliage.

• IMU bridging keeps continuity in tunnels and brief outages until GNSS resumes.

• Heading and position remain accurate in complex topography, ensuring proper lane association.
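
The idea behind IMU bridging can be illustrated with plain dead reckoning: starting from the last good GNSS fix, position is propagated from speed and yaw rate until satellite positioning returns. The sketch below is a deliberately simplified 2D version under stated assumptions. The function name and sample format are hypothetical, and real systems typically fuse these inputs in a filter that also estimates sensor biases.

```python
import math


def bridge_outage(east_m, north_m, heading_rad, samples):
    """Propagate position through a GNSS outage by dead reckoning.

    east_m, north_m, heading_rad: state at the last good GNSS fix, with
    heading measured clockwise from north.
    samples: iterable of (dt_s, speed_mps, yaw_rate_rps) tuples, assumed to
    come from wheel odometry and a gyro (hypothetical inputs).
    """
    for dt_s, speed_mps, yaw_rate_rps in samples:
        heading_rad += yaw_rate_rps * dt_s
        east_m += speed_mps * dt_s * math.sin(heading_rad)
        north_m += speed_mps * dt_s * math.cos(heading_rad)
    return east_m, north_m, heading_rad


# One second in a tunnel at 20 m/s, heading north, sampled at 10 Hz.
tunnel_samples = [(0.1, 20.0, 0.0)] * 10
east, north, heading = bridge_outage(0.0, 0.0, 0.0, tunnel_samples)
```

Errors in this kind of propagation accumulate with time, which is why bridging is suited to tunnels and brief outages and why the estimate is re-anchored as soon as GNSS resumes.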

This resilience ensures every frame of camera data is anchored precisely, enabling lane-level association and the detection of small but critical features like crosswalks or speed limit signs. For ADAS, this reliability underpins safety; for fleets, it ensures maps remain trustworthy across the full range of environments they encounter daily.

From Mapping Fleets to Fleets-at-Scale

Legacy map providers relied on specialized survey vehicles outfitted with LiDAR, high-grade GNSS, and large storage systems. The cost per mile was immense, and refresh cycles spanned months or even years. That model cannot scale.

With cameras and precise GNSS, any vehicle can become a mapping vehicle. Delivery vans, ride-hailing cars, long-haul trucks, or consumer vehicles can all contribute lightweight, high-quality map updates, and maps can be refreshed daily or even hourly instead of annually.

What makes this shift compelling is not only the technical simplicity but the economics. Traditional LiDAR and video pipelines generate gigabytes of data per vehicle per hour and require GPU-heavy reconstruction in the cloud. GNSS-anchored detections reduce the payload to kilobytes and can be aggregated with light CPU, enabling national or continental coverage without ballooning infrastructure costs.
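
A minimal sketch of what "light CPU" aggregation might look like: because every detection already arrives in the same global frame, the backend can keep a running average per feature and promote it to the map after a few passes. The class name, the local metric coordinates, and the three-pass threshold are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class FeatureEstimate:
    """Running position estimate for one map feature (hypothetical structure)."""

    x: float = 0.0
    y: float = 0.0
    passes: int = 0

    def update(self, obs_x, obs_y):
        # Incremental mean: no observation history needs to be stored.
        self.passes += 1
        self.x += (obs_x - self.x) / self.passes
        self.y += (obs_y - self.y) / self.passes

    def confirmed(self, min_passes=3):
        # min_passes is an assumed threshold, not a published figure.
        return self.passes >= min_passes


estimate = FeatureEstimate()
for obs in [(1.0, 2.0), (1.2, 2.0), (0.8, 2.0)]:
    estimate.update(*obs)
```

Each update is a handful of arithmetic operations per detection, with no image decoding or 3D reconstruction involved, which is where the compute savings over video pipelines would come from.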

For fleets, the payoff is immediate. Augmented SD maps help avoid repairs that averaged $0.202 per mile in 2023, cut fuel expenses that made up about 21% of operating costs in 2024, and improve last-mile delivery performance. For OEMs and Tier 1s, the same approach offers a cost-effective way to extend ADAS and autonomy features across entire portfolios without depending on LiDAR-heavy HD maps.

Powered By Skylark™

This model is now being tested across several correction-network architectures, including Swift’s Skylark, which demonstrates what continental-scale mapping looks like when corrections become a utility layer rather than proprietary infrastructure.

Skylark delivers real-time, centimeter-level accuracy via RTK and PPP-RTK, with continental-scale coverage supporting millions of vehicles simultaneously. It is already trusted by more than 20 leading automotive OEMs and Tier 1 suppliers, proving its readiness for safety-critical ADAS and autonomy.

The breakthrough lies in both scalability and openness. Skylark is built to scale globally without localized base-station buildouts, and it is receiver-agnostic, working across a wide range of automotive and fleet GNSS chipsets. This ensures flexibility for OEMs, Tier 1s and fleets, eliminating lock-in to proprietary hardware or region-specific infrastructure.

With Skylark, precise GNSS is no longer a tool for specialists but a platform for industries—enabling cost-effective, high-integrity mapping and positioning at global scale.

Augmented SD Maps Without the Overhead of HD

The automotive industry needs neither “mapless” systems nor costly, LiDAR-heavy HD maps. What it needs are augmented SD maps: lightweight, continuously refreshed, and anchored in a global reference frame.

Precise GNSS makes this possible. By enabling vehicles to capture and disambiguate map features at the edge, it reduces bandwidth, shrinks backend compute, and leverages fleets already on the road.

For OEMs, this approach offers a scalable path to deploy ADAS and autonomy across entire portfolios. For fleet operators, it means optimizing operations with up-to-date maps of the environments they traverse daily. For dashcam innovators, it unlocks global-scale, community-driven mapping.

The future of mapping is not about massive data warehouses or specialized survey vehicles. It’s about augmented SD maps, built efficiently and scalably, from any vehicle on the road, powered by precise GNSS. And as AI vision systems advance, these same building blocks will enable digital twins of road networks: live, constantly refreshed replicas that anticipate traffic patterns, construction and hazards before they impact drivers. For both OEMs and fleets, this represents the ultimate extension of precise GNSS mapping: moving from reactive updates to predictive intelligence.

To learn more about Skylark™, visit: https://www.swiftnav.com/get-started