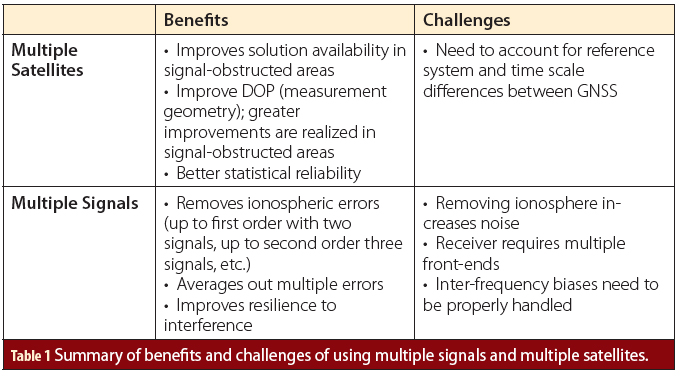

The industry is innovating in GNSS, IMU and HD mapping technology, but more work is needed to develop higher-performance, lower-cost applications that enable instant, extremely accurate positioning at the centimeter level or better for lane-level navigation.

Advanced driver assistance systems (ADAS) and higher-level autonomous vehicle (AV) technology will require a fusion of data from an increasingly sophisticated set of perception sensors as well as absolute position information for safe operation. While improvements to perception sensors such as LiDAR, camera and radar have received the lion’s share of the recent media focus, technology for more precise positioning from GNSS, inertial measurement units (IMUs) and high-definition (HD) map modules is a crucial element in enabling better and higher levels of autonomy.

Analyst firm Strategy Analytics expects demand for automotive-grade location services to increase with the penetration of embedded navigation in mass market vehicles, growth in the ownership of electrified vehicles, mandates for ADAS including upcoming European Intelligent Speed Assistance regulations, and the emergence of automated driving capabilities.

While many vehicles already depend on GNSS positioning for navigation, functionality can be compromised when the satellite signal is unclear. In urban canyons where tall buildings obscure the signal, for example, it can be inaccurate or slow to reach the vehicle. Other areas might not have much reception at all or can be affected by factors such as bad weather.

As advanced driver assistance systems continue to evolve, these positioning challenges must be overcome. Eventually, for even higher levels of automated driving systems to safely navigate their way on highways and around obstacles, they will need instant, extremely accurate positioning—at the centimeter level or better for lane-level navigation.

Setting the GNSS Standard

Much of the positioning industry’s efforts are aimed at improving the accuracy of a standard GNSS signal. A margin of error of up to 25 ft (762 cm) is not suitable for vehicles that require more precise absolute information to maintain lane-level positioning.
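To put that margin of error in perspective, the short Python sketch below compares it with the room a vehicle has inside its lane. The 12 ft lane width and 1.9 m vehicle width are illustrative assumptions, not figures from any of the companies mentioned here.

```python
FT_TO_M = 0.3048  # exact length of the international foot in meters


def lateral_margin_m(lane_width_m: float, vehicle_width_m: float) -> float:
    """Sideways drift from lane center allowed before a side of the vehicle reaches a lane line."""
    return (lane_width_m - vehicle_width_m) / 2.0


gnss_error_m = 25 * FT_TO_M   # the standard-GNSS margin of error quoted above
lane_width_m = 12 * FT_TO_M   # ASSUMPTION: a 12 ft highway lane
vehicle_width_m = 1.9         # ASSUMPTION: a typical passenger-car width

margin_m = lateral_margin_m(lane_width_m, vehicle_width_m)

print(f"Standard GNSS error: {gnss_error_m:.2f} m")
print(f"In-lane margin:      {margin_m:.2f} m")
print(f"Error exceeds margin by a factor of {gnss_error_m / margin_m:.1f}")
```

Under these assumptions the vehicle has less than 1 m of slack on either side, so a position fix that can be off by several meters cannot say which lane the vehicle is in, let alone where it sits within that lane.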

A prime example of this effort to achieve better precision is Trimble’s pioneering work with General Motors. In 2017, the two companies began collaborating on a reliable way to maintain in-lane positioning for GM’s Super Cruise, the world’s first true hands-free driver assistance system. The system relies on Trimble RTX, which is said to be the first precise-positioning correction service to log significant miles in a commercial autonomous driving system. The collaboration reached a significant milestone in October, marking 5 years and more than 34 million miles driven with Super Cruise engaged on GM vehicles.

The ADAS application has used Trimble RTX (Real-Time eXtended) technology to deliver high-accuracy GNSS corrections since its debut on the 2018 Cadillac CT6. The technology, capable of accuracy better than 3.8 cm, enables a vehicle to maintain its lane position in a variety of environments including inclement weather such as rain, snow and fog. Such conditions often challenge other sensors in a vehicle’s ADAS/AV technology stack.

“Trimble RTX has been in commercial use for more than 10 years and in 2018 was the first precise point positioning correction service to log miles in a commercial autonomous driving system,” said Patricia Boothe, Trimble’s senior vice president of autonomy.

Trimble’s RTX technology removes errors in GNSS satellite data broadcasts to improve location accuracy on roadways.

“Super Cruise is a life-changing technology, allowing customers to experience hands-free driving on compatible, mapped roads nationwide,” said Mario Maiorana, GM chief engineer for Super Cruise.

Inertial Measurement Unit Innovations

Another key sensing component for automated driving is the IMU, which measures an object’s force, angular rate and, in some units, orientation using a combination of accelerometers, gyroscopes and, optionally, magnetometers. It plays an essential role in the sensor fusion process by adding an extra level of redundancy.

A notable example of how this technology can benefit automated driving is the recent collaboration of Panasonic Industry and Finland-based Sensible 4 Oy on a new IMU. The partners established a joint test project leveraging Sensible 4’s autonomous driving software and Panasonic Industry’s IMU.

“The IMU is employed to rectify the point cloud and thus impacts the accuracy of LiDAR data,” Sensible 4 Marketing Director Fredrik Forssell said. “Our software is expected to be ideally flexible and reliable to next-gen sensor hardware.”

The partners are conducting in-depth testing with the six-axis sensor from Panasonic Industry. Specialists from both companies are involved in an extensive evaluation period with plenty of tests and analyses. According to Munich-based Panasonic, an average lateral error of 7.9 cm in testing matched Sensible 4 requirements for Level 4 automated driving.

“This project is a special one to all of us,” said Ryosuke Toda, who’s with product marketing at Panasonic Industry. “We are deeply interested in sending out our new sensor to this freezing baptism by fire to Finland and learn more on its readiness to contribute to that new level of mobility that we all are eagerly waiting for.”

Sensible 4 believes it has solved a major obstacle in autonomous driving—changing weather. Its technology combines software and information from several sensors, enabling vehicles to operate in weather conditions including snow and fog. In August, the company released its first autonomous driving software platform called DAWN.

“The ability to conquer new ground stems from DAWN’s features,” Sensible 4 CEO Harri Santamala said. “It is capable of operating in all weather, in darkness, without the need for lane markings, and in the presence of ever-changing environmental obstacles and surroundings.”

IMUs for the Mass Market

Tewksbury, Mass.-based ACEINNA is looking to take precise positioning to the next level with MEMS-based, open-source inertial sensing systems that offer higher accuracy at a lower cost, enabling easy-to-use, centimeter-accurate navigation systems. Its sensor product family is based on anisotropic magnetoresistive (AMR) technology that enables industry-leading accuracy, bandwidth and step response in a cost-effective single-chip form factor.

At the 2022 CES in Las Vegas, the company announced its INS401 high-performance inertial navigation system (INS) with an RTK-enabled dual-frequency GNSS receiver, triple-redundant inertial sensors, and positioning engine.

“The INS401 is our next step forward, delivering complex INS/RTK technology to mass markets with turnkey products,” said Wade Appelman, president and COO at ACEINNA. “Highly accurate INS solutions like these usually run 10 grand or more; we have sliced that to under $500.”

The new offering is designed for use in Level 2+ and higher ADAS and other high-volume applications. The INS401 provides centimeter-level accuracy, enhanced reliability and optimal performance during GNSS outages. The dead reckoning solution delivers strong performance in GNSS-challenged urban environments.
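Dead reckoning means propagating the last known position forward using only inertial measurements until GNSS returns. The Python sketch below shows the basic idea in two dimensions. It is a simplified illustration, not ACEINNA’s algorithm: the function name, the flat 2D motion model and the sample values are all assumptions made for this example.

```python
import math


def dead_reckon(x, y, heading, speed, samples, dt):
    """Propagate a 2D pose from IMU samples alone (hypothetical helper).

    samples: iterable of (forward_accel_mps2, yaw_rate_radps) pairs.
    dt: time between samples in seconds.
    """
    for accel, yaw_rate in samples:
        speed += accel * dt                    # integrate acceleration into speed
        heading += yaw_rate * dt               # integrate yaw rate into heading
        x += speed * math.cos(heading) * dt    # advance position along the heading
        y += speed * math.sin(heading) * dt
    return x, y, heading, speed


# Usage: a 10 s GNSS outage sampled at 100 Hz. The vehicle is actually
# stationary, but the accelerometer carries a small uncorrected bias
# (ASSUMED value: 0.05 m/s^2).
dt = 0.01
biased_samples = [(0.05, 0.0)] * 1000
x, y, heading, speed = dead_reckon(0.0, 0.0, 0.0, 0.0, biased_samples, dt)
print(f"Position error after 10 s: {x:.2f} m")
```

Because the bias is integrated twice, the position error grows with the square of the outage duration and reaches about 2.5 m in this example. That growth is why sensor quality and periodic GNSS fixes both matter for an inertial navigation system.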

Photo courtesy of ACEINNA.

“We are now extending our proven solutions to the entire range of autonomous vehicle applications, from SAE Level 2 to Level 5, and bringing complex INS/RTK technology to mass markets with our turnkey products starting with the INS401,” ACEINNA Vice President of Marketing Teoman Ustun said.

The INS401 is specifically developed for automotive applications using automotive-qualified components and is certified to the ASIL-B level of ISO 26262. It is compact and turnkey with a rugged aluminum housing and an included “Integrity Engine” that is said to guarantee zero performance failure. The system pairs its triple-redundant IMU with 80-channel GNSS tracking and algorithms to enable position accuracy of just 2 cm at real-time kinematic (RTK) levels.
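One common way to exploit triple redundancy is median voting: take the middle of three readings so that a single faulty sensor cannot pull the result away. The Python sketch below illustrates that generic technique. It is not a description of ACEINNA’s Integrity Engine, and the function name and threshold are assumptions chosen for the example.

```python
from statistics import median


def vote(readings, max_spread):
    """Median-vote three redundant readings (hypothetical helper).

    Returns the median value and the indices of any sensors whose reading
    differs from the median by more than max_spread.
    """
    value = median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - value) > max_spread]
    return value, suspects


# Usage: three yaw-rate readings in rad/s, where the third sensor has failed.
# The 0.05 rad/s threshold is an ASSUMED value for illustration.
value, suspects = vote((0.10, 0.11, 5.0), max_spread=0.05)
print(f"voted value: {value}, suspect sensors: {suspects}")
```

The faulty reading is flagged while the voted value stays close to the two sensors that agree, which is the property that makes a three-sensor arrangement tolerant of a single failure.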

Photo courtesy of Sensible 4.

Mapping Out HD GNSS

The team at mapping company Here Technologies thinks it has the ultimate solution for positioning in urban canyons, in bad weather, and in areas that might not have much GNSS reception at all. Calling upon its experience in mapping, its HD GNSS Positioning offering combines the use of satellite GNSS signals with visual information from sensors and digital maps via an electronic horizon to help position vehicles accurately no matter the environment.

“From safer driving to smarter trip planning, having precise positioning will redefine how we move in the future and take vehicles to the next level,” Here Technologies Senior Product Manager Tatiana Vyunova said during a webinar on mastering precise vehicle positioning for autonomous driving applications.

With automotive and enterprise-grade receivers, Here Technologies’ solution can achieve accuracy to within 10 to 20 cm globally. It works with any GNSS receiver, requires no additional hardware, is easy to use, and can be combined with Here Lanes and Live Maps. If a vehicle is lost on a multi-lane highway or faced with an obstacle, the combined services can safely reroute it to its destination, getting information to the vehicle in less than a second.

Vehicles equipped with Level 1 and 2 automated driving systems can be guided into the correct lane. For Level 2+ systems, HD GNSS Positioning enables true hands-free driving, according to the company.

Fleets also can benefit from the HD GNSS solution. Key use cases for the more precise technology include off-street locations such as logistics centers, truck and van automated deliveries, and emergency response vehicles.

HD GNSS Positioning is just one of the latest innovations from Here Technologies, which has been recognized as the top-ranked location platform by industry analysts at Strategy Analytics. The analyst firm noted that Here HD Live Map is integrated into the Mercedes-Benz Drive Pilot system, the first commercially available SAE Level 3-capable automated driving system in the world.

Photo courtesy of Nvidia.

Putting It All Together with Map Simulation

One of the major silicon and AI companies also has waded into the localization space with a multimodal mapping engine aimed at accelerating deployment of Level 3 and Level 4 autonomy.

At its GTC 2022 event in March, Nvidia launched a mapping platform that combines ground-truth and fleet-sourced map engines to achieve accuracy and scale. A part of its Drive end-to-end AV development environment, it serves as a foundational dataset for labeling, training, validation and 3D environment reconstruction for simulation.

In his keynote, Nvidia Founder and CEO Jensen Huang introduced Nvidia Drive Map, which combines the accuracy of DeepMap survey mapping with the freshness and scale of AI-based crowdsourced mapping. The company expects Drive Map to provide survey-level ground-truth mapping coverage to 500,000 km of roadway in North America, Europe and Asia by 2024.

Drive Map contains multiple localization layers of data from camera, radar and LiDAR sensors so that an AI driver can localize to each layer of the map independently, providing the diversity and redundancy required for the highest levels of autonomy.

The camera localization layer consists of map attributes such as lane dividers, road markings, road boundaries, traffic lights, signs and poles.

The radar localization layer is an aggregate point cloud of radar returns, which helps in poor lighting and poor weather conditions. Radar localization is also useful in suburban areas where typical map attributes aren’t available, enabling the AI driver to localize based on surrounding objects that generate a radar return.

The LiDAR voxel layer provides the most precise and reliable representation of the environment. It builds a 3D representation of the world at 5 cm resolution, a level of detail impossible to achieve with cameras and radar.
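A voxel map divides space into small cubes and records which ones contain LiDAR returns. The Python sketch below shows how points could be binned into 5 cm voxels. It illustrates the general technique only and is not Nvidia’s implementation; the function names and the sample points are assumptions.

```python
import math

VOXEL_SIZE_M = 0.05  # the 5 cm resolution cited above


def voxel_index(point, voxel_size=VOXEL_SIZE_M):
    """Map an (x, y, z) point in meters to the integer index of its voxel."""
    return tuple(math.floor(c / voxel_size) for c in point)


def voxelize(points, voxel_size=VOXEL_SIZE_M):
    """Count LiDAR returns per occupied voxel (hypothetical helper)."""
    occupied = {}
    for p in points:
        key = voxel_index(p, voxel_size)
        occupied[key] = occupied.get(key, 0) + 1
    return occupied


# Usage: two returns fall in the same 5 cm cube, a third in the neighboring cube.
points = [(0.01, 0.01, 0.01), (0.04, 0.02, 0.03), (0.06, 0.0, 0.0)]
grid = voxelize(points)
print(grid)
```

Storing only occupied voxels in a dictionary keeps memory proportional to the surfaces actually observed rather than to the full volume of the mapped area.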

The AI-based crowdsource engine gathers map updates from millions of cars, constantly uploading new data to the cloud as vehicles drive. The data is then aggregated, loaded to Nvidia Omniverse cloud, and used to update the map, providing fresh real-world over-the-air map updates within hours.

Omniverse maintains an Earth-scale representation of the digital twin that is continuously updated and expanded by survey map vehicles and millions of passenger vehicles. Using automated content generation tools built on Omniverse, the detailed map is converted into a drivable simulation environment that can be used with Nvidia’s Drive Sim.

“Drive Map and Drive Sim, with AI breakthroughs by Nvidia research,” Huang said, “showcase the power of Omniverse digital twins to advance the development of autonomous vehicles.”