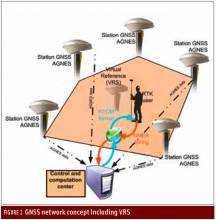

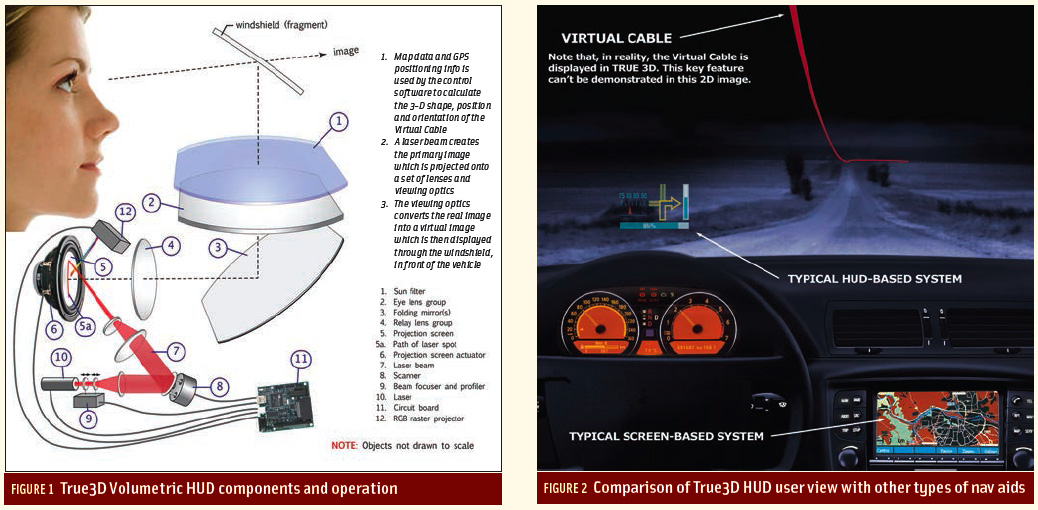

FIGURES 1 & 2: True3D Volumetric HUD components and operation (left), Comparison of True3D HUD user view with other types of nav aids (right)

Return to main article: "True3D HUD Wins Global SatNav Competition"

Many technologies are created before their best applications have even been imagined, a business phenomenon known as “technology push,” in contrast to “consumer pull.” The True3D Volumetric HUD technology took the opposite path: it began with the application.

Aware of the lifestyle-changing potential of GPS navigation, and at the same time frustrated with the clunky, distracting designs of screen-based navigation systems, Chris Grabowski and Tom Zamojdo challenged themselves to envision how navigation systems might look in the distant future, free of the limits of any technology existing today.

First came the idea of a giant mirror high in the sky, where the driver could see the reflection of roads ahead of the car. The correct route would be marked on the mirror, and the driver could follow it by correlating the mirror image with the real road.

As it turned out, the technology needed to implement this idea already existed in the form of the head-up display (HUD), used primarily in military aircraft. In 1998, Grabowski and Zamojdo applied for a U.S. patent on this reflective approach to presenting an image in a HUD (US6272431, issued in 2001), but they did not consider it the breakthrough they were looking for.

That came when the two men realized that the road network map shown in the mirror could be reduced to a single line, but only if such a line was displayed in three dimensions. The 3D depth cues would allow the driver to easily correlate the line with the road and turns ahead.

Unlike other navigation systems, this method eliminated the need to identify or count cross-streets and other landmarks along the route to know where to go next: just follow the line suspended over the road. Thus the trademarked idea of the Virtual Cable was born.
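To make the idea concrete, here is a minimal Python sketch of how a planned route might be turned into a Virtual Cable: the route, given as a 2D polyline of ground points in metres, is resampled at even spacing and lifted to a constant height above the road. The function name `virtual_cable`, the 5 m height, the 2 m spacing, and the flat-road assumption are all hypothetical illustrations, not details of the actual system.

```python
import math
from typing import List, Tuple

Point2 = Tuple[float, float]         # (east, north) on the road surface, metres
Point3 = Tuple[float, float, float]  # (east, north, height above road), metres


def virtual_cable(route: List[Point2], height_m: float = 5.0,
                  spacing_m: float = 2.0) -> List[Point3]:
    """Resample a route polyline at even spacing and lift it height_m above the road.

    Hypothetical illustration: assumes a flat road, so every sample gets the same height.
    """
    if spacing_m <= 0:
        raise ValueError("spacing_m must be positive")
    if not route:
        return []
    cable = [(route[0][0], route[0][1], height_m)]
    next_at = spacing_m  # distance along the current segment to the next sample
    for (x0, y0), (x1, y1) in zip(route, route[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        while next_at <= seg:
            t = next_at / seg  # fraction of the way along this segment
            cable.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0), height_m))
            next_at += spacing_m
        next_at -= seg  # carry the leftover distance into the next segment
    # Always end the cable exactly at the last route point.
    last = route[-1]
    if math.dist(cable[-1][:2], last) > 1e-9:
        cable.append((last[0], last[1], height_m))
    return cable
```

Resampling at a fixed spacing keeps the number of points to draw proportional to the length of the route ahead, not to the size of the space being displayed.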

But what if following the Virtual Cable had undesirable effects on the driver? Would it distract the driver's attention? Could it be annoying? Would it take a lot of attention to follow correctly?

Grabowski and Zamojdo realized that the new interface would require extensive testing. But how does one test an idea without the technology to implement it? As it turned out, there was a way.

“Where Little Cable Cars . . .”

Living in New Jersey at the time, Grabowski remembered seeing pictures of San Francisco with overhead trolley cables strung above the streets throughout the city. Instead of lines bringing power to the municipal streetcars and electric buses hooked up to them, however, the two engineers saw directional cues for drivers.

So Grabowski and Zamojdo planned field trials based on following the overhead trolley power lines through the hilly West Coast city, driving various routes for hours at a time with the cables as their guides.

And it all worked as they had hoped.

Following the trolley cables above the road turned out to be much easier than following cable car tracks on the road, because the cable's shape and direction could be seen considerably farther ahead. As an added benefit, the cable did not obstruct anything on the road. Seeing the cable in peripheral vision was sufficient (just like seeing road curbs out of the corner of one's eye), so a driver could keep eyes on the road and hands on the wheel at all times.

Years later, the hundreds of people who drove test cars with a working Virtual Cable display confirmed this all over again.

Grabowski and Zamojdo felt they had successfully answered their initial challenge of creating a non-distracting navigation guide, but this only raised another: could such an interface be built with any existing technology?

At first, the only solutions that came to mind involved technology costing more than $20,000 per unit, such as acousto-optic (AO) crystals for high-speed optical scanning, a non-starter in the automotive market. But a couple of years later, in 2003, a combination of persistence and luck paid off, and their novel True3D Volumetric HUD technology was born.

How Does It Work?

A True3D image is a single three-dimensional (“volumetric”) optical image, not a stereo pair of 2D (flat) images as in 3D TV or 3D movies. Because of this, no special eyewear or head-tracking cameras are needed to see the image in 3D.

The Making Virtual Solid-California (MVSC) HUD design relies on the same optical principles that let people see their own reflection in a mirror as a true-3D image, one that appears to lie behind the mirror. Such 3D images inherently present the viewer with all the depth cues of natural objects, including perspective, stereopsis, eye focus (accommodation), motion parallax, optic flow, convergence, and shading.
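Two of these cues, convergence and eye focus, can be quantified with simple geometry. The Python sketch below computes both for a point straight ahead; it assumes a typical interpupillary distance of 63 mm, and the function names are hypothetical. In a true-3D image the two values always describe the same distance, whereas a stereo pair shown on a screen fixes the focus demand at the screen distance while convergence follows the depicted depth.

```python
import math


def vergence_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight when fixating a point straight ahead.

    ipd_m is the interpupillary distance; 63 mm is an assumed typical adult value.
    """
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def accommodation_diopters(distance_m: float) -> float:
    """Focus demand on the eye in diopters (1/m); zero for a point at optical infinity."""
    return 1.0 / distance_m


if __name__ == "__main__":
    for d in (2.5, 20.0, 100.0, math.inf):
        print(f"{d:>6} m: vergence {vergence_deg(d):.3f} deg, "
              f"focus {accommodation_diopters(d):.3f} D")
```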

The true-3D HUD provides a generous field of view: more than 20 degrees horizontally and, depending on the dashboard geometry, as much or nearly as much vertically. This is ample for car navigation. The system also provides a generously sized head-motion box, or eyebox: the 3D region in which the driver's eyes must be positioned to see the HUD images. This lets drivers move their heads naturally without losing sight of the image.

Data from MEMS gyroscopes lets the system stabilize the image relative to the landscape, so the car's bounces do not disturb it. The display can place any part of a generated image at whatever distance it needs to appear at that moment, from a few yards to a mile.
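Landscape stabilization can be sketched in a few lines. In the minimal Python example below, a gyro's pitch rate is integrated into a pitch estimate, and a world-fixed point (for example, a spot on the road ahead) is drawn at its true elevation angle minus the car's current pitch, so the symbol stays put while the body bounces. The function names, the nose-up-positive sign convention, and the plain Euler integration are assumptions for illustration; a production system would also have to correct gyro drift, for instance by fusing accelerometer data.

```python
import math
from typing import Iterable


def integrate_pitch(pitch_rates_rad_s: Iterable[float], dt_s: float,
                    pitch_rad: float = 0.0) -> float:
    """Integrate gyro pitch-rate samples (rad/s, nose up positive) with simple Euler steps.

    Illustrative only: real gyros drift, so a real system would fuse other sensors.
    """
    for rate in pitch_rates_rad_s:
        pitch_rad += rate * dt_s
    return pitch_rad


def display_elevation_rad(height_m: float, distance_m: float, pitch_rad: float) -> float:
    """Elevation angle, relative to the car's forward axis, at which to draw a world-fixed point.

    height_m is the point's height relative to the driver's eye (negative = below eye level).
    Subtracting the car's pitch keeps the symbol locked to the landscape.
    """
    world_elevation = math.atan2(height_m, distance_m)
    return world_elevation - pitch_rad


if __name__ == "__main__":
    # A bump pitches the nose up at 0.5 rad/s for 20 ms (hypothetical numbers).
    pitch = integrate_pitch([0.5] * 20, dt_s=0.001)
    road_spot = display_elevation_rad(height_m=-1.2, distance_m=50.0, pitch_rad=pitch)
    print(f"pitch {pitch:.4f} rad -> draw road spot at {road_spot:.4f} rad")
```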

Drawing on NASA HUD usability and safety studies for reference, Grabowski and Zamojdo chose volumetric technology not just to eliminate the need for eyewear or head-tracking cameras. They also wanted a much more precise, more convincing 3D effect than stereoscopic technology, which relies on a stereo pair of 2D images, could achieve.

The key novelty is a new “swept-volume” type of volumetric display that combines a small internal vibrating projection screen, a vector-graphic laser projector, and high-magnification HUD optics.
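Why a small vibrating screen can sweep image depth from a few yards out to a mile follows from the thin-lens (or, equivalently, concave-mirror) equation. When the screen sits at distance d_o just inside the focal length f of the magnifying optics, the optics form a virtual image at distance d_i = f·d_o / (f − d_o), which grows without bound as d_o approaches f. The Python sketch below is an idealized single-element model with a hypothetical 100 mm focal length; it ignores the windshield, the eye's distance from the optics, and aberrations, and it is not MVSC's actual optical design.

```python
import math


def virtual_image_distance_mm(screen_mm: float, focal_mm: float) -> float:
    """Distance of the virtual image formed by an ideal thin magnifier.

    The screen must lie inside the focal length (screen_mm < focal_mm); at the focal
    plane the image is at optical infinity.
    """
    if screen_mm > focal_mm:
        raise ValueError("screen beyond the focal length forms a real image, not a virtual one")
    if screen_mm == focal_mm:
        return math.inf
    return focal_mm * screen_mm / (focal_mm - screen_mm)


def screen_position_mm(image_mm: float, focal_mm: float) -> float:
    """Inverse mapping: where the screen must be for the image to appear at image_mm."""
    if math.isinf(image_mm):
        return focal_mm
    return focal_mm * image_mm / (focal_mm + image_mm)


if __name__ == "__main__":
    f = 100.0  # hypothetical focal length of the HUD optics, mm
    for target_m in (3.0, 10.0, 100.0, 1600.0, math.inf):
        s = screen_position_mm(target_m * 1000.0, f)
        print(f"image at {target_m:>7} m -> screen at {s:.3f} mm")
```

With these assumed numbers, the screen moves only about 3.2 mm between an image at 3 m and one at infinity, which illustrates how high-magnification optics let a tiny, low-bandwidth actuator cover a large depth range.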

A key contributor to MVSC's ongoing optical design effort is Dave Kessler, who spent 24 years managing the advanced optical design group at Kodak Research Labs. Kessler has filed for a patent on a “pupil-expanded volumetric display,” which may become part of MVSC's planned system design.

While volumetric displays are generally hobbled by their huge data and component bandwidth requirements, MVSC's HUD exploits the geometric simplicity of the Virtual Cable symbology to achieve high performance with remarkably low-cost hardware. At its core is a 3D vector graphic display engine that uses a small, DVD-writer-class laser diode and a few very low-tech, low-bandwidth electromechanical actuators: magnets, coils, and springs. (See Figure 1, above.)
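A back-of-the-envelope comparison shows why vector drawing eases the bandwidth problem. A raster-style volumetric display that addresses every voxel on every refresh must handle the whole volume each frame, while a vector engine only visits the points along the line it draws. The Python sketch below uses hypothetical figures (a 512 × 512 × 256 voxel volume and a 500-point cable, both refreshed at 60 Hz); they are illustrative assumptions, not MVSC specifications.

```python
def voxel_rate(nx: int, ny: int, nz: int, refresh_hz: int) -> int:
    """Voxels per second a raster volumetric display must address (every cell, every frame)."""
    return nx * ny * nz * refresh_hz


def vector_rate(points_per_frame: int, refresh_hz: int) -> int:
    """Points per second a vector engine must draw to trace one polyline per frame."""
    return points_per_frame * refresh_hz


if __name__ == "__main__":
    raster = voxel_rate(512, 512, 256, 60)  # hypothetical voxel volume
    vector = vector_rate(500, 60)           # hypothetical Virtual Cable point count
    print(f"raster: {raster:,} voxels/s")
    print(f"vector: {vector:,} points/s")
    print(f"ratio:  about {raster // vector:,}x")
```

Under these assumptions the raster approach needs about 4.0 billion voxel updates per second versus 30,000 vector points, a ratio of roughly 134,000.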

But these low-tech components are driven by a high-tech brain. Through sophisticated microprocessor control of all these components, including the vibrating screen, the display engine paints the Virtual Cable image 60 times per second with sufficient quality.
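In a swept-volume display, depth comes from timing: the laser spot lands on the moving screen at the instant the screen passes the position that corresponds to the desired depth. The Python sketch below assumes, for illustration only, a purely sinusoidal screen motion z(t) = center + A·sin(2πft) with one cycle per 60 Hz frame; since the screen's vibrations are adjusted to the image content, the real drive waveform may well differ. The function names and numbers are hypothetical.

```python
import math
from typing import List


def firing_times_s(z_mm: float, center_mm: float, amplitude_mm: float,
                   freq_hz: float) -> List[float]:
    """Times within one period at which a screen moving as
    z(t) = center + A*sin(2*pi*f*t) passes through position z_mm.

    A point at one depth can be painted on the outbound stroke, the return stroke, or both.
    """
    s = (z_mm - center_mm) / amplitude_mm
    if not -1.0 <= s <= 1.0:
        raise ValueError("requested position lies outside the swept range")
    period = 1.0 / freq_hz
    phase = math.asin(s)  # radians, in [-pi/2, pi/2]
    t_out = (phase / (2.0 * math.pi)) % 1.0 * period              # screen moving toward +z
    t_back = ((math.pi - phase) / (2.0 * math.pi)) % 1.0 * period  # screen moving toward -z
    if math.isclose(t_out, t_back, rel_tol=0.0, abs_tol=1e-12 * period):
        return [t_out]  # turning point: the screen touches this position only once
    return sorted([t_out, t_back])


def screen_z_mm(t_s: float, center_mm: float, amplitude_mm: float, freq_hz: float) -> float:
    """Screen position at time t for the assumed sinusoidal motion."""
    return center_mm + amplitude_mm * math.sin(2.0 * math.pi * freq_hz * t_s)


if __name__ == "__main__":
    # Hypothetical sweep: 2 mm amplitude about 98 mm, one cycle per 60 Hz frame.
    for t in firing_times_s(99.0, 98.0, 2.0, 60.0):
        print(f"fire at {t * 1e3:.3f} ms, screen at {screen_z_mm(t, 98.0, 2.0, 60.0):.3f} mm")
```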

This screen looks like a small acoustic speaker with a white, concave ceramic dome, but its vibrations are under precise closed-loop control and are constantly adjusted according to the content of the displayed image.
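The control law MVSC uses is not described here, so the Python sketch below swaps in a textbook proportional-integral (PI) controller purely as a stand-in. It drives a toy first-order model of the screen's vibration amplitude toward a target value; the gains, the 10 ms plant time constant, and all names are hypothetical. The integral term removes steady-state error, so the amplitude settles on the target rather than merely near it.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """Textbook proportional-integral controller (a stand-in, not MVSC's design)."""
    kp: float
    ki: float
    integral: float = 0.0

    def update(self, target: float, measured: float, dt_s: float) -> float:
        error = target - measured
        self.integral += error * dt_s
        return self.kp * error + self.ki * self.integral


def simulate_amplitude(target_mm: float = 2.0, steps: int = 2000, dt_s: float = 1e-3,
                       tau_s: float = 0.01) -> float:
    """Run the PI loop against a toy amplitude model and return the final amplitude.

    Toy plant (hypothetical): the amplitude relaxes toward the drive level with time constant tau_s.
    """
    ctrl = PIController(kp=0.5, ki=20.0)
    amplitude_mm = 0.0
    for _ in range(steps):
        drive = ctrl.update(target_mm, amplitude_mm, dt_s)
        amplitude_mm += (drive - amplitude_mm) * dt_s / tau_s
    return amplitude_mm


if __name__ == "__main__":
    print(f"final amplitude: {simulate_amplitude():.4f} mm")
```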

The 3D engine can produce images that are high-resolution and bright enough to be visible against a sunny sky.

Just How Different Is This HUD?

Unlike screen-based navigation systems, a HUD does not require the driver to look away from the road to view a screen. And unlike other HUDs, the True3D system places images with exquisite accuracy (often with less than one meter of error from the target location) into a driver's or pilot's view of the landscape: above or below the horizon, overlaid on GPS locations, or projected independently of “real” objects in the forward field of view.
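Overlaying a symbol on a GPS location starts with finding where that location lies relative to the car. The Python sketch below converts a target's latitude and longitude into a distance and a horizontal angle relative to the car's heading, using a flat-earth (equirectangular) approximation that is reasonable over the short ranges of a driver's view. The function names are hypothetical, and a real system would also have to account for terrain elevation, vehicle attitude, and GPS error.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def local_offset_m(lat0: float, lon0: float, lat: float, lon: float) -> Tuple[float, float]:
    """East/north offset in metres of (lat, lon) from (lat0, lon0), all in degrees.

    Equirectangular approximation: adequate for distances of a few kilometres.
    """
    mean_lat = math.radians((lat0 + lat) / 2.0)
    east = math.radians(lon - lon0) * math.cos(mean_lat) * EARTH_RADIUS_M
    north = math.radians(lat - lat0) * EARTH_RADIUS_M
    return east, north


def target_in_view(car_lat: float, car_lon: float, heading_deg: float,
                   target_lat: float, target_lon: float) -> Tuple[float, float]:
    """Return (azimuth_deg, distance_m) of a target relative to the car.

    heading_deg is a compass heading (0 = north, 90 = east); the returned azimuth is
    measured from the car's heading, positive to the right, in the range [-180, 180).
    """
    east, north = local_offset_m(car_lat, car_lon, target_lat, target_lon)
    bearing_deg = math.degrees(math.atan2(east, north))  # compass bearing to the target
    azimuth_deg = (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
    return azimuth_deg, math.hypot(east, north)


if __name__ == "__main__":
    # Hypothetical: car heading due east, target to the north-east.
    az, dist = target_in_view(37.7749, -122.4194, 90.0, 37.7755, -122.4186)
    print(f"target {dist:.0f} m away, {az:+.1f} deg from heading")
```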

Rather than the fixed image distance of two to three meters typical of HUDs, True3D images can appear at any depth, out to infinity. Images can be shown near or far, over a wide field of view, using regular windshield glass with no special coating. (See Figure 2, above.) The system's depth cues are all correct and consistent with one another, just like those of real objects; for example, normal head movements let the driver see the imaged object from either side.

These features of the True3D HUD are key differentiators, according to MVSC. HUD systems developed by others are severely limited in the depth cues they can display and typically require head-tracking or eye-tracking cameras to align their images with objects outside.
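The head-tracking requirement follows from simple parallax geometry. If a HUD symbol is drawn at a fixed image distance but is meant to cover an object farther away, moving the head sideways shifts the symbol and the object by different angles, so they drift apart. The Python sketch below computes that misregistration; the 5 cm head shift and 50 m object distance are hypothetical, while the 2.5 m image distance falls within the two-to-three-meter range typical of conventional HUDs.

```python
import math


def misregistration_deg(head_shift_m: float, image_dist_m: float,
                        object_dist_m: float) -> float:
    """Angular gap between a HUD symbol and the real object it should cover after the
    eye moves sideways by head_shift_m (symbol and object aligned before the move).

    Each appears to shift by atan(shift / distance); a nearer symbol shifts more.
    Distances may be math.inf (atan2 returns 0 for an infinitely distant point).
    """
    symbol_shift = math.atan2(head_shift_m, image_dist_m)
    object_shift = math.atan2(head_shift_m, object_dist_m)
    return math.degrees(symbol_shift - object_shift)


if __name__ == "__main__":
    head = 0.05  # metres of sideways head movement (hypothetical)
    obj = 50.0   # metres to the road feature being marked (hypothetical)
    print(f"fixed 2.5 m image:      {misregistration_deg(head, 2.5, obj):.2f} deg")
    print(f"image placed at object: {misregistration_deg(head, obj, obj):.2f} deg")
```

With these assumed numbers, the fixed-distance symbol drifts roughly 1.1 degrees off its target, while an image placed at the object's own depth stays aligned whatever the head position, consistent with the claim that True3D images need no head tracking.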

The system can generate still and motion graphics, re-cast aerial imagery for use by ground-level units, or place a “guide wire” navigational line ahead of drivers. Icons and images displayed by the system are designed to be shown with crisp resolution and (optionally) in full color.

Going forward, Grabowski and Zamojdo plan to simplify the hardware even further by employing MEMS scanners and focusers instead of discrete moving-iron or moving-coil actuators.