Q: How do you trust centimeter level accuracy positioning?

Q: How do you trust centimeter level accuracy positioning?

A: The GNSS Solutions article in the July/August 2014 issue of Inside GNSS discussed how measurement errors propagate into GNSS position estimates. The article used the standard positioning algorithm (pseudorange-based single-receiver positioning) as an example to explain the propagation of errors. Following on the previous article, we will answer two related questions: How is high accuracy positioning determined, and when can we trust it?

The previous article concludes that the primary factors that affect the accuracy of position estimates are measurement geometry and measurement accuracy. The former is determined by the relative positions of a user receiver and the visible satellites.

Many users have little flexibility to improve the geometry for given constellations. Some special users — such as those benefiting from the deployment of pseudolites, for example — can improve geometry. Aside from such exceptions, however, the main way of improving positioning accuracy is to mitigate measurement errors as much as possible.

GNSS positioning requires a user receiver to demodulate navigation signals and derive the ranges (distances) between the user antennas and the visible satellites. The range could be derived from the code phase or carrier phase measurements. The former are used to generate pseudoranges, which are used as the basic positioning measurement for many services including mass-market applications where meter level accuracy is required.

For those requiring very high (centimeter/millimeter–level) accuracy), the usual approach is to use the carrier phase instead of the code phase and to mitigate the relevant errors, including satellite ephemeris error, atmospheric effects, environmental effects, receiver-related errors, and measurement noise. The various errors have different characteristics, such as spatial-temporal correlation and frequency dependence, which can be used to mitigate the errors employing such techniques as the use of data from reference stations for modeling, or generating a linear combination of measurements at different frequencies (e.g., widelaning).

Carrier Phase–Based Positioning

For high accuracy positioning with GNSS, carrier phase measurements are required because of their low phase noise and the low level of multipath errors (compared to the code phase). However, as is well documented and understood in the technical literature, the challenge is determine the whole number of carrier cycles (integer ambiguity) when the receiver locks on to a satellite signal.

In order to have a good chance to correctly resolve the integer ambiguity, the residual measurement error should be less than a quarter of a wavelength. This is enabled by a mitigation strategy within, and external to, the user receiver. Two methods employ carrier phase measurements directly: the conventional real-time kinematic (cRTK) and precise point positioning (PPP).

The cRTK method needs at least one extra receiver placed at a known position (reference) in the vicinity of the user. In this positioning mode, raw measurements (carrier phase, pseudorange, and so forth) are transmitted to the user receiver.

Differencing of the raw measurements across receivers and satellites produces the double-difference observable. This observable eliminates the common errors among satellite and receiver clocks and mitigates the spatially correlated errors (e.g., satellite orbit and ionospheric errors). The amount of error mitigation depends on the level of correlation, which is a function of the distance between the receiver and the reference station.

However, cRTK comes at a cost: the noise level is amplified in the observable because measurements from multiple receivers are being combined. Despite this, the double difference results in integer double-differenced ambiguities, and their correct resolution leads to high-accuracy positioning.

Unlike cRTK, the PPP technique is designed to use carrier phase measurements from a single receiver. In this technique, the major errors (including satellite clock, orbit, and ionosphere) are estimated by a wide area network of receivers and transmitted to users in the form of products for error correction. The remaining errors such as tropospheric effects and solid Earth tides are mitigated by applying error models.

The resolution of integer ambiguities in PPP is more difficult than in cRTK due to the relatively large residual errors. Although cRTK and PPP are different in the way that they use carrier phase measurements, a common key issue is the need to resolve the integer ambiguities. The process of resolving the ambiguities is mathematically similar in both cases.

MILS for Ambiguity Resolution

In standard positioning, the four unknowns are all real values. A straightforward least squares method can be used to estimate these unknowns. However, when integer ambiguities are involved, the unknowns include the mixture of real and integer values.

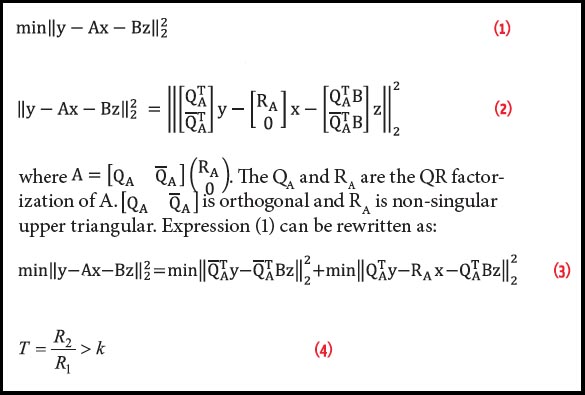

The mixed integer least squares (MILS) problem can be expressed as:

Equation (1) (see inset photo, above right, for all equations)

where y ∈ Rm is the vector of observations; x ∈ Rk is the vector of unknowns to be estimated, excluding the ambiguities; z ∈ Zk are the integer ambiguities; and, Α ∈ Rm×k and Β ∈ Rm×n are coefficient matrices.

Typically, MILS is translated to an integer least squares (ILS) problem and a real least squares (RLS) problem by orthogonal transformations, thus:

Equation (2)

Equation (3)

The first part of (3) is an ILS problem. As long as the z in the first part is solved, the second part becomes an RLS, which is easily solved. The resolution of the ILS part is not straightforward. It normally involves two steps: (1) treating ILS as RLS to get float (real) solutions, and (2) searching all possible integers around the float solutions to find the integer. The second step requires a transformation to reduce the search space for integer solutions. The well-known least-squares ambiguity decorrelation adjustment (LAMBDA) is a typical MILS method used in GNSS positioning.

The Trust Issue

If the ambiguities are resolved correctly, we can expect the positioning accuracy to be at the centimeter level. However, how can these solutions be trusted, and what is the relevant “trust” or “quality” indicator?

Conventionally, we can incorporate code phase or pseudorange-based receiver autonomous integrity monitoring (PRAIM) into standard positioning algorithms to ensure that a level of trust (integrity) can be placed on the solution. PRAIM involves two processes: detection of a potential failure and derivation of the protection level. The former requires the construction of a test statistic using the measurement residuals and the determination of a threshold that indicates the presence of a failure.

The protection level is an estimate of the upper bound of the positioning error that would result from an undetected error (that is, the protection level bounds the position error). Both horizontal and vertical protection levels are used in order to decide if an intended operation can be supported by a satellite navigation system. PRAIM has achieved a level of success in civil aviation to support operations, for example, in the en-route phase of flight.

In the case of high-accuracy positioning, during the integer ambiguity resolution process the ambiguity residuals contribute also to the measurement residuals. Therefore, a high confidence must exist in the correct resolution of the ambiguities (i.e., ambiguity validation) before checking to determine if the position estimates can be trusted (positioning stage integrity monitoring). This necessitates the development of a reliable and efficient carrier phase integer ambiguity validation technique as an integral part of carrier phase–based receiver autonomous integrity monitoring (CRAIM).

Ambiguity Validation

The validation process involves the construction of test statistics using the residuals of ambiguities or the residuals of observations, characterization of their distribution, and determination of thresholds. Example tests include ratio, F-distribution, t-distribution, and Chi-square. These methods have been shown to perform differently depending on the observations and observables.

The common defect in these methods is the assumption of independence or/and bias-free residuals. Specifically, the conventional ratio test uses as its key statistic the ratio of the sum of the squared errors (SSE) of ambiguity residuals between the second-best and best ambiguity candidates. The ratio test is therefore defined as follows:

Equation (4)

where R1 and R2 are the SSEs of the ambiguity residual for the best and second best candidate ambiguities, and k is the threshold.

The main challenge in the use of a distribution to determine the threshold are that R1 and R2 are correlated, and the potential for a bias in R1. A common compromise in this approach is to use a fixed threshold. However, this does not capture the major factors that affect the level of confidence (or success rate) associated with the resolved ambiguities. To address this, the conventional ratio test has been developed further including combining the ratio test with the integer aperture (IA) concept.

The ratio test–based integer aperture (RTIA) is a combination of Monte Carlo simulation for the ratio test with the IA method. This requires the simulation of a large number of normally distributed independent samples of float ambiguities. However, the assumption of independent normally distributed float ambiguities is difficult to justify. Furthermore, the need for significant computational resources for the online simulation of large samples (>100,000) and computation of the success/fail rates precludes the use of IA in real time.

The recently developed doubly non-central F-distribution (DNCF) method is based on the same test statistic used in the ratio test in expression (4). The DNCF distribution describes the statistic characteristics of the quotient of two independent non-central Chi distributions.

Although, the numerator (R2) and denominator (R1) are correlated as addressed earlier, the threshold determined from a DNCF distribution can effectively over-bound the ratio test statistic in terms of confidence level. Therefore, the DNCF-based method acknowledges both correlation and the existence of bias in R1. For comparison purposes, Figure 1 (at the top of this article) shows the relationship between the confidence levels (CL) determined from DNCF and the number of ambiguities (degrees of freedom or DOF) for various thresholds used in a traditional ratio test.

Figure 1 clearly indicates that the traditional fixed-threshold method cannot produce the confidence level needed. Using a fixed threshold results either in a lower confidence level or misses the opportunity to fix integer ambiguities. For example, a selection of a threshold of 2.5 results in low confidence (<0.95) candidates being chosen when the DOF is less than 7, while at high confidence (>0.99) candidates are rejected when the DOF is larger than 18.

Positioning Stage Integrity Monitoring

Although carrier phase measurements are used for high accuracy positioning, pseudorange measurements are still needed to provide constraints for position estimates. Therefore, the construction of test statistics for CRAIM need take into account the full set and subsets of measurements. For example, the full set test statistic includes all types of measurements used (e.g., pseudorange, linear phase combinations, and single frequency carrier phase measurements).

Because the carrier phase–based positioning involves single or double differencing operations, it is important that the mathematical correlation among the observables is accounted for. If any test statistic fails to pass the test (i.e., is larger than the corresponding threshold), a failure-exclusion process is needed. If failure-exclusion is unsuccessful, the position estimates cannot be trusted.

The protection levels can be calculated based on the sensitivity of residuals to the positioning error. This sensitivity for each measurement is also known as the slope. To protect users against potential failures, normally the worst-case scenario (the one with maximum slope value) is considered. Use of a Kalman filtering method allows the calculation of the protection level from the square root of covariance of the estimates (parameters) by a multiplication factor that reflects the confidence level.

Summary

For high-accuracy positioning, low noise carrier phase measurements must be used. Depending on the available resources and error-mitigation scheme, carrier phase can be used either in RTK mode (which needs at least one more receiver in a known position in the vicinity of the user) or PPP mode (which requires correction products generated from a wide area network of receivers).

In order to reach centimeter-level positioning accuracy with trust, the common challenge for both modes is the correct resolution of integer ambiguities. The correct resolution is ensured through ambiguity validation, while the trust is ensured through carrier phase–based integrity monitoring, both of which have been addressed in this article.

Additional Resources

Ambiguity Resolution

- Chang, X., and Zhou, “MILES: MATLAB Package for Solving Mixed Integer LEast Squares Problems,” GPS Solutions, (2007) 11: 289-294

- Teunissen, P., “The Least-Squares Ambiguity Decorrelation Adjustment: a Method for Fast GPS Ambiguity Estimation,” Journal of Geodesy, 70(1-2):65-82, 1995

Ambiguity Validation

- Feng S., and W. Y. Ochieng, J. Samson, M. Tossaint, M. Hernandez-Pajares, J. M. Juan, J. Sanz, À. Aragón-Àngel, P. Ramos-Bosch, and M. Jofre. “Integrity Monitoring for Carrier Phase Ambiguities,” Journal of Navigation, 65(1): 41-58, 2011

- Verhagen, S., and P. Teunissen, “The Ratio Test for Future GNSS Ambiguity Resolution, GPS Solutions, 17(4): 535-548, 2013

CRAIM

- Feng S., and W. Y. Ochieng, T Moore, C. Hill, and C. Hide C. “Carrier-Phase Based Integrity Monitoring for High Accuracy Positioning,” GPS Solutions, 13(1):13-22, 2009

NEO-M8P.jpg)