A completely GPS-based navigation solution is generally not feasible in GNSS signal–challenged environments such as urban canyons. However, even in these difficult environments a partial set of GPS signal measurements may still be available. For instance, one or two satellites are generally still visible even in dense urban canyons.

This limited GPS ranging information is insufficient for complete three-dimensional (3D) positioning and timing. However, we can exploit it to improve the efficiency of alternative navigation aids such as vision-based navigation. Specifically, partial carrier phase measurements can be used for accurate (centimeter-level) initialization of ranges to features extracted from video images.

Once feature ranges have been initialized, a 3D image-based navigation option is enabled and can be applied for accurate navigation in GPS-denied scenarios.

Vision-based navigation serves as a viable augmentation option to GPS. However, a fundamental limitation of vision-based approaches is the unknown range to features that are extracted from video images.

Stereo-vision methods can be used, but their performance is directly determined by the stereo baseline. This limits stereo-vision on small platforms, such as miniature unmanned aerial vehicles (UAVs) and hand-held navigation devices, where the short distance between cameras yields poor depth resolution.
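The dependence on baseline can be made concrete with the standard first-order error model for a rectified stereo pair: depth is Z = fB/d, so a disparity error σ_d produces a depth error of roughly Z²σ_d/(fB). The sketch below is illustrative only; the function names, the 640-pixel, 40-degree camera, and the baseline values are hypothetical choices for the example.

```python
import math


def focal_length_pixels(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from image width and horizontal field of view."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def stereo_depth_sigma(depth_m: float, baseline_m: float, focal_px: float,
                       disparity_sigma_px: float = 1.0) -> float:
    """First-order depth uncertainty of a rectified stereo pair.

    Depth is Z = f * B / d, so |dZ/dd| = Z**2 / (f * B) and
    sigma_Z ~= Z**2 * sigma_d / (f * B).
    """
    return depth_m ** 2 * disparity_sigma_px / (focal_px * baseline_m)


if __name__ == "__main__":
    f_px = focal_length_pixels(640, 40.0)  # hypothetical 640-pixel, 40-degree camera
    for baseline in (0.1, 0.5, 2.0):       # illustrative baselines in metres
        sigma = stereo_depth_sigma(20.0, baseline, f_px)
        print(f"B = {baseline:.1f} m: depth sigma at 20 m is about {sigma:.2f} m")
```

Because the error scales with 1/B, shrinking the baseline from 2 m to 10 cm increases the depth error twenty-fold at the same range.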

Depth information of monocular images can be initialized by using platform motion to synthesize a baseline. In this approach, rather than observing a scene with two different cameras simultaneously (stereo-vision), the same camera observes the scene sequentially from two different locations (synthetic stereo-vision). If the synthetic baseline can be measured, image depth can then be estimated in the same way as in stereo-vision.
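A minimal sketch of synthetic stereo-vision depth resolution, assuming the camera displacement (the synthetic baseline) and the two unit line-of-sight vectors to a feature are known in a common frame. The feature position satisfies c1 + d1·u1 = c2 + d2·u2, so the two ranges follow from a small least-squares problem. The function name and scene values are hypothetical.

```python
import numpy as np


def triangulate_ranges(baseline, u1, u2):
    """Least-squares feature ranges from a synthetic stereo pair.

    The feature satisfies c1 + d1*u1 = c2 + d2*u2, so d1*u1 - d2*u2 = c2 - c1.
    baseline: c2 - c1, the camera displacement between the two images (metres)
    u1, u2:   unit line-of-sight vectors to the feature, expressed in the same frame
    """
    A = np.column_stack((u1, -u2))  # 3x2 design matrix in the unknowns (d1, d2)
    (d1, d2), *_ = np.linalg.lstsq(A, baseline, rcond=None)
    return d1, d2


if __name__ == "__main__":
    feature = np.array([10.0, 5.0, 2.0])  # hypothetical feature position, metres
    c2 = np.array([1.0, 0.0, 0.0])        # camera moved 1 m along x (c1 at the origin)
    u1 = feature / np.linalg.norm(feature)
    u2 = (feature - c2) / np.linalg.norm(feature - c2)
    print(triangulate_ranges(c2, u1, u2))  # ranges from the first and second viewpoints
```

Scaling the baseline by any factor scales both recovered ranges by the same factor, which is why a metric measurement of the baseline is essential.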

As one option, we could measure the synthetic baseline using an inertial navigation system (INS). However, this approach leads to a correlation between inertial errors and range errors, which increases the drift of a vision-aided inertial system unless motion maneuvers are performed to decorrelate the errors.

Another method for measuring the synthetic baseline is to use GPS carrier phase measurements. GPS carrier phase provides relative ranging information that is accurate at a millimeter to sub-centimeter level. This accurate range information can be directly related to the change in platform location between images (i.e., to the synthetic baseline). Specifically, a projection of the position change vector onto the platform-to-satellite line-of-sight (LOS) unit vector is related to the change in the carrier phase between two images.
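The relation between the carrier phase change and the LOS projection of the position change can be sketched as follows. The model is a simplified assumption for illustration: the range change due to satellite motion is known from ephemeris, the receiver clock change is known (or zero), atmospheric and satellite clock changes between the two images are neglected, and the LOS direction is treated as constant between images. The function name `los_displacement_from_phase` is hypothetical.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0              # m/s
GPS_L1_HZ = 1_575.42e6                      # GPS L1 carrier frequency
L1_WAVELENGTH = SPEED_OF_LIGHT / GPS_L1_HZ  # about 0.19 m


def los_displacement_from_phase(delta_phase_cycles, delta_sat_range_m, delta_clock_m=0.0):
    """Estimate e . dr (platform displacement projected on the LOS) from a phase change.

    Assumed simplified model, carrier phase in metres:
        lambda * dphi = d_rho_sat - e . dr + c * d_dt
    d_rho_sat: range change caused by satellite motion alone (from ephemeris)
    e:         unit LOS vector from platform to satellite
    c * d_dt:  receiver clock change in metres (zero if the clock is calibrated)
    """
    return delta_sat_range_m + delta_clock_m - L1_WAVELENGTH * delta_phase_cycles


if __name__ == "__main__":
    e = np.array([0.6, 0.0, 0.8])    # hypothetical LOS unit vector
    dr = np.array([2.0, 0.5, -0.1])  # hypothetical platform displacement, metres
    dphi = (3.0 - e @ dr + 0.25) / L1_WAVELENGTH  # simulated phase change, cycles
    print(los_displacement_from_phase(dphi, 3.0, 0.25), e @ dr)  # the two values agree
```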

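This method uses the temporal change in carrier phase to eliminate the need to resolve integer ambiguities: as long as the receiver maintains phase lock, the integer ambiguity is constant and cancels when the phase is differenced between two epochs. Moreover, the method does not require first estimating position change from carrier phase changes and then applying that delta position estimate to initialize the ranges.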
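The cancellation of the integer ambiguity can be illustrated with an idealized phase model, φ = (ρ + cδt)/λ + N. This sketch assumes no cycle slip between the two epochs and ignores all other error terms; the function names, ranges, clock offsets, and ambiguity values are made up for the example.

```python
WAVELENGTH_M = 0.1903  # GPS L1 carrier wavelength, approximately


def accumulated_phase_cycles(range_m, clock_m, ambiguity_cycles):
    """Idealized accumulated carrier phase: (rho + c*dt) / lambda + N, in cycles."""
    return (range_m + clock_m) / WAVELENGTH_M + ambiguity_cycles


def time_differenced_phase_m(phi_1_cycles, phi_2_cycles):
    """Phase change between two epochs, converted to metres.

    With no cycle slip, N is identical at both epochs and cancels in the difference.
    """
    return WAVELENGTH_M * (phi_2_cycles - phi_1_cycles)


if __name__ == "__main__":
    for n in (0, 7, -123_456):  # arbitrary, unknown integer ambiguities
        phi_1 = accumulated_phase_cycles(21_000_000.00, 150.00, n)
        phi_2 = accumulated_phase_cycles(20_999_998.88, 150.30, n)
        print(n, round(time_differenced_phase_m(phi_1, phi_2), 6))
```

Every ambiguity value yields the same range-plus-clock change (-1.12 m of range plus 0.30 m of clock, or -0.82 m), so N never has to be resolved.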

As shown later in this article, carrier phase observables can be combined directly with vision observables to accurately estimate ranges to features observed by a monocular camera. In this case, monocular image depth can still be resolved even when fewer than four GPS satellites are available and position change cannot be estimated from GPS alone.
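To see why GPS alone cannot deliver position change with fewer than four satellites, consider a time-differenced carrier phase model with the satellite-motion term removed, y_i = -e_i·Δr + cΔδt. The unknowns are the three components of Δr plus the receiver clock change, so the geometry matrix needs rank four. The sketch below, with hypothetical LOS directions and function names, checks this rank and solves by least squares when possible.

```python
import numpy as np


def delta_position_geometry(los_unit_vectors):
    """Rows [-e_i^T, 1] of the time-differenced carrier phase model.

    Assumed model per satellite i, after removing the satellite-motion range change:
        y_i = -e_i . dr + c * d_dt
    Unknowns: the displacement dr (3 components) and the clock change c*d_dt (1).
    """
    E = np.asarray(los_unit_vectors, dtype=float)
    return np.hstack((-E, np.ones((E.shape[0], 1))))


def solve_delta_position(los_unit_vectors, y_m):
    """Least-squares displacement and clock change; requires rank-4 geometry."""
    H = delta_position_geometry(los_unit_vectors)
    if np.linalg.matrix_rank(H) < 4:
        raise ValueError("geometry rank < 4: displacement and clock change are not separable")
    x, *_ = np.linalg.lstsq(H, np.asarray(y_m, dtype=float), rcond=None)
    return x[:3], x[3]


if __name__ == "__main__":
    raw = [[0, 0, 1], [0.8, 0, 0.6], [0, 0.8, 0.6], [-1, -1, 1]]  # hypothetical LOS directions
    los = [np.array(v, dtype=float) / np.linalg.norm(v) for v in raw]
    truth = np.array([1.0, -0.5, 0.2, 0.3])  # dr (m) and c*d_dt (m)
    y = delta_position_geometry(los) @ truth
    print(solve_delta_position(los, y))
    print(np.linalg.matrix_rank(delta_position_geometry(los[:3])))  # 3, fewer than 4 unknowns
```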

The original motivation for vision/GPS integration was to use carrier phase for image depth initialization. Once the image depth is resolved by estimating ranges to vision-based features, vision-only navigation remains available if GPS becomes completely unavailable.

In addition to depth resolution, accurate estimates of delta position and orientation serve as by-products of the carrier phase GPS/vision estimation algorithm. Delta position is defined as the change in the platform position vector between consecutive measurement updates. We can apply delta position estimates to reconstruct platform trajectory for applications such as guidance and control.
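Reconstructing a trajectory from delta position estimates amounts to summing them from a known starting point, as in this minimal sketch. The function name, the starting point, the 2 m/s motion, and the one-second update interval are assumed for illustration.

```python
from itertools import accumulate


def reconstruct_trajectory(start, deltas):
    """Positions obtained by summing per-update delta position vectors onto a start point."""
    def add(p, d):
        return tuple(a + b for a, b in zip(p, d))
    return list(accumulate(deltas, add, initial=tuple(start)))


if __name__ == "__main__":
    # Hypothetical: 2 m/s straight-line motion with one update per second.
    path = reconstruct_trajectory((0.0, 0.0, 0.0), [(2.0, 0.0, 0.0)] * 3)
    print(path)
```

Because each position is a running sum, independent delta position errors with standard deviation σ accumulate to roughly σ√k after k updates, so the reconstructed trajectory drifts slowly even when each individual delta is accurate.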

Research has demonstrated that delta position can be estimated at a sub-centimeter level of accuracy under open-sky GPS conditions. (See, for example, the article by F. van Graas and A. Soloviev cited in the Additional Resources section near the end of this article.) The vision/GPS method that we present here extends accurate delta position capabilities to cases of limited GPS availability.

Our algorithm also estimates the camera’s orientation, in particular its heading angle. An estimated heading especially benefits integration with low-cost inertial sensors, for which heading is only weakly observable except during acceleration and/or turning maneuvers.

This article focuses on the GPS/vision integration approach for point features. However, the approach can be generalized to other feature representations, such as line features or planar surfaces.

GPS/vision estimation can operate with very limited GPS availability. Specifically, it requires only one satellite if the receiver clock has previously been initialized and the camera’s orientation is known. Two satellites are needed if the clock is calibrated but the orientation is unknown, and three satellites are required if both the clock and the orientation are unknown.
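The single-satellite case can be illustrated with a noise-free linear toy problem. Assuming the camera orientation is known (so the unit bearings u1_i and u2_i to each feature at the two images are expressed in the navigation frame) and the clock is calibrated (so the carrier phase supplies e·Δr directly), the vision constraints d1_i·u1_i - d2_i·u2_i - Δr = 0 plus one carrier phase row fix both Δr and every feature range. This is a hypothetical sketch of the idea, not the estimator formulated in this article; it also assumes the motion is not perpendicular to the satellite LOS.

```python
import numpy as np


def joint_vision_gps_solve(u1s, u2s, los_nav, los_displacement_m):
    """Jointly estimate displacement dr and feature ranges from vision plus one satellite.

    Vision rows (per feature i): d1_i*u1_i - d2_i*u2_i - dr = 0    (homogeneous)
    Carrier phase row:           los_nav . dr = los_displacement_m (clock calibrated)
    Unknown vector: [dr (3), d1_0, d2_0, d1_1, d2_1, ...]
    """
    n = len(u1s)
    A = np.zeros((3 * n + 1, 3 + 2 * n))
    b = np.zeros(3 * n + 1)
    for i, (u1, u2) in enumerate(zip(u1s, u2s)):
        rows = slice(3 * i, 3 * i + 3)
        A[rows, 0:3] = -np.eye(3)
        A[rows, 3 + 2 * i] = u1
        A[rows, 4 + 2 * i] = -u2
    A[-1, 0:3] = los_nav  # the single GPS observable fixes the scale
    b[-1] = los_displacement_m
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:3], x[3:].reshape(n, 2)  # dr, and (range at image 1, range at image 2) per feature


if __name__ == "__main__":
    P = np.array([[10.0, 5.0, 2.0], [4.0, -6.0, 3.0], [8.0, 1.0, -4.0]])  # hypothetical features
    dr_true = np.array([2.0, 0.5, 0.1])       # hypothetical displacement between images
    u1s = [p / np.linalg.norm(p) for p in P]  # bearings from the first position (origin)
    u2s = [(p - dr_true) / np.linalg.norm(p - dr_true) for p in P]
    e = np.array([0.5, 0.3, 0.8]) / np.linalg.norm([0.5, 0.3, 0.8])  # hypothetical LOS
    dr_est, ranges = joint_vision_gps_solve(u1s, u2s, e, e @ dr_true)
    print(dr_est)
    print(ranges)
```

With noise-free inputs the least-squares solution reproduces the true displacement and ranges; with real measurements, additional features make the system overdetermined.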

In this article, we will first offer a conceptual explanation of the GPS/vision integration method followed by a complete formulation of the estimation routine. Finally, we will provide simulation results and initial field test results to validate the proposed algorithm and demonstrate its performance characteristics.

Concept

The carrier phase/vision range initialization approach is based on observing vision-based features from two different platform locations. The problem with using video features alone is that the vision observables, which relate changes in feature parameters to changes in navigation parameters and to the unknown feature ranges, are homogeneous. Such homogeneous observables can be resolved only up to an unknown scale factor.
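The scale ambiguity can be checked numerically. The sketch below stacks the homogeneous vision constraints d1_i·u1_i - d2_i·u2_i - Δr = 0 for three features in a hypothetical scene (camera orientation assumed known, function name hypothetical) and shows that the matrix is one rank short of the number of unknowns: any multiple of the true solution satisfies the equations equally well.

```python
import numpy as np


def vision_only_matrix(u1s, u2s):
    """Stack the homogeneous constraints d1_i*u1_i - d2_i*u2_i - dr = 0.

    Unknown vector x = [dr (3), d1_0, d2_0, d1_1, d2_1, ...]; the system is A @ x = 0.
    """
    n = len(u1s)
    A = np.zeros((3 * n, 3 + 2 * n))
    for i, (u1, u2) in enumerate(zip(u1s, u2s)):
        r = slice(3 * i, 3 * i + 3)
        A[r, 0:3] = -np.eye(3)
        A[r, 3 + 2 * i] = u1
        A[r, 4 + 2 * i] = -u2
    return A


if __name__ == "__main__":
    P = np.array([[10.0, 5.0, 2.0], [4.0, -6.0, 3.0], [8.0, 1.0, -4.0]])  # hypothetical features
    dr = np.array([2.0, 0.5, 0.1])                                       # hypothetical motion
    u1s = [p / np.linalg.norm(p) for p in P]
    u2s = [(p - dr) / np.linalg.norm(p - dr) for p in P]
    A = vision_only_matrix(u1s, u2s)
    ranges = np.column_stack((np.linalg.norm(P, axis=1), np.linalg.norm(P - dr, axis=1)))
    x_true = np.concatenate((dr, ranges.ravel()))
    print(A.shape, np.linalg.matrix_rank(A))     # one rank short of the unknown count
    print(np.allclose(A @ (3.0 * x_true), 0.0))  # a scaled solution fits equally well
```

Adding a single equation that fixes the component of Δr along some direction, such as one carrier phase LOS measurement, removes this null space.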

. . .

GPS/Vision Estimation Approach

This section formulates the carrier phase GPS/vision solution for the general six-degrees-of-freedom (6 DOF) motion case. First, vision observables of the estimation algorithm are formulated. Next, we provide the GPS observation equations and then describe the estimation algorithm.

Vision observables. Vision observables are formulated for the general case of multi-aperture vision, in which video images are recorded by multiple camera apertures. Compared with a single aperture, the multi-aperture formulation has a number of advantages, including improved situational awareness, more high-quality features, and better feature geometry. These advantages are discussed further in the article by A. Soloviev et al. cited in Additional Resources.

. . .

Estimation procedure. The estimation procedure combines the vision and GPS measurement observables formulated by equations (3) and (8), respectively. Note that the position change vector in Equation (3) is resolved in the camera body frame, while the same vector in Equation (8) is resolved in the navigation frame; combining the two observables therefore involves the camera’s orientation relative to the navigation frame.
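The frame bookkeeping can be sketched as follows, assuming a navigation frame with north-east-down axes, a camera body frame aligned with the platform body axes, and the common aerospace yaw-pitch-roll (Z-Y-X) rotation sequence; none of these conventions is specified in the text above, and the function names are hypothetical. The body-frame position change is rotated into the navigation frame before it is projected onto the navigation-frame LOS.

```python
import numpy as np


def dcm_body_to_nav(roll, pitch, yaw):
    """Body-to-navigation direction cosine matrix, Z-Y-X (yaw, pitch, roll), radians."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    Rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    Ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    return Rz @ Ry @ Rx


def predicted_los_range_change(los_nav, delta_r_body, C_b_n):
    """Project a body-frame displacement onto a navigation-frame LOS unit vector."""
    return los_nav @ (C_b_n @ delta_r_body)


if __name__ == "__main__":
    C_b_n = dcm_body_to_nav(0.0, 0.0, np.radians(90.0))  # hypothetical: heading due east
    dr_body = np.array([2.0, 0.0, 0.0])                 # 2 m forward in the body frame
    e_nav = np.array([0.0, 0.6, -0.8])                  # hypothetical LOS in NED (up is -z)
    print(predicted_los_range_change(e_nav, dr_body, C_b_n))  # about 1.2 m
```

Because heading enters the rotation matrix, a heading error rotates the predicted displacement and changes its projection onto the LOS, which is one way to see how the combined observables carry heading information.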

Test Results

This section presents simulation results and initial experimental results that validate the GPS/vision integrated solution and evaluate its performance characteristics.

Simulation results. The following simulation scenario was implemented:

• Motion scenario: straight-line motion at two meters/second

• GPS measurements: carrier phase with five-millimeter (one sigma) noise

• Vision: multi-aperture vision system with four cameras having mutually orthogonal axes, a 40×30-degree field of view, and 640×480 resolution

• Vision features: 10 features uniformly distributed over the multi-aperture field of view

• Feature measurement noise: one pixel (sigma)

• Inertial: lower-cost inertial unit with 0.1 degree/second (360 degree/hour) gyro drift

• Algorithm update rate: one update per second
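To put the simulation parameters in angular terms, the short calculation below converts the field of view and resolution into an average angle per pixel and restates the gyro drift. Spreading the field of view evenly across pixels is an approximation (the true per-pixel angle varies slightly across a pinhole image), and the 20-meter range used for the lateral offset is hypothetical, as are the function names.

```python
import math


def pixel_angle_mrad(fov_deg: float, pixels: int) -> float:
    """Average angle per pixel in milliradians (field of view spread evenly over the pixels)."""
    return 1000.0 * math.radians(fov_deg) / pixels


def lateral_offset_m(range_m: float, angle_mrad: float) -> float:
    """Small-angle lateral displacement corresponding to an angular error at a given range."""
    return range_m * angle_mrad / 1000.0


def deg_per_s_to_deg_per_h(rate_deg_s: float) -> float:
    """Convert an angular rate from degrees/second to degrees/hour."""
    return rate_deg_s * 3600.0


if __name__ == "__main__":
    horiz = pixel_angle_mrad(40.0, 640)  # simulated camera, horizontal axis
    vert = pixel_angle_mrad(30.0, 480)   # simulated camera, vertical axis
    print(f"{horiz:.3f} mrad/pixel horizontal, {vert:.3f} mrad/pixel vertical")
    print(f"one-pixel error at a hypothetical 20 m range: {lateral_offset_m(20.0, horiz):.3f} m")
    print(f"gyro drift: {deg_per_s_to_deg_per_h(0.1):.0f} degree/hour")
```

Both axes of the simulated camera come out at about 1.09 mrad per pixel; the same arithmetic applied to the 45-degree, 1280-pixel experimental camera gives about 0.61 mrad per pixel.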

Note that the simulation results reported here were generated for a multi-aperture camera system. However, we observed that increasing the number of apertures does not noticeably influence overall system performance. Therefore, similar results can be expected for a single-aperture implementation.

. . .

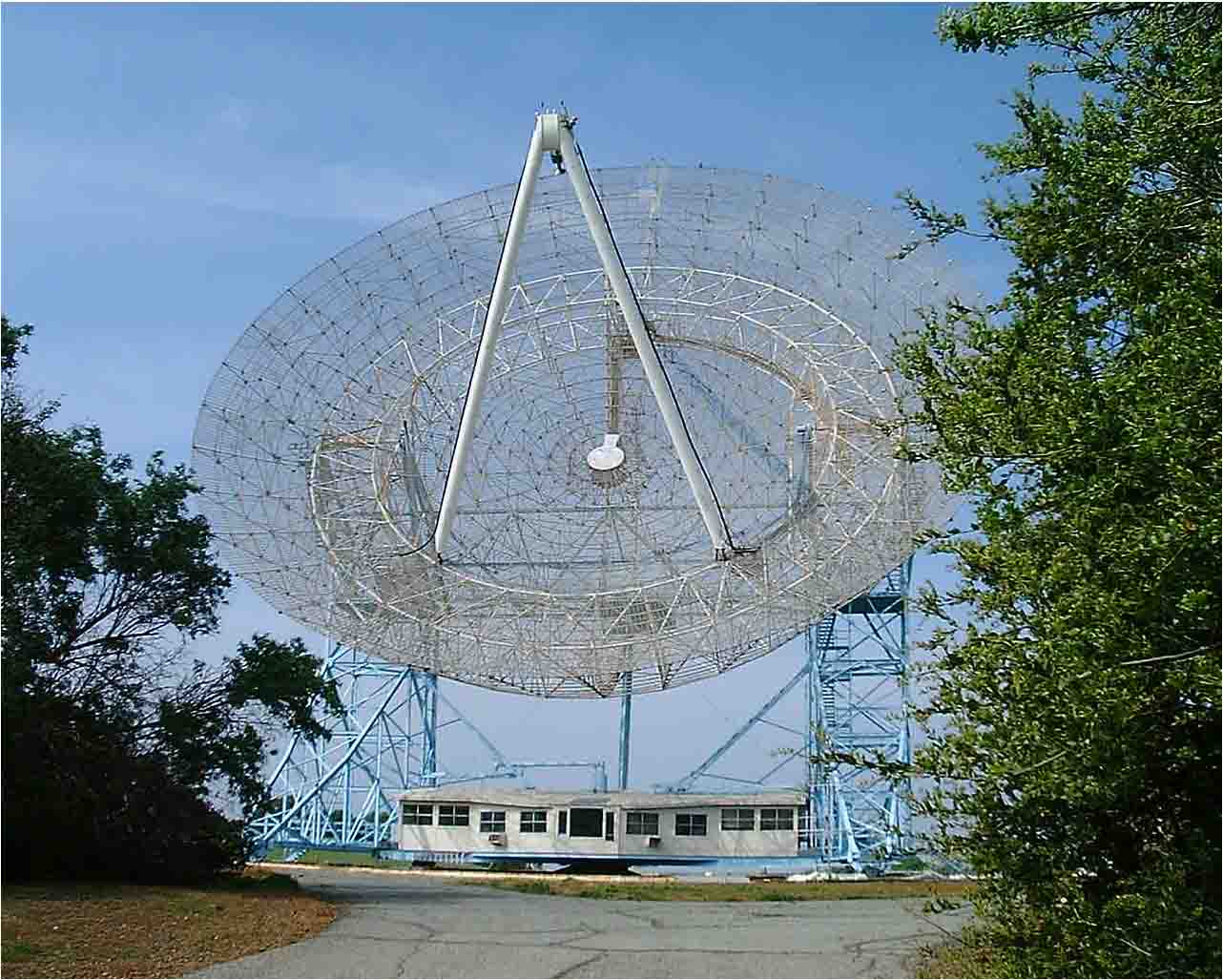

Experimental results. The experimental setup was developed at the Advanced Navigation Technology (ANT) Center at the Air Force Institute of Technology (AFIT) and used the following equipment for the GPS/vision experiment:

• GPS: 24-channel, dual-frequency receiver

• Vision:

– one-camera system

– 45-degree horizontal field of view

– 1280×1024 resolution

– feature measurement noise: one pixel (sigma)

– SURF feature extraction

• Inertial:

– lower-cost inertial unit: 0.5 degree/second (1800 degree/hour) gyro drift

. . .

Overall, simulation results and initial test results presented in this section demonstrate the validity of the integrated GPS/vision estimation approach. The results show delta position estimation that is accurate at a centimeter to sub-decimeter level and heading estimation accuracy in a range from one to three degrees.

Conclusions

This article proposes integrating partial GPS measurements with vision-based features for navigation in GPS-challenged environments where a stand-alone GPS navigation solution is not feasible. The integration routine estimates the platform’s delta position and heading angle and initializes ranges to features observed by a monocular video camera. Simulation results and initial test results demonstrate the efficacy of the vision/GPS data fusion algorithm.

Future work will focus on detailed evaluations with experimental data, including transitions between partial-GPS and GPS-denied environments. Future work will also consider fusing vision and GPS to estimate absolute position states (rather than delta position) under limited satellite availability.

Acknowledgement

This article is based on a paper presented at the 2010 Position Location and Navigation Symposium (PLANS 2010), copyright IEEE. The authors would like to thank the ANT Center at AFIT for providing the data collection setup.

Additional Resources

[1] Kaplan, E., and C. Hegarty (Editors), Understanding GPS: Principles and Applications, 2nd ed., Artech House, Norwood, Massachusetts, USA, 2006.

[2] Soloviev, A., “Tight Coupling of GPS, Laser Scanner, and Inertial Measurements for Navigation in Urban Environments,” Proceedings of IEEE/ION Position Location and Navigation Symposium, Monterey, California, May 5-8, 2008.

[3] Soloviev, A., J. Touma, T. J. Klausutis, A. Rutkowski, and K. Fontaine, “Integrated Multi-Aperture Sensor and Navigation Fusion,” Proceedings of the Institute of Navigation GNSS-2009, September 2009.

[4] van Graas, F., and A. Soloviev, “Precise Velocity Estimation Using a Stand-Alone GPS Receiver,” NAVIGATION, Journal of the Institute of Navigation, Vol. 51, No. 4, 2004.

[5] Veth, M. J., “Fusion of Imaging and Inertial Sensors for Navigation,” Ph.D. dissertation, Air Force Institute of Technology, September 2006.