There has been much discussion of the need for resilient PNT over the past few years as dependencies have grown and an evolving threat matrix has become more active. As a nation, we need a measured and cost-effective response commensurate with the level of threats and the possible consequences.

Logan Scott, Logan Scott Consulting

Do we even know what resilience is? Mostly it looks like extra cost when you don’t need it. Then, when it is needed and you don’t have it, it looks like failure—sort of like the Texas power grid back in February. Resilience has costs, and budgets are bounded. My working definition for resilience is that it is about building sufficiently secure and reliable systems out of insecure and unreliable components operating in an indeterminate and evolving environment.

In Richard Cook’s three-page masterwork “How Complex Systems Fail,” he observes: “complex systems run as broken systems. The system continues to function because it contains so many redundancies and because people can make it function, despite the presence of many flaws.” Furthermore, he notes that resilience can be improved by “establishing means for early detection of changed system performance in order to allow graceful cutbacks.” Situational awareness is the key to discovering where problems might be developing and then taking corrective action before catastrophic failure.

How do you measure resilience? One way is to try to break it and then decide whether the protection is adequate for the domain of use. Any system will break under sufficient stress. Determining what is sufficient is a hard question but it is a key question. Resilience could end up being quantified using a series of tests like UL standards for safes. You expose the safe to a skilled safecracker and see how long it takes them to break in. Interestingly, the highest security rating, TXTL-60, only guarantees protection for 60 minutes. After that, you need another plan.

Finally, how do you maintain resilience? Again, from Richard Cook: “The state of safety in any system is always dynamic; continuous systemic change ensures that hazard and its management are constantly changing.” As systems and threats evolve, new failure modes and attack vectors develop, and so the challenge is to respond with commensurate protections. Operator training and controlled exposure to threats are essential. Experience builds confidence and speeds reaction times.

The Need for Standards and Mandates

A core question we have not addressed at a national policy level is how to incentivize resilience. When seat belts were invented, they were made available as an option by Ford and others. It was not a popular option. Fewer than 2% of buyers elected to get them. Then, through a series of federal mandates, they became required equipment, and later we saw requirements to use them. The point is that safety standards are needed, they have costs, and, if they are left optional, they may not be implemented or used.

I like that DHS is addressing resiliency as a risk management question, but leaving the implementation of protections entirely up to the user community strikes me as unworkable. Our user communities are rarely aware of the potential risks and possible consequences, much less how to address them. Even when they are aware of the risks, industry is often more driven by cost considerations under nominal conditions, and they fail to prepare.

I’ll pick on the Texas power grid in February again, but I could also have picked on “just in time” manufacturing systems vulnerable to supply chain disruptions, or the pilots who let the Ever Given onto the Suez Canal. By establishing standards and exposure-based testing procedures, vendors and buyers in critical infrastructure domains can avoid the more egregious outcomes in a cost-effective manner.

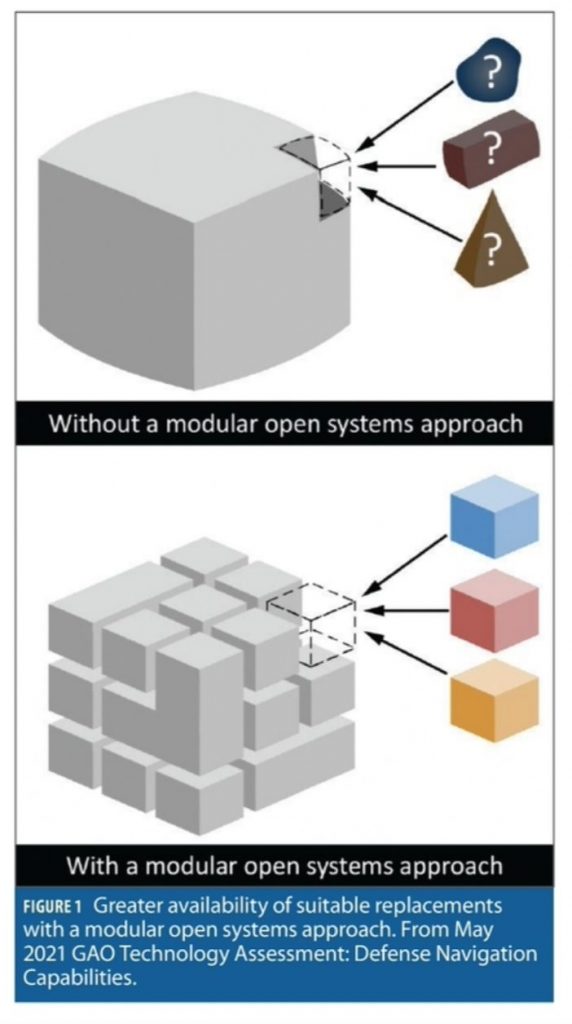

The Need for Modular Open Systems Architectures (MOSA)

In prior discussions I noted that building a resilient architecture is not just about having the right parts; they must be integrated correctly (and tested). MOSA is about effectively leveraging the capabilities of diverse system components and maintaining currency as new innovations and technologies become available. MOSA is a platform, it is an operating system, it is an enabler. It is not a point solution.

A cell phone’s positioning process is a great example of MOSA. Android phones come in diverse flavors and have a rapid innovation cycle based on a rich and constantly evolving ecosystem of parts. Yet, they all manage to integrate sensors together to establish position with good accuracy both indoors and outdoors. That said, cellphones performed abysmally when exposed to inadvertent spoofing at the ION GNSS+ conference in 2017.

In many ways, integrity and resilience are highly intertwined problems and so, there are opportunities within MOSA constructs to approach the problem of safely integrating less than 100% trusted, 100% reliable components. Adding trust modules using, for instance, Bayesian inference approaches can substantially harden systems operating with uncertainty. When you hear that rattling sound in your car, you may not know what it is, but you do know to investigate. Experience with cybersecurity shows the need for a rapid update and response cycle—MOSA will help.
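As a minimal sketch of what a Bayesian trust module might look like, the snippet below keeps a running probability that a component is trustworthy and updates it each time the component's output is cross-checked against the rest of the system. The function names and the two likelihood values are illustrative assumptions, not figures from any fielded system.

```python
from typing import Sequence


def update_trust(prior: float, consistent: bool,
                 p_consistent_if_good: float = 0.95,
                 p_consistent_if_bad: float = 0.30) -> float:
    """One Bayes update of P(component is trustworthy) after a cross-check.

    The two likelihoods are hypothetical: how often a good (or a faulty or
    spoofed) component is expected to agree with the other sensors.
    """
    if consistent:
        like_good, like_bad = p_consistent_if_good, p_consistent_if_bad
    else:
        like_good, like_bad = 1.0 - p_consistent_if_good, 1.0 - p_consistent_if_bad
    numerator = like_good * prior
    return numerator / (numerator + like_bad * (1.0 - prior))


def trust_after(prior: float, checks: Sequence[bool]) -> float:
    """Apply a sequence of cross-check outcomes to an initial trust level."""
    trust = prior
    for ok in checks:
        trust = update_trust(trust, ok)
    return trust
```

With these assumed likelihoods, a component that starts at 0.9 trust drops below 0.5 after a single failed cross-check, which is the software analogue of hearing the rattle and deciding to investigate.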

The Need for Cybersecurity and Authentication

Modern PNT systems are computers, often running a full operating system. For good or ill, they will almost certainly connect with a network in some manner. Authentication is about knowing where your data comes from, knowing where your software comes from, and establishing a chain of evidence to establish provenance. Any software updates and/or data ingested need to be authenticated to establish that they come from a trusted source. This includes not only ephemeris but also maps, databases, reference station data, and any cryptographic key material essential to operation. The Chips Message Robust Authentication (CHIMERA) concept adds to this a means for authenticating pseudorange measurements that form the basis for position and time estimates.
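The check-before-ingest idea can be sketched in a few lines. This example uses a symmetric HMAC from the Python standard library purely as a stand-in; a real deployment would more likely use public-key signatures so that receivers hold no secret capable of forging data. The function names and the sample payload are hypothetical.

```python
import hashlib
import hmac


def sign_message(key: bytes, payload: bytes) -> bytes:
    """Compute an authentication tag over a payload (e.g. an ephemeris record)."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def is_authentic(key: bytes, payload: bytes, tag: bytes) -> bool:
    """Return True only if the tag matches the payload under the shared key.

    compare_digest avoids leaking information through comparison timing.
    """
    return hmac.compare_digest(sign_message(key, payload), tag)


# Usage: the receiver ingests data only after the tag verifies.
key = b"hypothetical-shared-key"
ephemeris = b"PRN=07;toe=345600;sqrtA=5153.6"  # made-up record for illustration
tag = sign_message(key, ephemeris)
accepted = is_authentic(key, ephemeris, tag)
rejected = is_authentic(key, ephemeris + b";tampered", tag)
```

The same pattern applies to maps, databases, reference station data, and software updates: nothing enters the estimation process until its provenance has been verified.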

In connected applications, it is important to recognize that when a receiver reports its position, there are diverse “man-in-the-middle” attacks that can corrupt or alter data before it gets to its destination. When a ship’s autopilot receives position and velocity reports from a GNSS receiver, how does it know that there is not a data-altering dongle between it and the receiver? Spoofing is an effect, not a method, and cyberspoofing is often a much easier (and more powerful) method compared with RF spoofing.

A detailed discussion is out of scope for this article, but many of the public key infrastructure (PKI) protocols and procedures used in Internet communications have direct applicability. Trusted platform modules (TPM) and subscriber identity modules (SIM) such as those used in computers and cell phones can further enhance security. Updates analogous to antivirus protections can maintain a receiver’s ability to recognize threats as they evolve.

In the MOSA paradigm, if subsystems can report problems due to spoofing, jamming, cyberattack, hardware failure, software corruption, and so on, and there are performance and security monitors in place to aggregate information and watch for discrepancies, a more effective and resilient response can be mounted.
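One simple way a monitor could watch for discrepancies is to compare each subsystem's position estimate against a robust consensus of all of them. The sketch below uses a component-wise median as that consensus; the subsystem names, the local east/north coordinates, and the 50 m threshold are assumptions chosen only for illustration.

```python
from math import hypot
from statistics import median
from typing import Dict, List, Tuple


def flag_discrepancies(estimates: Dict[str, Tuple[float, float]],
                       max_offset_m: float = 50.0) -> List[str]:
    """Return the names of subsystems whose position disagrees with consensus.

    estimates maps a subsystem name to (east_m, north_m) in a shared local
    frame. The consensus is the component-wise median, which a single bad
    source cannot drag far when at least three sources report.
    """
    if len(estimates) < 3:
        return []  # too few independent sources to outvote a bad one
    ref_east = median(e for e, _ in estimates.values())
    ref_north = median(n for _, n in estimates.values())
    return sorted(
        name for name, (e, n) in estimates.items()
        if hypot(e - ref_east, n - ref_north) > max_offset_m
    )


# Usage: a spoofed GNSS fix stands out against inertial and cellular estimates.
suspects = flag_discrepancies({
    "gnss": (500.0, 0.0),
    "inertial": (2.0, 1.0),
    "cellular": (1.0, -1.0),
})
```

A production monitor would weight sources by their stated uncertainty and track discrepancies over time, but even this crude vote shows why aggregation across diverse subsystems pays off.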

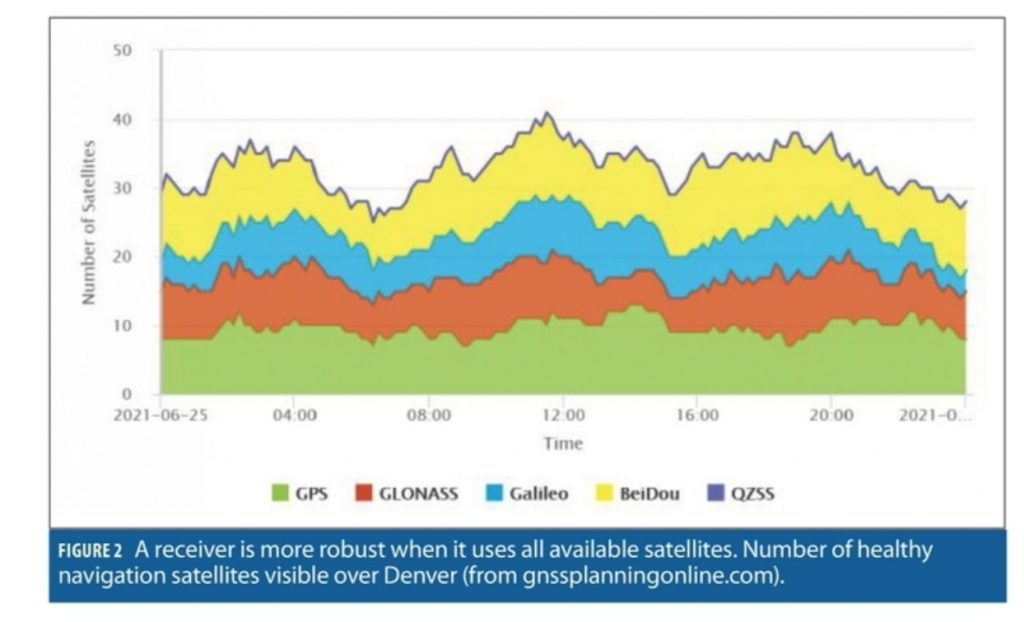

The Need for Trustable Multi GNSS

In the quest for resilience and augmentations, I am not convinced that we as a nation have fully explored how to safely integrate foreign navigation systems into critical applications. Access to more signals and more systems offers considerable resilience potential. Instead, the FCC has unilaterally restricted their use under part 25 rules with limited justification. Perversely, this has led to some US companies flying their satellites under foreign flags so as to gain legal access to FCC-proscribed navigation signals. These same restrictions limit the performance and utility of precision positioning systems, receiver autonomous integrity algorithms, positive train control systems, snapshot RTK processing and spoofing detection processes.

One of the things I found fascinating about the Galileo failure in July 2019 was that the satellites all continued to produce good ranging signals. If you could provide your own ephemeris, say from JPL, NASA, NSWC, and/or other sources, you still got great performance. Treating foreign navigation satellites as “signals of opportunity” and using curated and signed US-generated ephemeris strikes me as a powerful and inexpensive augmentation. Much less trust is placed in the foreign state, yet you get a lot of augmentation benefit for minimal cost. Additionally, you limit the impact of a global system’s outage. If Galileo had been the only game in town, its one-week outage would have been catastrophic. As it was, its absence was noted but had almost no effect on GNSS-dependent operations.

The Need for an Honest Evaluation of Ligado’s Impact

GNSS really is different from communications. The FCC, by setting a standard where the mechanism of harm is to place GNSS receivers in deep and uncontrolled saturation, ignores the possibility of normally harmless signals mixing and causing harm. None of the testing to date has explored this issue and so, our national policy might be the RF equivalent of mixing alcohol and fentanyl and hoping for the best. Furthermore, the FCC showed almost no cognizance of the importance of GNSS-based remote sensing in monitoring climate change. The RF smog that Ligado’s signals will create restricts our ability to develop a clearer picture of what is happening to the planet. The FCC’s decision needs to be revisited using sound engineering as a basis.

The Need for Situational Awareness

Smoke alarms do not extinguish fires, but by providing an early warning, they allow for a more timely and effective response. Intelligent receivers provide warnings that interference is taking place so users can take corrective actions. With unambiguous statements like “I am jammed and do not know where I am / what time it is,” less time is spent debugging GNSS-dependent systems. Multi-sensor systems can avoid ingesting hazardously misleading information (HMI) into their PNT estimation processes and so can avoid corrupting estimates. Fleet managers will know when an employee is jamming his work vehicle and can have a quiet conversation to correct this behavior.

Building a modicum of intelligence into a receiver is neither hard nor costly. At a minimum, sudden changes in automatic gain control (AGC) settings are highly indicative of interference. If the AGC is telling you there is a lot of power coming into the receiver’s front end and your C/N0 meters are saying SNR is high, you probably have a spoofer. Add to this a few other simple techniques (see chapter 24 of Position, Navigation, and Timing Technologies in the 21st Century), and the receiver can provide a feature-rich description of the RF environment it is experiencing.
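The AGC and C/N0 rule of thumb described above can be written down as a short decision function. This is a sketch under stated assumptions: the input is taken to be the rise in front-end power inferred from the AGC relative to a quiet baseline, and both thresholds are hypothetical values that would need tuning for any real receiver.

```python
from statistics import mean
from typing import Sequence


def classify_rf_environment(agc_power_rise_db: float,
                            cn0_dbhz: Sequence[float],
                            power_rise_threshold_db: float = 3.0,
                            healthy_cn0_dbhz: float = 40.0) -> str:
    """Coarse interference flag from AGC-inferred power and tracked C/N0.

    agc_power_rise_db: increase in front-end input power implied by the AGC,
        relative to a quiet-sky baseline (assumed to be available).
    cn0_dbhz: C/N0 readings for the satellites currently tracked.
    """
    if agc_power_rise_db < power_rise_threshold_db:
        return "nominal"
    # Extra power is present. Treat "nothing tracked" as the worst-case C/N0.
    mean_cn0 = mean(cn0_dbhz) if cn0_dbhz else 0.0
    if mean_cn0 >= healthy_cn0_dbhz:
        # Strong in-band power that still looks like clean GNSS signals.
        return "possible spoofing"
    return "possible jamming"
```

For example, `classify_rf_environment(10.0, [45.0, 46.0, 44.0])` returns `"possible spoofing"`, while the same power rise with C/N0 readings near 25 dB-Hz returns `"possible jamming"`. The value lies less in the exact thresholds than in giving downstream systems an unambiguous statement about the RF environment.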

Earlier, I noted that most receivers connect to networks. Aggregating reports from multiple receivers makes it possible to geolocate interference sources and characterize their behavior using crowdsourcing methods. With this more global level of situational awareness, we would have a much better picture of where the interference hot spots are and a better understanding of the motivations driving their use. Again, early warning of trends provides a basis for a commensurate and less costly response.
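To illustrate how aggregated reports could yield a rough source location, the sketch below computes a power-weighted centroid of the reporting receivers' positions. This is a deliberately crude stand-in for real geolocation methods (which would model propagation and use timing or angle information); the report format and local east/north coordinates are assumptions.

```python
from typing import Sequence, Tuple

Report = Tuple[float, float, float]  # (east_m, north_m, power_rise_db)


def estimate_source(reports: Sequence[Report]) -> Tuple[float, float]:
    """Estimate an interference source as the power-weighted centroid.

    Each report gives a receiver position in a shared local frame and the
    interference power rise it observed in dB. Powers are converted to
    linear units so that nearby (louder) receivers dominate the estimate.
    """
    if not reports:
        raise ValueError("at least one report is required")
    weights = [10.0 ** (power_db / 10.0) for _, _, power_db in reports]
    total = sum(weights)
    east = sum(w * e for w, (e, _, _) in zip(weights, reports)) / total
    north = sum(w * n for w, (_, n, _) in zip(weights, reports)) / total
    return east, north


# Usage: the estimate is pulled toward the receiver seeing the most power.
east, north = estimate_source([(0.0, 0.0, 5.0), (100.0, 0.0, 20.0), (50.0, 80.0, 8.0)])
```

Even an estimate this coarse, refreshed as vehicles and phones move, would be enough to map hot spots and show trends over time.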

Finally, providing law enforcement with accurate jammer locations would allow for enforcement actions, not so much because they want to catch jammers, but because of the criminal activity motivating the jamming.

Augmentations and the Role of Markets

One of the most insidious things about GNSS is its price to the user: free. That, combined with its worldwide coverage and superb accuracy in both time and positioning creates significant barriers to entry for new offerings. In the commercial arena, a new entry that provides only the same services as GNSS, maybe a little better, seems doomed. A successful entrant will need to have a value-added proposition with features that cannot be met using GNSS.

Communications facilities, indoor operation, proof of integrity and location, and uninterruptible service would all be on my short list. In large measure, these capabilities can be provided by combining GNSS with other sensors and systems, especially if we use the full constellation of 125 navigation satellites on orbit and healthy now. That said, I do expect new entrants.

5G NR and 802.11 both have strong potential to meet the requirements of my short list, especially as they move towards higher frequencies. Yes, the ranges there will be short, but infrastructure densities will be high. Both technologies have strong and active initiatives within their standards-setting process oriented towards providing accurate, high-integrity positioning. Also, because they are extant systems, there is less pressure to offer ubiquitous service at inception, a daunting challenge for a brand-new entrant.

I expect LEO satellite systems will also have a role. Because they have high angular rates across the sky, you can get nearly instant-on cm-level positioning. Operating at higher frequencies, e.g., X, Ku, or even V-band, they can simultaneously provide strong communications capabilities when outdoors and so might play very well in the autonomous vehicle markets. Yes, the antenna issues are challenging, but they ride a wave of actual deployments.

The Role of Government

So, what is the proper role for government? Well first, stably fund, maintain, and operate GPS. GPS is foundational critical infrastructure, not easily supplanted.

Recognize that GPS signals are extremely weak. Like fish in a river when the river becomes polluted, expect GPS receivers stressed by interference to become less resilient and more prone to unexpected anomalies. Monitoring the health of our spectrum on a continuous basis is of paramount importance, so we can act early and with resolve. Crowd-sensing approaches using intelligent receivers offer an important means to do so, but it will require coordination and open sharing of results.

Providing secure ephemeris and integrity data to support safe use of multi GNSS should be funded. Most of the parts are already in place, and it is mostly a matter of setting up a service offering. Of course, removing FCC roadblocks to its use is also essential. PPP data services should be considered as part of the package to promote rapid adoption of “safe ephemeris.” The same public-facing servers could also provide key materials for civil signal authentication, e.g. fast CHIMERA keys.

Beyond that, government’s role should be one of, dare I say it, leadership. Defining what we want for resilience and what standards of performance are needed in critical applications is only part of the solution. We also need to take action to ensure these standards are met by introducing clear requirements and ensuring necessary infrastructure is available. Developing an integrated infrastructure plan that uses GEO, MEO, LEO and terrestrial components to our best advantage is a necessary step. Government needs to influence approaches not only as a provider of public infrastructure but also as a customer for private infrastructure. Resilience is best achieved as a cooperative undertaking with industry, but absent leadership, nothing is going to happen. Until it does.