August 1994, early morning. Spain’s Central Pyrenees Mountains still in darkness.

At the outset of an ascent to a 3,000-meter peak along the international border, one of the co-authors encounters a group of tourist hikers who have begun searching for a colleague who had left the camp the previous evening. In the pre-sunrise gloom, helicopters cannot yet operate.

A week later, the body of the hiker is found. The rescue efforts came, unfortunately, too late.

If you know a bit about GNSS, inertial navigation systems (INS), remote sensing, and maps, and if you have ever seen an unmanned aircraft in flight, you can only come to the same conclusion as we did when we defined, proposed, and won the CLOSE-SEARCH project to develop an unmanned aerial system (UAS) for search and rescue (SAR) applications.

That is, if navigation can be performed accurately and reliably — and if elevation databases are accurate, up-to-date, and available — then an unmanned aircraft with the appropriate payload can be sent to systematically search for a lost person as soon as that individual is discovered to be missing.

Indeed, lightweight, easily deployable platforms can quickly provide good-quality imagery from the air or the ground.

Sound like a good idea? It is more than that: the idea is being borne out by growing efforts in the remote-sensing industry and at research institutions. Trimble’s recent acquisition of Gatewing, a provider of lightweight unmanned aerial vehicles (UAVs) for photogrammetry and rapid terrain mapping, is only one of many related commercial moves with which readers will be familiar.

Until now, the lack of regulatory support has been a show-stopper, preventing the final push toward UAV commercialization. However, regulators finally seem willing to move forward. As reported in the Institute of Navigation’s Winter 2011 newsletter, recent Congressional legislation directs the U.S. Secretary of Transportation to “develop a comprehensive plan to safely accelerate the integration of civil UAS into the national airspace system as soon as practicable, but not later than September 30th, 2015.” Hence, we are on the way to a future in which robots fly around, cooperating with humans – a future in which robots even search for humans.

The SAR Potential of Unmanned Technology

When a small plane crashes in a remote area, a fishing boat is lost at sea, a hurricane devastates a region, or a person simply gets lost while hiking, SAR teams must scan vast areas in search of evidence of victims or wreckage.

For this purpose, UAVs equipped with remote-sensing devices can be programmed to fly predefined patterns, possibly at low altitudes (30 to 150 meters), and produce various types of images (thermal, optical, and so forth) captured from their privileged point of view. Ideally, this imagery is transmitted in real time back to a ground control station via a data link.
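A common form of such a predefined pattern is a back-and-forth ("lawnmower") sweep. The sketch below generates waypoints of that kind over a rectangular area in a local east/north frame. The function name, the rectangular search area, and the overlap parameter are illustrative assumptions, not the CLOSE-SEARCH mission planner.

```python
import math


def lawnmower_waypoints(width_m, height_m, swath_m, overlap=0.2):
    """Return (east, north) waypoints that sweep a width_m x height_m rectangle.

    Hypothetical helper: tracks run south-north and are spaced by the sensor
    swath reduced by the requested side overlap, so adjacent strips overlap.
    """
    if swath_m <= 0 or not 0.0 <= overlap < 1.0:
        raise ValueError("swath must be positive and overlap in [0, 1)")
    spacing = swath_m * (1.0 - overlap)
    # Small tolerance avoids an extra duplicate track from rounding noise.
    n_tracks = math.ceil(width_m / spacing - 1e-9) + 1
    waypoints = []
    for i in range(n_tracks):
        east = min(i * spacing, float(width_m))  # last track clamped to the edge
        # Alternate direction on each track so the aircraft never backtracks.
        if i % 2 == 0:
            waypoints += [(east, 0.0), (east, float(height_m))]
        else:
            waypoints += [(east, float(height_m)), (east, 0.0)]
    return waypoints
```

In practice the swath would be the camera footprint width at the planned altitude, and the overlap would be chosen so that a target near a strip edge is still imaged.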

The use of UAVs for so-called “wilderness SAR” is evolving rapidly; see, for example, the discussion in the article by M. Goodrich et al. listed in the Additional Resources section near the end of this article. The navigation aspects of the specific platforms operated in those missions leave room for additional research.

CLOSE-SEARCH, a project funded under the European Community’s Seventh Framework Programme, addresses the scenario just described: a person is lost outdoors, and a distress message is generated, either by the person him- or herself or by his or her companions. In response, the civil protection authority issues an alert providing a rough estimate of the area in which the person may be. This prompts an SAR team to move as close as possible to that area carrying a UAS composed of a rotary-wing platform and its ground control station (GCS).

The airborne segment of the UAS is equipped with vision systems, such as thermal and optical cameras. The team’s goal is to fly the UAV in search of the missing person and, if successful, to provide precise coordinates of his or her location. At that point, the rescue is triggered and fingers are crossed. This operation may need to take place day or night, possibly in adverse weather, and fast enough to minimize the lost person’s anxiety or, ultimately, to save a life.

When thinking about the key system requirements that enable a UAS to be used for SAR, one realizes that navigation safety is crucial. Indeed, as UAS move from military and governmental applications to the commercial or mass-market level, safe navigation will prove to be a “must.”

The CLOSE-SEARCH Project

Within the CLOSE-SEARCH project, a hybrid multi-sensor navigation system has been developed, augmenting the standard baseline of integrated INS and GPS. The hybrid system couples the INS/GPS with the European Geostationary Navigation Overlay Service (EGNOS) and other navigation sensors, such as barometric altimeters (BA), while also enabling us to explore the use of redundant inertial measurement units (RIMUs).

The project’s goal was to assess the potential of a low-cost, highly redundant system and ultimately demonstrate EGNOS-based UAV control with an integrated EGNOS-GNSS/RIMU/BA solution. Details of the navigation system design can be found in the sidebar article, “Flight Control and Target Identification Requirements and Results with EGNOS and Multi-Constellation GNSS.”

Regarding safety, a key metric was integrity. The project sought to exploit the integrity-monitoring capability of EGNOS to generate protection boundaries for the unmanned platform.
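In SBAS terms, such protection boundaries are usually computed as horizontal and vertical protection levels (HPL, VPL). The sketch below assumes the standard SBAS MOPS (RTCA DO-229) formulation, in which the position error covariance in east-north-up coordinates (from a weighted least-squares solution using EGNOS-provided error variances) is scaled by fixed multipliers. It is an illustration of that standard formulation, not necessarily how CLOSE-SEARCH computed its bounds; the function names are hypothetical.

```python
import math

K_H_PA = 6.0    # horizontal multiplier, precision approach (RTCA DO-229)
K_H_NPA = 6.18  # horizontal multiplier, en route / non-precision approach
K_V_PA = 5.33   # vertical multiplier, precision approach


def sbas_protection_levels(var_east, var_north, cov_en, var_up, precision_approach=True):
    """Return (HPL, VPL) in metres from the ENU position error covariance.

    var_* are variances (m^2) and cov_en the east-north covariance (m^2).
    """
    half_sum = (var_east + var_north) / 2.0
    half_diff = (var_east - var_north) / 2.0
    # Semi-major axis of the horizontal 1-sigma error ellipse.
    d_major = math.sqrt(half_sum + math.sqrt(half_diff ** 2 + cov_en ** 2))
    k_h = K_H_PA if precision_approach else K_H_NPA
    return k_h * d_major, K_V_PA * math.sqrt(var_up)


def is_available(hpl, vpl, hal, val):
    """Navigation is usable only while both protection levels stay within their alert limits."""
    return hpl <= hal and vpl <= val
```

For a UAV, the alert limits (HAL, VAL) would come from the low-altitude search requirements rather than from aviation approach procedures.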

The idea of incorporating integrity into non-conventional platforms is of growing interest, and implementing it on unmanned platforms may even become necessary under the regulatory processes mentioned earlier. It goes like this: you own a UAV that you want to fly, so you need to demonstrate that a navigation failure has a (very) low probability of occurring, and that the consequences of a failure slipping into your aircraft’s control system are bounded by a predictable amount.

The question is: how far are those of us interested in operating UAVs from reaching that capability? The sidebars “Requirements for Precision-Based Integrity and Geodetic Quality Control in UAV-Based SAR Missions” and “Safe Navigation from Hybrid, Redundant Navigation Sensor Systems” explore that question in greater detail based on experience from the CLOSE-SEARCH project.

The CLOSE-SEARCH Prototype

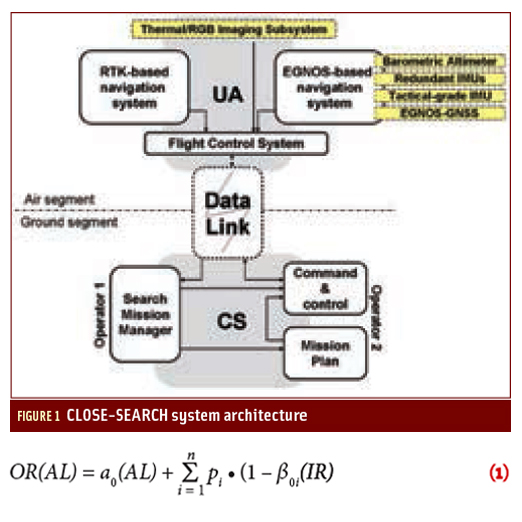

Figure 1 depicts the air and ground segments of our proposed system’s architecture. As is common for UAS architectures, the prototype results from integrating imaging, navigation, communications, and mission planning; that is, it is a system of systems. In the figure, yellow boxes highlight imaging and navigation sensors, and white boxes identify software components on the aerial platform and on the ground. The following sections describe the various components in more detail.

Aerial Platform, Ground Control Station and Communications. Every UAS comprises three fundamental components: the UAV (or drone), the GCS, and a communication data link between them. In CLOSE-SEARCH, all three were provided by a project partner, the Asociación de la Industria Navarra (AIN), from Pamplona (Spain). Accompanying photos show the UAV in flight during one of the project tests, and outdoor and indoor views of the GCS.

The UAR-35 is a non-commercial, in-house rotary-wing platform about three meters long, with a minimum take-off weight of 75 kilograms and an 18-horsepower engine.

The flight control system (FCS), responsible for controlling the platform, is also an in-house development. Its navigation input comes from a real-time kinematic (RTK) GNSS system that incorporates IMU and magnetometer measurements for attitude determination. Because RTK techniques provide centimeter-level accuracy, this RTK-based navigation system served as the reference for validating the EGNOS-based navigation system, which was developed specifically within CLOSE-SEARCH.
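Validation against an RTK reference can be sketched as an epoch-by-epoch comparison of the two trajectories. The sketch below assumes both solutions are already time-aligned and expressed in a common local east-north-up frame; the statistics chosen (RMS and a nearest-rank 95th percentile) are common choices, not necessarily those used in the project, and the function name is hypothetical.

```python
import math


def error_statistics(test_enu, reference_enu):
    """Compare a test trajectory with a reference trajectory.

    Both inputs are equal-length lists of (east, north, up) tuples in metres.
    Returns (horizontal RMS, vertical RMS, 95th-percentile horizontal error).
    """
    if len(test_enu) != len(reference_enu) or not test_enu:
        raise ValueError("trajectories must be non-empty and of equal length")
    h_err, v_err = [], []
    for (e, n, u), (e_ref, n_ref, u_ref) in zip(test_enu, reference_enu):
        h_err.append(math.hypot(e - e_ref, n - n_ref))  # horizontal error
        v_err.append(u - u_ref)                         # signed vertical error
    count = len(h_err)
    h_rms = math.sqrt(sum(x * x for x in h_err) / count)
    v_rms = math.sqrt(sum(x * x for x in v_err) / count)
    ordered = sorted(h_err)
    rank = max(0, min(count - 1, math.ceil(0.95 * count) - 1))  # nearest-rank percentile
    return h_rms, v_rms, ordered[rank]
```

Since the RTK reference is itself accurate only to the centimeter level, errors of that order in the comparison cannot be attributed to the EGNOS-based solution alone.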

Due to its high payload capacity (up to 30 kilograms), the UAV that we used was an ideal platform to integrate remote sensing instruments or other navigation sensors.

On the ground, the GCS is mounted on a four-wheel-drive van outfitted with three computers and the appropriate ancillary electronics. A redundant power supply system is mounted in the cargo area.

At the software level, “command & control” software receives the platform telemetry and sends appropriate commands to the aerial platform. Also installed in the GCS vehicle’s computers is the “Search Mission Manager” software, which receives, displays, and enables a photo-interpreter to interact with images from the UAV.

At least two operators monitor and command the UAV during the search mission: a “safety remote pilot” and the image interpreter. This two-person crew also drives the GCS vehicle, mounts and dismounts the helicopter, and performs associated logistical tasks.

Mission planning is usually performed off-line and loaded into the GCS, but the same process can be done on-site. UAV waypoints and actions can also be modified during the mission. For instance, if the operator sees something of interest in an image, he or she can modify the route and revisit that location, if only to discard a false alert. To generate the mission plan, LiDAR-based digital surface models (DSMs) with a point density of 0.5 points/m² were used. These models are now available for the entire region of Catalonia from the Institute of Cartography of Catalonia (ICC).
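One way a DSM can enter the mission plan is by setting each flight leg high enough to clear the terrain and structures beneath it. The sketch below stores the DSM as a regular grid (row index increasing northward from the grid origin), uses nearest-cell lookup, and gives each leg a constant altitude equal to the highest sampled surface point plus a clearance margin. The grid layout, the clearance rule, and the function names are illustrative assumptions; a real planner would interpolate the DSM and respect the mission's altitude band.

```python
import math


def dsm_height(dsm, cell_m, east, north):
    """Nearest-cell DSM lookup; dsm[row][col] with row = north / cell_m, col = east / cell_m."""
    row = min(max(int(round(north / cell_m)), 0), len(dsm) - 1)
    col = min(max(int(round(east / cell_m)), 0), len(dsm[0]) - 1)
    return dsm[row][col]


def assign_altitudes(waypoints, dsm, cell_m, clearance_m, step_m=1.0):
    """Return (start, end, altitude) per leg, clearing the highest sampled DSM cell."""
    legs = []
    for (e0, n0), (e1, n1) in zip(waypoints, waypoints[1:]):
        samples = max(1, math.ceil(math.hypot(e1 - e0, n1 - n0) / step_m))
        highest = max(
            dsm_height(dsm, cell_m,
                       e0 + (e1 - e0) * k / samples,
                       n0 + (n1 - n0) * k / samples)
            for k in range(samples + 1)  # include both leg endpoints
        )
        legs.append(((e0, n0), (e1, n1), highest + clearance_m))
    return legs
```

Sampling at a step finer than the DSM cell size ensures that no cell crossed by a leg is skipped.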

Two data links are established in the prototype: command & control, consisting of a downlink to transmit the telemetry data from the UAV to the GCS, and an uplink to send commands to the UA; and the payload data link, which provides a downlink to transmit real-time thermal and optical images captured by the aerial platform.

Design of these data links employed two communication architectures to address user requirements. The first and principal architecture was based on line-of-sight (LoS) communications. More precisely, WiFi technology was used for both data links (command & control and payload) because of its suitable range and bandwidth. Its drawbacks are performance degradation with increasing UA-GCS distance (tested up to four kilometers with the current system) and in the presence of obstacles that block the wireless signals.
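The distance-related degradation can be illustrated with the free-space (Friis) path loss, which grows by about 6 dB each time the distance doubles. The sketch below assumes ideal free-space propagation, so it ignores the obstacles, Fresnel-zone blockage, and multipath that matter in mountainous terrain; the transmit power, antenna gains, and receiver sensitivity in any call are hypothetical values, not the prototype's link budget.

```python
import math


def free_space_path_loss_db(distance_km, freq_mhz):
    """Free-space path loss in dB (distance in km, frequency in MHz)."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44


def link_margin_db(distance_km, freq_mhz, tx_dbm, tx_gain_dbi, rx_gain_dbi, rx_sensitivity_dbm):
    """Received power minus receiver sensitivity; a negative margin means the link fails."""
    rx_dbm = tx_dbm + tx_gain_dbi + rx_gain_dbi - free_space_path_loss_db(distance_km, freq_mhz)
    return rx_dbm - rx_sensitivity_dbm
```

For example, assuming a 2.4 GHz WiFi channel, the free-space loss at 4 km is about 112 dB, roughly 12 dB more than at 1 km.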

These drawbacks conflict with the requirements of SAR missions: finding people in difficult-to-access areas with no road access often requires flying and scanning in mountainous terrain, where direct LoS between the UAV and the GCS can be lost. Consequently, a beyond-line-of-sight (BLoS) architecture was also considered to fulfill the SAR mission in such situations.
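Whether LoS will be lost at a given point of the planned route can be checked in advance against the same kind of DSM used for mission planning. The sketch below tests whether the straight ray between the GCS antenna and the UAV stays above every DSM cell it crosses. It assumes heights share the DSM datum and ignores Fresnel-zone clearance and Earth curvature (the latter amounts to roughly a meter over 4 km); the function name and grid layout are hypothetical.

```python
import math


def has_line_of_sight(dsm, cell_m, gcs, uav, step_m=5.0):
    """Return True if the straight GCS-UAV ray clears every DSM cell sampled along it.

    gcs and uav are (east, north, height) tuples in metres; dsm[row][col] holds
    surface heights on a grid with row = north / cell_m and col = east / cell_m.
    """
    (e0, n0, h0), (e1, n1, h1) = gcs, uav
    samples = max(1, math.ceil(math.hypot(e1 - e0, n1 - n0) / step_m))
    for k in range(1, samples):  # endpoints skipped: both antennas sit above the surface
        t = k / samples
        e, n = e0 + t * (e1 - e0), n0 + t * (n1 - n0)
        row = min(max(int(round(n / cell_m)), 0), len(dsm) - 1)
        col = min(max(int(round(e / cell_m)), 0), len(dsm[0]) - 1)
        if dsm[row][col] >= h0 + t * (h1 - h0):  # terrain reaches the ray
            return False
    return True
```

Running such a check over all planned waypoints would flag the route segments where only a BLoS link could keep the UAV connected.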

The candidate technology for the BLoS architecture was Worldwide Interoperability for Microwave Access (WiMAX). This technology, based on a wireless communications standard designed to provide data rates of 30 to 40 megabits per second, has been tested in controlled UAV flights to assess its suitability for transmitting and receiving command & control and payload data. These tests yielded more than satisfactory results and demonstrated potential for future improvement.

Vision Systems to Detect Human Body Heat. Within a reasonable time after notification that a person has gone missing, body heat is a key indicator when searching for people from above. In addition, in human-unfriendly outdoor scenarios (e.g., cold environments or darkness), the heat of a person’s body might be the only differentiator when searching for him or her.

But besides thermal vision, being able to “see as humans see” was also requested by SAR operators, as sometimes a piece of clothing, a bag, or other human belongings might provide a crucial hint of a lost person’s whereabouts. Thus, a good complement to thermal vision in search missions is optical vision.

Previous work on combined thermal and color imagery in UAVs has pointed out the difficulty of identifying people in thermal images with trained algorithms, owing to the low resolution of such cameras, blurring caused by halos around thermal targets, and so on. (For further discussion of these points, see the work by P. Rudol and P. Doherty in Additional Resources.)

In our project, the ultimate analysis of images is done by the (human) operator in the GCS, who watches the images in real-time and decides whether a thermal spot and its corresponding colored image represent a lost person. In this, the operator is aided by an automatic feature-highlighting algorithm. If uncertain, the same waypoints can be re-flown just by modifying the mission plan in situ.
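The article does not describe the feature-highlighting algorithm itself. As a generic illustration only, the sketch below highlights warm regions in a thermal frame by thresholding and grouping above-threshold pixels into 4-connected regions, returning a bounding box for each region large enough to be worth the operator's attention. The threshold, the minimum region size, and the function name are hypothetical.

```python
from collections import deque


def highlight_hot_spots(image, threshold, min_pixels=2):
    """Return bounding boxes (row0, col0, row1, col1) of connected warm regions.

    image is a list of rows of pixel values (e.g. radiometric temperatures).
    Pixels above threshold are grouped with 4-connectivity; regions smaller
    than min_pixels are discarded as likely noise.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Breadth-first flood fill of one warm region.
            queue, region = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) >= min_pixels:
                ys = [p[0] for p in region]
                xs = [p[1] for p in region]
                boxes.append((min(ys), min(xs), max(ys), max(xs)))
    return boxes
```

The boxes would only draw the operator's eye to candidate spots; the decision on whether a spot is a person stays with the human interpreter, as described above.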

Two sensors make up the remote-sensing component integrated on the UAV: a thermal camera with 320 × 240 pixels, a 25-millimeter focal length, a 33° × 25° field of view (FOV), and sensitivity in the 8–12 μm spectral range; and a 582 × 500–pixel color camera that accepts lenses of various focal lengths.
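The ground sampling distance of such a camera follows from its FOV, pixel count, and flying height. The sketch below assumes a nadir-looking camera over flat terrain and ignores lens distortion; the function name is hypothetical.

```python
import math


def ground_sampling_distance(altitude_m, fov_deg, pixels):
    """GSD in metres per pixel for a nadir-looking camera over flat terrain."""
    footprint_m = 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)
    return footprint_m / pixels
```

For the thermal camera (33° across 320 pixels), a hypothetical flying height of 30 m gives a footprint of about 17.8 m and a GSD of roughly 5.5 cm, which is of the same magnitude as the thermal-image GSDs reported in this section. Because GSD scales linearly with height, halving the altitude halves the GSD.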

Without any a priori geometric or radiometric calibration, both cameras were mounted facing downward on a carbon-fiber sheet attached to the payload frame by cup-style isolators. These isolators provide vibration isolation for frequencies down to 10 hertz and exhibit low transmissibility at resonance.
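How much vibration such isolators pass on can be approximated with the textbook single-degree-of-freedom transmissibility model, in which isolation (transmissibility below 1) starts only above √2 times the mount's natural frequency. The natural frequency and damping ratio in any call below are hypothetical, not datasheet values of the isolators used.

```python
import math


def transmissibility(freq_hz, natural_freq_hz, damping_ratio):
    """Transmitted / input vibration amplitude for a 1-DOF spring-damper mount."""
    r = freq_hz / natural_freq_hz           # frequency ratio
    two_zeta_r_sq = (2.0 * damping_ratio * r) ** 2
    return math.sqrt((1.0 + two_zeta_r_sq) / ((1.0 - r * r) ** 2 + two_zeta_r_sq))
```

With a damping ratio of 0.1, the mount amplifies vibration about fivefold at resonance but passes only about 2% of it at ten times the natural frequency, which is why the mount's natural frequency should sit well below the dominant engine and rotor frequencies.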

The accompanying thermal images were captured during the first (left) and second (right) campaigns. In the left image, with a ground sampling distance (GSD) of 5.5 × 5.5 centimeters, two people lying down are clearly distinguishable against the ground (regular flat terrain) during a day test in central Catalonia (November 25, 2011). In the right image, with a GSD of 3.7 × 3.7 centimeters, another person can be recognized against the same type of ground, this time during a night mission in March 2012.

A second set shows paired RGB (red, green, and blue) and thermal images captured during the last test campaign of the project. In that test, a person stood and waved his arms as though asking for help. These images were taken in daylight near Pamplona (February 28, 2012) and have a GSD of 7.3 × 7.3 centimeters for both the thermal and color images. The full video footage can be seen on the project’s YouTube channel, mentioned in the section “About the CLOSE-SEARCH Project.”

Safe Navigation for Low-Altitude Unmanned Aerial Missions

In an ideally well-organized and technologically advanced world, the integration of UAS into non-segregated airspace would be feasible. UAVs would coexist with manned platforms and cooperate seamlessly with ground teams. However, neither technology nor regulations — nor, probably, our own minds — are yet there. Among other factors, sense-and-avoid and safe navigation capabilities are needed for that.

In our context, safe navigation refers to real-time positioning with a low probability of failure and within spatial boundaries defined by low-altitude search requirements. In practice, this “low failure probability” should translate into a “navigation never fails” de facto reliability. Moreover, in an ideally technologically advanced world, a single perfect navigation instrument would suffice.

In practice, we approximate a perfect navigation instrument by extending our low-redundancy INS/GNSS baseline system into a highly redundant multi-sensor system. More specifically, redundancy can be added by using GNSS augmentation systems (EGNOS in the European region), other available GNSSes (GLONASS), or future GNSSes (Galileo, Compass). We could also build redundancy by replacing a high-end tactical-grade IMU with four MEMS-based IMUs or by adding magnetometers and baro-altimeters.
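Why several MEMS IMUs can stand in for a better one is easiest to see for white noise: averaging N sensors with independent noise divides the noise standard deviation by √N, so four IMUs halve it. The simulation below assumes independent, zero-mean Gaussian noise on a stationary gyro axis; co-mounted sensors on a vibrating platform can have positively correlated errors, which would reduce this gain. The function name is hypothetical.

```python
import random
import statistics


def simulate_averaged_imus(n_imus, n_samples, noise_std, seed=0):
    """Simulate n_imus gyros measuring a zero rate with independent white noise.

    Returns (std of a single IMU, std of the sample-wise average of all IMUs).
    """
    rng = random.Random(seed)
    single, averaged = [], []
    for _ in range(n_samples):
        readings = [rng.gauss(0.0, noise_std) for _ in range(n_imus)]
        single.append(readings[0])
        averaged.append(sum(readings) / n_imus)
    return statistics.pstdev(single), statistics.pstdev(averaged)
```

Beyond noise reduction, redundant units also allow a faulty sensor to be detected by comparing it with the others, which is the integrity side of redundancy.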

These instruments are usually complemented with short-range sensors in support of specific UAV maneuvers, such as take-off and landing. Last but not least, we note that “safe” or “low failure probability” must be quantified in the context of the CLOSE-SEARCH application requirements.

Generally speaking and in classical geodetic terms, “augmenting” a system or adding redundancy to it translates into higher precision and reliability. If the redundant measurements are correctly modeled, that will also result in higher accuracy. In the language of navigation, more redundancy translates into higher accuracy and a smaller integrity risk (IR).

We define the operational risk (OR) as the probability of occurrence of a situation by which safety is threatened for a particular operation. In other words, OR can be understood as the combination of navigation-related factors leading to unacceptable precision (or accuracy, in absence of model errors and measurement outliers) or to a loss of integrity. Note that OR is a combination of the precision-related risk, as precision is the metric of accuracy in nominal non-faulty conditions, and the integrity risk, which is the probability of missed detection of faults causing a hazardous error.

More specifically, we distinguish two situations contributing to the OR: (1) under nominal conditions, the error of a navigation state (position, velocity, or time) exceeds some tolerance or alert limit (AL) regarded as hazardous for the particular application; or (2) a failure event has occurred and gone undetected (missed detection), with an effect on the navigation solution that exceeds the AL. Mathematically, given an IR,

$$\alpha_0(AL) + \sum_{i=1}^{n} p_i \bigl(1 - \beta_{0i}(IR)\bigr) \le IR \qquad (1)$$

where:

- $\alpha_0(AL)$ is the probability that, under nominal fault-free conditions, the navigation state error hazardously exceeds the tolerable limit AL (related to the system’s PVT precision);

- $p_i$ is the probability of occurrence of each element $e_i$ of the failure-type set $F = \{e_1, \ldots, e_n\}$ (related to the failure rates of the navigation sensors or systems);

- $1 - \beta_{0i}(IR)$ is the probability of a type II error, that is, of missed detection of failure $e_i$ (related to the system’s reliability).

For the given specifications AL and IR, an ideal system satisfies equation (1) at the lowest cost, weight, electrical power consumption, and so forth.
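The operational-risk budget defined above (a nominal exceedance term, plus, for each failure type, its probability of occurrence times its probability of missed detection, compared against IR) can be evaluated numerically. The sketch below assumes a one-dimensional, zero-mean Gaussian error model for the nominal term; the failure probabilities and detection probabilities in any call are hypothetical numbers, not CLOSE-SEARCH values, and the function names are illustrative.

```python
import math


def nominal_exceedance_prob(alert_limit, sigma):
    """alpha_0(AL) = P(|e| > AL) for a zero-mean Gaussian error e with std sigma."""
    return math.erfc(alert_limit / (sigma * math.sqrt(2.0)))


def operational_risk(alert_limit, sigma, failures):
    """OR = alpha_0(AL) + sum_i p_i * (1 - beta_0i).

    failures is a list of (p_i, beta_0i) pairs: the probability that failure
    type e_i occurs and the probability that the monitor detects it.
    """
    alpha_0 = nominal_exceedance_prob(alert_limit, sigma)
    return alpha_0 + sum(p * (1.0 - beta) for p, beta in failures)


def meets_requirement(alert_limit, sigma, failures, integrity_risk):
    """True if the total risk stays within the specified integrity risk IR."""
    return operational_risk(alert_limit, sigma, failures) <= integrity_risk
```

With AL equal to 6σ, the nominal term is about 2 × 10⁻⁹, so a failure with p = 10⁻⁵ detected 99.9% of the time still fits within an IR of 10⁻⁷; with AL equal to 3σ, the nominal term alone (about 0.3%) already breaks that budget, regardless of how well failures are monitored.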

Safe navigation in CLOSE-SEARCH can thus be regarded as an exercise to identify correct requirements and to design an ideal system accordingly, that is, to set integrity requirements — among others — for our SAR application domain and vehicle. The project has also sought to approximate the ideal system through a hybrid navigation system including redundant IMUs and other sensors, and, last but not least, to benefit from EGNOS and multi-constellation configurations for platform navigation and target identification tasks.

Lessons Learned about Use of Unmanned Platforms in SAR Operations

We thought we might close by compiling our experiences with the use of unmanned platforms in search operations. These comments result from close work with several SAR teams who would eventually need these platforms and who provided valuable input to the project. Of course, we also offer observations in case any interested reader is inclined to jump on this business opportunity.

1. The three famous words that describe the nature of UAV operations (dull, dirty, and dangerous) hold especially true for SAR missions. SAR teams ask for autonomous machines that operate continuously, day and night, on long missions and in adverse weather. This is definitely a challenge for UAV manufacturers willing to explore the SAR field. Within the project, a short night flight was demonstrated; night operations are probably a case in which UAVs could provide a service where other means simply cannot.

2. Nowadays, RTK is the most common GNSS processing scheme implemented in UAVs. But in SAR-like missions (navigating behind mountains, several hundred kilometers away, or with mobile control stations), LoS-dependent RTK systems would simply fail to meet mission requirements. In contrast, satellite-based augmentation system (SBAS) solutions, such as EGNOS or the U.S. Wide Area Augmentation System (WAAS), offer acceptable performance without the local stationary setups or dedicated communication links that RTK requires. In addition, the availability of integrity information may be what kick-starts the regulation of safe operations in any open-air environment.

3. One of the issues in certifying UAV operations is sense-&-avoid capability. Without a human on board, unmanned aircraft lack a built-in capability to avoid mid-air collisions when radar coverage is absent or transponders are inoperable or not installed, and also to avoid ground obstacles (basically, manmade structures). This latter issue could conceivably be addressed by using LiDAR-based DSMs in the mission planning component of the system. Discussion was raised about the need for DSMs to contain up-to-date information on existing infrastructure (power lines, buildings, etc.); thus, cartography providers should generate suitably updated products as a first step toward a complete reliability concept for UAVs (sense-&-avoid plus navigation integrity).

4. The trend is to go smaller and smaller, for technology in general and for sensors in particular. This is in line with the miniaturization that UAVs are also undergoing, as smaller payloads require smaller equipment and sensors. Vision sensors nowadays achieve centimeter-level GSDs at acceptable frame rates (up to one hertz), enabling their use in more demanding fields such as geomatics. Generic infrared sensors (thermal, night vision) are also improving, which is of interest for surveillance applications and, by extension, for SAR. This article’s results are just a starting point for further investigation of UAV-based thermal vision.

5. SAR users pointed out many applications as possibly suitable for UAV-based systems, which might be interesting from a business perspective: a system operating transversally, i.e., in both SAR and non-SAR operations, could more easily reach a satisfactory return on investment. Examples include agricultural management, detection of and response to forest fires, aerial pollution monitoring, aerial road traffic control, and sea search missions, among others.

6. UAV motion is quite constrained in small- or medium-sized rotary-wing UAVs, especially in surveillance-type missions (low speeds, near-zero pitch and roll, smooth transitions between flight phases). However, our platform’s engine (one-cylinder, two-stroke, air-cooled), operating at full power, proved to be a source of high-frequency, high-amplitude vibrations that severely affected the IMU measurements. Further IMU modeling for high-vibration environments has been added to the project’s work, and much work still remains to achieve optimal sensor-fusion performance.
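A first step in modeling such vibrations is identifying their dominant frequency in the raw accelerometer data. The sketch below removes the mean (gravity plus bias) and returns the frequency of the strongest bin of a plain discrete Fourier transform; it is illustrative only, uses an O(N²) DFT for self-containment (numpy.fft.rfft would be the usual choice), and the function name and test signal are hypothetical.

```python
import math


def dominant_frequency(samples, sample_rate_hz):
    """Return the frequency (Hz) of the largest non-DC DFT bin of a real signal."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove gravity/bias (DC) component
    best_bin, best_power = 0, -1.0
    for k in range(1, n // 2 + 1):  # positive frequencies up to Nyquist
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * sample_rate_hz / n
```

Vibration above half the IMU sampling rate aliases into lower frequencies, so an apparent low-frequency peak may in fact come from the engine; knowing the true frequency helps in choosing the sampling rate, the anti-aliasing filtering, and the mechanical isolation.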

About the CLOSE-SEARCH Project

The research leading to these results was carried out within the CLOSE-SEARCH project (see the project website) and has been funded by the European Community’s Seventh Framework Programme under grant agreement no. 248137, managed by the European GNSS Agency (GSA), in response to the call “Use of EGNOS Services for Mass Market: Innovative Applications targeted to SMEs”.

CLOSE-SEARCH has been carried out by a heterogeneous yet complementary consortium led by a research center (Institute of Geomatics) and including a private non-profit technology center (Asociación de la Industria Navarra), the Geodetic Engineering Laboratory (TOPO) of the École Polytechnique Fédérale de Lausanne (EPFL), an aerospace engineering company (DEIMOS Engenharia), a public research agency and geospatial data provider (Institute of Cartography of Catalonia), and an end user (the Catalan Civil Protection Authority).

Additionally, a User Advisory Board was created, consisting of representatives from teams involved in real SAR operations. The board helped assess the development of the project by providing opinions and counsel. Various fields were represented on the board, including mountain rescue, sea rescue, and firefighting. More information on the board and its members can be found on the project website.

The authors would especially like to thank all the people involved in developing the project. Finally, the reader is welcome to visit the CLOSE-SEARCH YouTube channel, where videos of the prototype testing and imaging results can be found.

Additional Resources

Goodrich, M., J. L. Cooper, J. Adams, C. Humphrey, R. Zeeman, and B. G. Buss, “Using a Mini-UAV to Support Wilderness Search and Rescue: Practices for Human-Robot Teaming,” Proceedings of Safety, Security and Rescue Robotics, pp. 1–6, 2007

Molina, P., and I. Colomina, “Integrity Aspects of Hybrid EGNOS-based Navigation on Support of Search-and-Rescue Missions With UAVs,” Proceedings of ION GNSS 2011, September 19–23, 2011, Portland, Oregon, USA

Pullen, S., T. Walter, and P. Enge, “SBAS and GBAS Integrity for Non-Aviation Users: Moving Away from Specific Risk,” Proceedings of the 2011 International Technical Meeting of The Institute of Navigation, January 24–26, 2011, San Diego, California, USA

Roturier, B., E. Chatre, and J. Ventura-Traveset, “The SBAS Integrity Concept Standardised by ICAO. Application to EGNOS,” Proceedings of ION GNSS 2001, September 11–14, 2001, Salt Lake City, Utah, USA

Rudol, P., and P. Doherty, “Human Body Detection and Geolocalization for UAV Search and Rescue Missions Using Color and Thermal Imagery,” Proceedings of the IEEE Aerospace Conference, March 1–8, 2008, Big Sky, Montana, USA

Waegli, A., J. Skaloud, S. Guerrier, M. E. Parés, and I. Colomina, “Noise Reduction and Estimation in Multiple Micro-electromechanical Inertial Systems,” Measurement Science and Technology, 21:065201 (11 pp.), April 2010