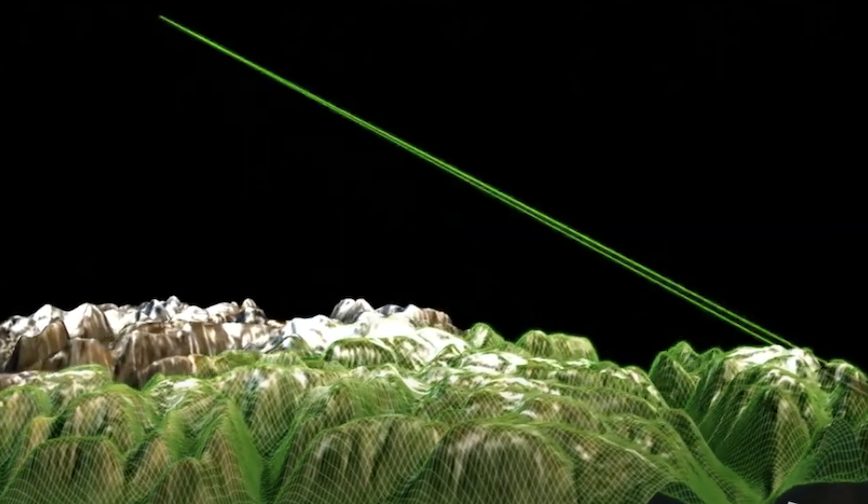

Leidos showcased its system for airborne navigation in GPS-denied environments at the recent Association of the United States Army (AUSA) 2020 conference in Washington, D.C., October 11–13. The system, called Assured Data Engine for Positioning and Timing (ADEPT), compares the high-resolution camera view below a plane to a detailed satellite map. ADEPT can almost instantly match the view to an exact spot on the map, yielding a precise fix in real time, according to the company. The system also works for military mounted and dismounted applications and for weapons systems.

“It’s doing just what a pilot would do—looking down at the ground to recognize landmarks,” explains Dr. Jonathan Ryan, who manages vision-based navigation for the Position, Navigation, and Timing (PNT) programs at Leidos. “But it’s doing it much faster and more accurately, and it can do it anywhere.”

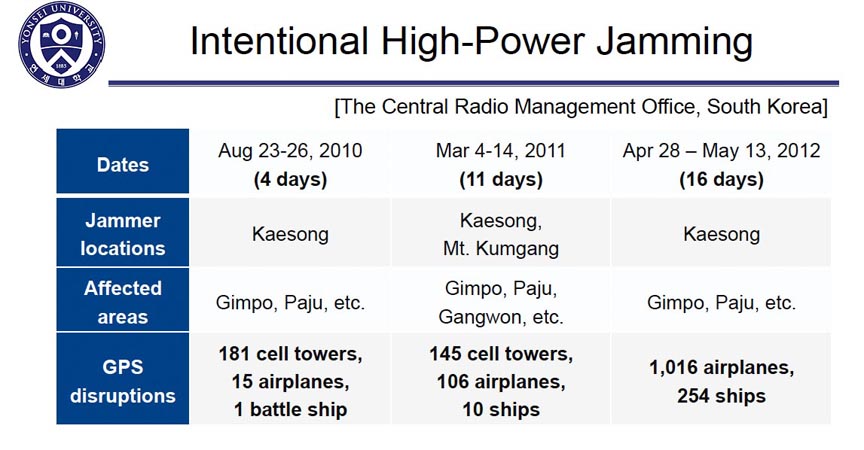

For military airborne missions in a highly contested international theater, loss of accurate GPS through jamming or spoofing poses a critical threat. Even a tiny navigation error can make it difficult to avoid threats, find targets, and complete missions safely.

ADEPT also takes advantage of heading, airspeed, and a video feed from equipment that is already on hand in most military aircraft.

With machine learning and other sophisticated software techniques, ADEPT can recognize landmarks and terrain even when human eyes would have trouble distinguishing identifying details, including over desert, unbroken forest, or salt flats. It can do so when flying through or over clouds, as long as it can grab brief glimpses of the ground through occasional holes in the cloud cover.

At night, the system can rely on infrared imaging, or use stars and other celestial objects to get a fix. Even map images that have been rendered obsolete by the addition or destruction of buildings and roads don’t keep ADEPT from recognizing the right spot. “It doesn’t need an exact match, as long as some of the details in the area remain the same,” says Ryan.

The system can provide precise navigation information even above extended, solid cloud cover or flying far out over the ocean. By pulling in data from other standard sensors on the aircraft — including airspeed indicators, barometric altimeters, and inertial measurement devices — and adding in any signals it can pick up from radio and cellphone towers or communications satellites, the system can usually continue to update position with good accuracy until it can get a more precise update from the next glimpse of the ground.
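The idea of coasting between ground fixes can be illustrated with a minimal sketch. This is not Leidos's sensor fusion engine, whose internals the company has not described here. It is a simplified dead-reckoning example with hypothetical names (`Estimate`, `dead_reckon`, `apply_fix`), an assumed linear drift rate, and a single scalar uncertainty. Position is advanced from heading and speed while the uncertainty grows, then an absolute fix pulls the estimate back using an inverse-variance (scalar Kalman-style) blend.

```python
import math
from dataclasses import dataclass


@dataclass
class Estimate:
    east_m: float   # position east of the start point, metres
    north_m: float  # position north of the start point, metres
    sigma_m: float  # 1-sigma position uncertainty, metres


def dead_reckon(est, heading_deg, speed_mps, dt_s, drift_mps=0.5):
    """Advance the estimate from heading and speed.

    drift_mps is an assumed, illustrative rate at which uncertainty
    grows while no absolute fix is available.
    """
    heading = math.radians(heading_deg)  # 0 deg = north, 90 deg = east
    return Estimate(
        east_m=est.east_m + speed_mps * dt_s * math.sin(heading),
        north_m=est.north_m + speed_mps * dt_s * math.cos(heading),
        sigma_m=est.sigma_m + drift_mps * dt_s,
    )


def apply_fix(est, fix_east_m, fix_north_m, fix_sigma_m):
    """Blend in an absolute fix, weighting each source by its inverse variance."""
    var_est = est.sigma_m ** 2
    var_fix = fix_sigma_m ** 2
    gain = var_est / (var_est + var_fix)  # near 1 when the fix is far more precise
    return Estimate(
        east_m=est.east_m + gain * (fix_east_m - est.east_m),
        north_m=est.north_m + gain * (fix_north_m - est.north_m),
        sigma_m=math.sqrt((1.0 - gain) * var_est),
    )


# One minute flying due east at 100 m/s with no view of the ground,
# followed by a single glimpse that yields a 5 m fix.
coasting = dead_reckon(Estimate(0.0, 0.0, 5.0), heading_deg=90.0, speed_mps=100.0, dt_s=60.0)
corrected = apply_fix(coasting, fix_east_m=6100.0, fix_north_m=50.0, fix_sigma_m=5.0)
```

In this toy case the uncertainty grows from 5 m to 35 m while coasting and drops back to just under 5 m after the fix, which mirrors the behavior described above: good accuracy between glimpses, and a sharper update when the ground reappears.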

In the future, radar image recognition will remove even solid cloud cover as an impediment.

ADEPT relies on a sensor fusion engine developed by Leidos. It can be deployed quickly on most airborne platforms and doesn’t rely on any specific hardware, according to Leidos. It runs on almost any type of available on-board computing platform, from a flight computer to a cell phone processor, which ensures it can also run on small drones and guided munitions, in addition to larger manned and unmanned systems.

ADEPT provides collaborative navigation, enabling multiple vehicles and even individual personnel, whether in the air or on the ground, to share sensor and position information. ADEPT can then piece together the information to provide each member of the group with their location and its uncertainty. “It might even be a drone that can fly outside the GPS-contested environment,” says Leidos engineer Chris Yeager, who helped develop the system. “Then it can give everyone else their position and velocity.”
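As a rough illustration of how one well-positioned vehicle could hand a position to a teammate, the sketch below assumes the anchor measures range and bearing to the teammate. The measurement type, the names `Position` and `relay_position`, and the single scalar uncertainty are all assumptions made for this example, not details Leidos has described. Bearing error is ignored for simplicity.

```python
import math
from dataclasses import dataclass


@dataclass
class Position:
    east_m: float
    north_m: float
    sigma_m: float  # 1-sigma uncertainty, metres


def relay_position(anchor, range_m, bearing_deg, range_sigma_m):
    """Locate a teammate from an anchor's position plus a measured range and bearing."""
    bearing = math.radians(bearing_deg)  # 0 deg = north, 90 deg = east
    return Position(
        east_m=anchor.east_m + range_m * math.sin(bearing),
        north_m=anchor.north_m + range_m * math.cos(bearing),
        # Independent errors add in quadrature, so the teammate's
        # uncertainty is never better than the anchor's own.
        sigma_m=math.hypot(anchor.sigma_m, range_sigma_m),
    )


# A drone with a 3 m position estimate sees a teammate 500 m due north,
# with 4 m of measurement error.
drone = Position(east_m=1000.0, north_m=2000.0, sigma_m=3.0)
teammate = relay_position(drone, range_m=500.0, bearing_deg=0.0, range_sigma_m=4.0)
```

Here the teammate receives both a location and an uncertainty of 5 m, the kind of pairing the article describes each group member getting.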

The system meets U.S. Army goals of deploying aircraft that can extend electronic sensing deep into the threat environment in order to deliver long-range precision fires, while remaining outside the range of air defense artillery. The speed and unpredictability of conflict today also require an aircraft that can quickly get to a hot spot from a distant base. At the same time, the aircraft must be able to loiter on station for hours on end, regardless of local weather or GPS jamming conditions.